Stephen Hawking warns AI could replace humanity

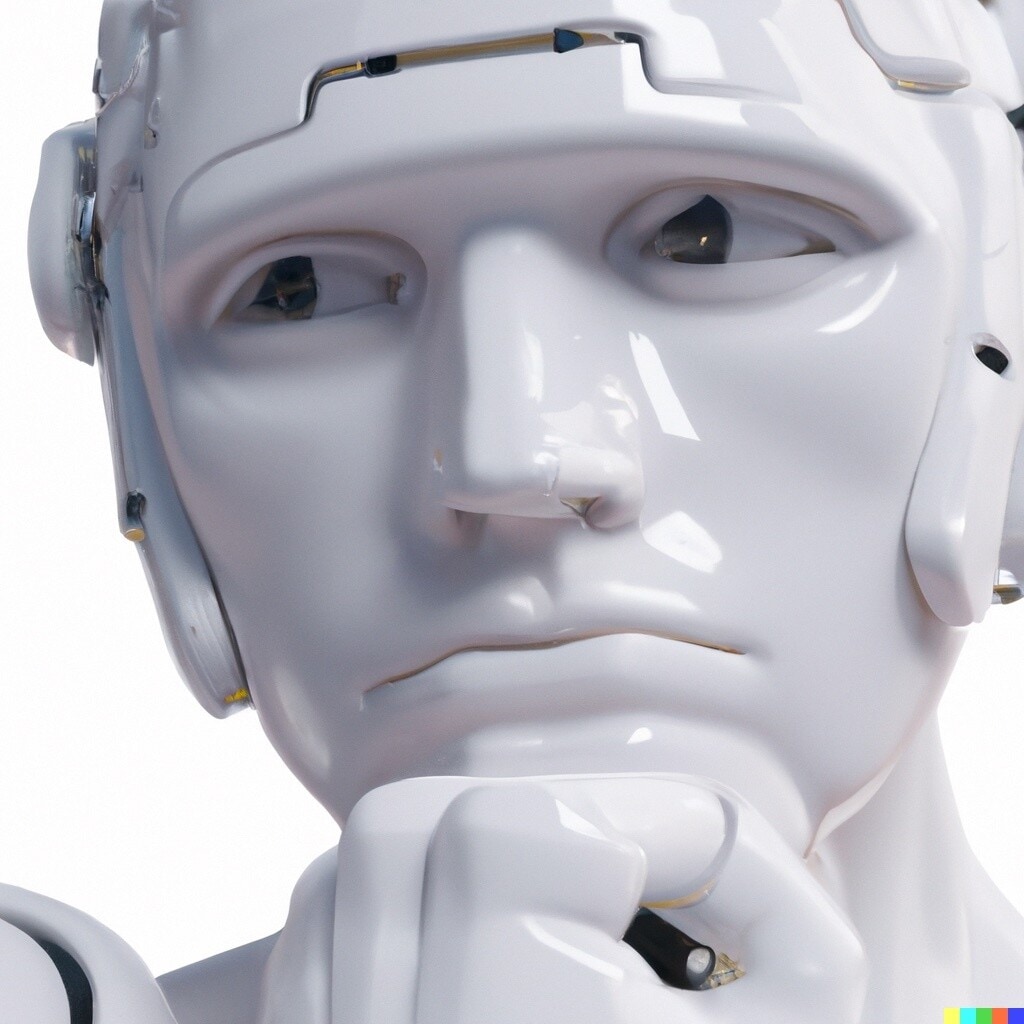

Stephen Hawking has warned that AI will soon become superintelligent and could replace people. Image: REUTERS/Mike Blake

The Point of No Return

Stephen Hawking fears it may only be a matter of time before humanity is forced to flee Earth in search of a new home. The famed theoretical physicist has previously said that he thinks humankind's survival will rely on our ability to become a multi-planetary species. Hawking reiterated, and in fact emphasized, the point in a recent interview with WIRED, in which he stated that humanity has reached "the point of no return."

Hawking said the need to find a second planetary home for humans stems both from concerns over a growing population and from the imminent threat posed by the development of artificial intelligence (AI). He warned that AI will soon become superintelligent, potentially to the point that it could replace humankind.

“The genie is out of the bottle. I fear that AI may replace humans altogether,” Hawking told WIRED.

It certainly wasn't the first time Hawking had issued such a dire warning. In an interview with The Times back in March, he said that an AI apocalypse was impending and that the creation of "some form of world government" would be necessary to control the technology. Hawking has also cautioned about the impact AI would have on middle-class jobs, and has even called for an outright ban on the development of AI agents for military use.

In both cases, it would seem, his warnings have largely gone unheeded: some would argue that intelligent machines are already taking over jobs, and several countries, including the U.S. and Russia, are pursuing some form of AI-powered weapon for military use.

A New Life Form

In recent years, AI development has become a deeply divisive topic. Some experts have made arguments similar to Hawking's, including SpaceX and Tesla CEO Elon Musk and Microsoft co-founder Bill Gates; both see AI development as a potential cause of humanity's demise. On the other hand, many other experts argue that such warnings amount to unnecessary fear-mongering, rooted in far-fetched scenarios of superintelligent AI takeover that they fear could distort public perception of AI.

As far as Hawking is concerned, the fears are valid. “If people design computer viruses, someone will design AI that improves and replicates itself,” Hawking said in the interview with WIRED. “This will be a new form of life that outperforms humans.”

Hawking, it seems, was referring to the development of AI that is smart enough to think as well as, or even better than, human beings, an event that has been dubbed the technological singularity. As for when that will happen (if ever), Hawking didn't offer a timetable. One could assume it would arrive at some point within the 100-year deadline Hawking has set for humanity's survival on Earth. Others, such as SoftBank CEO Masayoshi Son and Google director of engineering Ray Kurzweil, have placed the singularity even sooner: within the next 30 years.

We still have a long way to go before developing truly intelligent AI, and we don't yet know what the singularity would bring. Would it herald humankind's doom, or might it usher in a new era in which humans and machines co-exist? In either case, AI's potential to be used for both good and ill demands that we take the necessary precautions.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
