Why AI is failing the next generation of women

Biased algorithms risk betraying young women as they enter the job market Image: REUTERS/Stringer


Artificial Intelligence is either a silver bullet for every problem on the planet, or the guaranteed cause of the apocalypse, depending on whom you speak to.

The reality is likely to be far more mundane. AI is a tool, and like many technological breakthroughs before it, it will be used for good and for bad. But focusing on potential extreme scenarios doesn’t help with our present reality. AI is increasingly being used to influence the products we buy and the music and films we enjoy; to protect our money; and, controversially, to make hiring decisions and process criminal behaviour.

The major problem with AI is what’s known as ‘garbage in, garbage out’. We feed algorithms data that carries existing biases, and those biases then become self-fulfilling. In the case of recruitment, a firm that has historically hired male candidates will find that its AI rejects female candidates, as they don’t fit the mould of past successful applicants. In the case of crime or recidivism prediction, algorithms are picking up on historical and societal biases and further propagating them.
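To make the recruitment example concrete, here is a minimal sketch in Python. The hiring records are invented for illustration, and the "model" is deliberately crude: it learns nothing but the past hire rate for each group, which is an assumption for the sake of the example rather than how any real screening system works. Even so, it shows how a skewed history turns into a skewed recommendation.

```python
from collections import defaultdict

# Hypothetical historical records: (gender, was_hired).
# The numbers are made up to mimic a firm that mostly hired men.
HISTORY = (
    [("M", True)] * 40 + [("M", False)] * 10
    + [("F", True)] * 2 + [("F", False)] * 8
)


def learn_hire_rates(records):
    """Return the fraction of past applicants hired, per group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def recommend(rates, group, threshold=0.5):
    """Recommend a candidate only if their group's past hire rate
    meets the threshold. The candidate's own merit is never examined."""
    return rates.get(group, 0.0) >= threshold


rates = learn_hire_rates(HISTORY)
for group in ("M", "F"):
    print(group, round(rates[group], 2), recommend(rates, group))
```

Nothing in this code mentions prejudice; the bias arrives entirely through the data it is given.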

In some ways, it’s a chicken and egg problem. The Western world has been digitized for longer, so there are more records for AIs to parse. In addition, women have been under-represented in many walks of life, so there is less data, and what data exists is often of a lower quality. If we can’t feed AIs quality data that is free of bias, they will learn and continue the prejudices we seek to eliminate. Often the largest datasets available are also simply of such low quality that the results are unpredictable and unexpected, such as racist chatbots on Twitter.

None of this is to say that anything about AI is inherently bad. It has the potential to be a better decision-making tool than people. AI can’t be bribed, cheated or socially engineered. It doesn’t get tired, hungry or mad. So what’s the answer?

In the short term, we need to develop standards and testing for AI that enable us to identify bias and work against it. These need to be independently agreed upon and rigorously tested, as understanding what’s happening behind the scenes in machine learning is incredibly complex and difficult.
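As one illustration of what such a test might look like, the sketch below compares selection rates between two groups and flags the result when the ratio falls below 0.8. That threshold comes from the "four-fifths" rule of thumb used in US employment guidelines; it is one possible heuristic, not an agreed industry standard, and the decision data and function names here are hypothetical.

```python
def selection_rates(decisions):
    """Map each group to the share of its candidates who were selected.

    `decisions` is an iterable of (group, selected) pairs.
    """
    selected = {}
    total = {}
    for group, was_selected in decisions:
        total[group] = total.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / total[group] for group in total}


def disparate_impact_ratio(decisions, protected, reference):
    """Selection rate of the protected group divided by that of the
    reference group. A value of 1.0 means both are selected equally often."""
    rates = selection_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has a selection rate of zero")
    return rates[protected] / rates[reference]


# Hypothetical output of a screening algorithm: 3 of 10 women and
# 6 of 10 men were put forward for interview.
DECISIONS = (
    [("F", True)] * 3 + [("F", False)] * 7
    + [("M", True)] * 6 + [("M", False)] * 4
)

ratio = disparate_impact_ratio(DECISIONS, protected="F", reference="M")
flagged = ratio < 0.8  # four-fifths rule of thumb, assumed here
print(f"ratio={ratio:.2f} flagged={flagged}")
```

A check like this only looks at outcomes. It says nothing about why the gap exists, which is part of the reason independent and rigorous testing matters.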

We also need to think about the user-facing experience of AI and the inherent sexism of personal assistants and chatbots that are, by and large, presented as women. Whereas perhaps the first well-known automated assistant was male (HAL 9000), it seems that today we can only picture our automated assistants as subservient women. Often this is defended by consumer research demonstrating that consumers simply prefer a female voice, but how much of this is due to gender stereotypes around the types of role we use assistants for?

We have an opportunity to address these imbalances by driving a greater focus on inclusion, empowerment and equality. More women working in the technology industry, writing algorithms and feeding into product development will change how we imagine and develop technology, and how it sounds and looks.

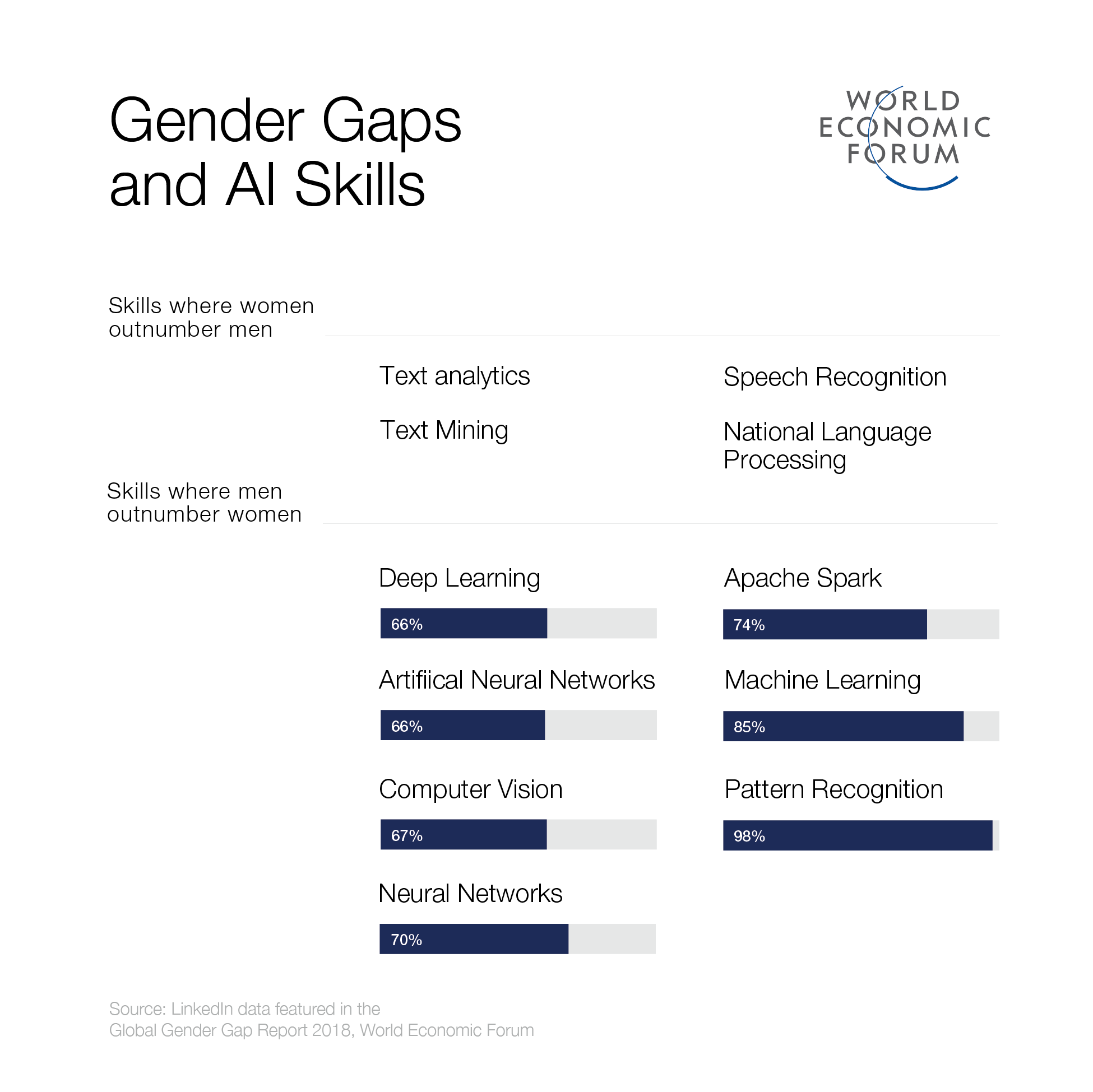

However, it’s a tough nut to crack. Technology and engineering are historically two of the most male-dominated workforces on the planet, and unconscious gender bias (as well as a reasonable amount of conscious bias) is rampant. We’ve seen that the presence of women on boards can bolster performance, generate new ideas and help companies weather times of crisis. Yet male candidates who have been CEOs at small firms are often prioritized over women who have overseen entire divisions at large companies, and who arguably have greater and more relevant experience.

Good work is being done to introduce girls to technology skills, encourage them to pursue further education in STEM subjects and apply for roles in male-dominated fields, but until top-down change can be implemented, progress will be slow. There is a real risk that without intervention today, this next generation of women will be denied the opportunities we are skilling and training them for, because biased algorithms will simply sideline them in favour of propagating the status quo.

I am a great believer in the power of programmes such as Girls in AI to ensure the next generation of workers have the skills needed to create impactful change. But we need industry-level agreement in order to clear the path for them. We need standards and testing, both in terms of the quality of data we rely on and in terms of detecting bias, and a commitment to re-examine the policies and incentives in place at technology companies, in order to get more women onboard and accelerate the rate of change.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
