Is AI trustworthy enough to help us fight COVID-19?

To use AI to respond to COVID-19, we first must ask the right questions. Image: REUTERS/Gleb Garanich

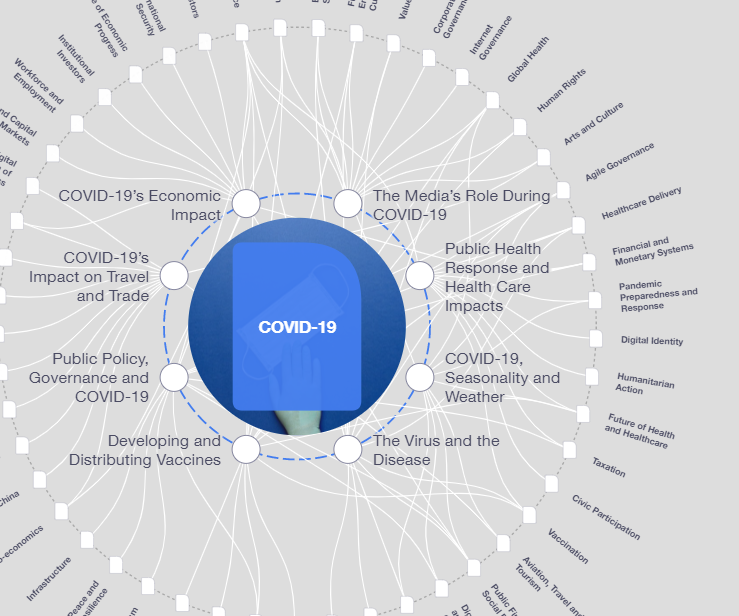


- Artificial intelligence (AI) could help us respond to the COVID-19 pandemic.

- But first, we must ask serious questions about reliability, safety and fairness.

- Here's how we can co-design an audit framework for AI systems.

Imagine a hypothetical scenario: a head of state meets with the founders of a health-tech startup that claims to have designed an artificial intelligence (AI)-powered application that can predict which COVID-19 patients are more at risk of developing severe respiratory diseases. The stakes are high from both a health and an economic perspective. Plus, time is short.

What should happen next?

As confirmed cases pass 1 million worldwide, such an application would be a welcome tool. It could support physicians in the bed-allocation decision-making process and improve the flow management of patients. When combined with mass testing at identified infection sites and strategic locations including airports and train stations, such a tool could ideally assist in preventing overcrowding in hospitals and help loosen social distancing rules.

No such tool is in use today, though a group of New York University researchers is working on something similar. Nevertheless, let’s continue with this thought experiment to grasp the governance challenges associated with artificial intelligence, and why auditing for accountability matters in times of crisis.

Due diligence starts with asking the right questions.

Any leader faced with a new technological solution like this hypothetical app must ask three main questions. First, is the app reliable? Second, is it safe? Finally, is it fair? In turn, these questions raise a myriad of supporting questions about how reliability, safety and fairness are defined and which metrics are used to assess them.
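To illustrate what one such assessment metric might look like, here is a minimal sketch in Python. It assumes fairness is measured as the gap in recall (the share of truly severe cases the app flags) between patient groups. That is only one of many possible definitions. The function names, the group labels and the sample records are all hypothetical and made up for illustration.

```python
from collections import defaultdict


def recall_by_group(records):
    """Return, per group, the share of truly severe cases that were flagged.

    `records` is an iterable of (group, actual_severe, predicted_severe)
    tuples. This input format is an assumption made for this sketch.
    """
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


def recall_gap(records):
    """Difference between the best-served and worst-served group."""
    rates = recall_by_group(records)
    return max(rates.values()) - min(rates.values())


# Made-up records, not real patient data.
sample = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, True),
]

rates = recall_by_group(sample)
gap = recall_gap(sample)
print(rates, gap)
```

A large gap would suggest the app misses severe cases more often in one group than in another. Whether that gap is acceptable, and whether recall is even the right measure, is exactly the kind of supporting question a leader would need answered.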

Ideally, the answers to these questions should make such an AI system auditable, meaning that a research committee, or any appointed competent third party, should be able to evaluate whether the system is trustworthy by examining the appropriate documentation.

Unfortunately, gaining access to – and reviewing – such documentation may be challenging in practice. The difficulties presented are largely related to deep learning (DL) – a subclass of machine learning – and the degree of maturity of the AI industry.

Machine learning is a powerful but limited statistical technique.

At its core, deep learning (DL) is a statistical technique for classifying patterns – based on sample data – using neural networks with multiple layers. By leveraging the exponential growth of human and machine-generated data, DL systems have achieved impressive breakthroughs in various domains, especially computer vision and speech recognition.

These results are remarkable in important respects but limited in others. For one thing, deep learning systems are notoriously opaque. Indeed, their logic cannot be easily interpreted using plain language. If we’re going to rely on automated systems to assist in making critical decisions, such as deciding which patient requires critical care, the systems must be understandable by both physicians and patients. In practice, this requires including their perspectives in the planning, design and development of such an app so as to enable them to be part of the decision-making process.

Furthermore, data is central to DL’s learning process. A DL system draws its knowledge primarily from the patterns identified in its training set: the initial body of clinical data, usually labeled, used to train its predictive model. This, in turn, creates privacy and data integrity challenges: keeping labeled clinical data accurate, well maintained and protected is quite difficult.

There is no standardized process for documenting AI services.

More fundamentally, what does “appropriate” documentation mean when evaluating AI? Is it information about a training dataset? A description of the model, including performance characteristics and potential pitfalls? What about a comprehensive risk assessment framework that incorporates bias-mitigation strategies?

Addressing this question is particularly difficult, partly because the AI community currently has no standardized process for documenting AI systems. Unlike the software industry, where companies have long-established processes designed to create transparency and accountability around their services (including design documents, performance tests and cybersecurity protocols), AI practitioners are still trying to figure out what these processes should be. This lack of standardized protocols is both a reminder and an expression of how young this field is.
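As a rough illustration of the candidate documentation items raised above, the sketch below shows how an auditor might record them and check which are still missing. It is a minimal Python sketch, not an established standard: the class name `AuditDossier`, its fields and the example values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AuditDossier:
    """Hypothetical record of the documentation an auditor might request."""
    system_name: str
    training_data_summary: str = ""   # where the data came from, how it was labeled
    model_description: str = ""       # what kind of model, what it predicts
    performance_notes: str = ""       # measured performance characteristics
    known_pitfalls: list = field(default_factory=list)
    bias_mitigations: list = field(default_factory=list)

    def missing_sections(self):
        """List the fields that are still empty, in declaration order."""
        return [name for name, value in vars(self).items() if not value]


# Example values are invented for illustration only.
dossier = AuditDossier(
    system_name="hypothetical-triage-app",
    model_description="Multi-layer neural network classifier",
    known_pitfalls=["Not evaluated on paediatric patients"],
)

missing = dossier.missing_sections()
print(missing)
```

A check like this only confirms that each section exists. Judging whether its content is adequate would still fall to the reviewing committee.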


The way forward

Considering the rapid adoption of AI in high-stakes domains, the question of “how do we ensure trustworthy use of AI through audit frameworks?” is too important to be left to the industry. On the other hand, the answers vary considerably depending on the use case and require a sound understanding of the system being introduced, so policymakers cannot address the question alone.

Only a genuine multistakeholder process could provide satisfactory answers. At the World Economic Forum, we’ve taken concrete steps toward this very goal: building a community of policymakers, civil society, industry actors and academia that is co-designing an audit framework for AI systems.

Only together can we build an AI for COVID-19 patients that is meaningfully auditable and helps the world withstand and recover from global risks.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
