Autonomous weapons are already here. How do we control how they are used?

Image: REUTERS/Rich-Joseph Facun



This article is part of the World Economic Forum's Geostrategy platform

The report, Mapping the Development of Autonomy in Weapon Systems, presents the key findings and recommendations from a one-year mapping study on the development of autonomy in weapon systems.

The report aims to help diplomats and members of civil society interested in the issue of lethal autonomous weapon systems (LAWS) to improve their understanding of the technological foundations of autonomy and to gain a sense of how fast, and in which direction, autonomy in weapon systems is progressing. It provides concrete examples that could be used to start delineating the points at which the advance of autonomy in weapons may raise technical, legal, operational and ethical concerns.

The report is also intended to act as a springboard for further investigation into the possible parameters for meaningful human control by setting out some of the lessons learned from how existing weapons with autonomous capabilities are used. In addition, it seeks to help diplomats and members of civil society to identify realistic options for the monitoring and regulation of the development of emerging technologies in the area of LAWS.

What are the technological foundations of autonomy?

The main findings are as follows:

1. Autonomy has many definitions and interpretations, but is generally understood to be the ability of a machine to perform an intended task without human intervention, through the interaction of its sensors and computer programming with the environment.

2. Autonomy relies on a diverse range of technologies, but primarily on software. The feasibility of autonomy depends on (a) the ability of software developers to formulate an intended task in terms of a mathematical problem and a solution; and (b) the possibility of mapping or modelling the operating environment in advance.

3. Autonomy can be created or improved by machine learning. The use of machine learning in weapon systems is still experimental, as it continues to pose fundamental problems regarding predictability.

What is the state of autonomy in weapon systems?

The report explores the state of autonomy in deployed weapon systems and weapon systems under development. The main findings are as follows:

1. Autonomy is already used to support various capabilities in weapon systems, including mobility, targeting, intelligence, interoperability and health management.

2. Automated target recognition (ATR) systems, the technology that enables weapon systems to acquire targets autonomously, have existed since the 1970s. ATR systems still have limited perceptual and decision-making intelligence. Their performance deteriorates rapidly as operating environments become more cluttered and weather conditions worsen.

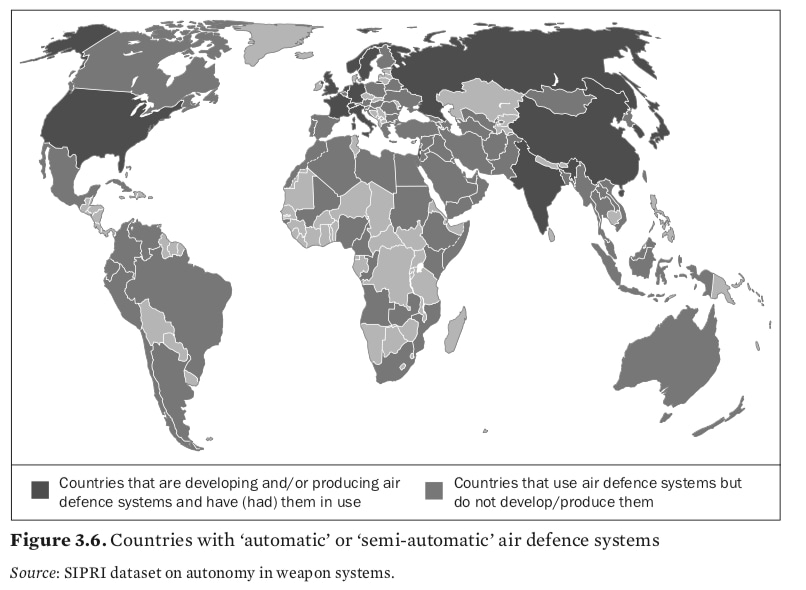

3. Existing weapon systems that can acquire and engage targets autonomously are mostly defensive systems. These are operated under human supervision and are intended to fire autonomously only in situations where the time of engagement is deemed too short for humans to be able to respond.

4. Loitering weapons are the only ‘offensive’ type of weapon system that is known to be capable of acquiring and engaging targets autonomously. The loitering time and geographical areas of deployment, as well as the category of targets they can attack, are determined in advance by humans.

What are the drivers of, and obstacles to, the development of autonomy in weapon systems?

The main drivers identified by the report are:

1. Strategic. The United States recently cited autonomy as a cornerstone of its strategic capability calculations and military modernization plans. This seems to have triggered reactions from other major military powers, notably Russia and China.

2. Operational. Military planners believe that autonomy enables weapon systems to achieve greater speed, accuracy, persistence, reach and coordination on the battlefield.

3. Economic. Autonomy is believed to provide opportunities for reducing the operating costs of weapon systems, specifically through a more efficient use of manpower.

The main obstacles are:

1. Technological. Autonomous systems need to be more adaptive to operate safely and reliably in complex, dynamic and adversarial environments; new validation and verification procedures must be developed for systems that are adaptive or capable of learning.

2. Institutional resistance. Military personnel often lack trust in the safety and reliability of autonomous systems; some military professionals see the development of certain autonomous capabilities as a direct threat to their professional ethos or incompatible with the operational paradigms they are used to.

3. Legal. International law includes a number of obligations that restrict the use of autonomous targeting capabilities. It also requires military command to maintain, in most circumstances, some form of human control or oversight over the weapon system’s behaviour.

4. Normative. There are increasing normative pressures from civil society against the use of autonomy for targeting decisions, which makes the development of autonomous weapon systems a potentially politically sensitive issue for militaries and governments.

5. Economic. There are limits to what can be afforded by national armed forces, and the defence acquisition systems in most arms-producing countries remain ill-suited to the development of autonomy.

Where are the relevant innovations taking place?

The report explores the innovation ecosystems driving the advance of autonomy. It concludes:

1. At the basic science and technology level, advances in machine autonomy derive primarily from research efforts in three disciplines: artificial intelligence (AI), robotics and control theory.

2. The United States is the country that has demonstrated the most visible, articulated and perhaps successful military research and development (R&D) efforts on autonomy. China and the majority of the nine other largest arms-producing countries have identified AI and robotics as important R&D areas. Several of these countries are tentatively following in the US’s footsteps and looking to conduct R&D projects focused on autonomy.

3. Civilian industry leads innovation in autonomous technologies. The most influential players are major information technology companies such as Alphabet (Google), Amazon and Baidu, and large automotive manufacturers (e.g. Toyota) that have moved into the self-driving car business.

4. Traditional arms producers are certainly involved in the development of autonomous technologies, but the resources that these companies can allocate to R&D are far smaller than those mobilized by large commercial entities in the civilian sector. However, the role of defence companies remains crucial, because commercial autonomous technologies can rarely be adopted by the military without modifications and companies in the civilian sector often have little interest in pursuing military contracts.

Recommendations for future discussions

The report concludes with eight recommendations that aim to help the newly formed Group of Governmental Experts on LAWS at the United Nations to find a constructive basis for discussions and potentially achieve tangible progress on some of the key aspects under debate.

1. Discuss the development of ‘autonomy in weapon systems’ rather than autonomous weapons or LAWS as a general category.

2. Shift the focus away from ‘full’ autonomy and explore instead how autonomy transforms human control.

3. Open the scope of investigation beyond the issue of targeting to take into consideration the use of autonomy for collaborative operations (e.g. swarming) and intelligence processing.

4. Demystify the current advances in machine learning and their possible implications for the control of autonomy.

5. Use case studies to reconnect the discussion on legality, ethics and meaningful human control with the reality of weapon systems development and weapon use.

6. Facilitate an exchange of experience with the civilian sector, especially the aerospace, automotive and civilian robotics industries, on definitions of autonomy, human control, and validation and verification of autonomous systems.

7. Investigate options to ensure that future efforts to monitor and potentially control the development of lethal applications of autonomy will not inhibit civilian innovation.

8. Investigate the options for preventing the risk of weaponization of civilian technologies by non-state actors.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

