4 ways to future-proof against deepfakes in 2024 and beyond

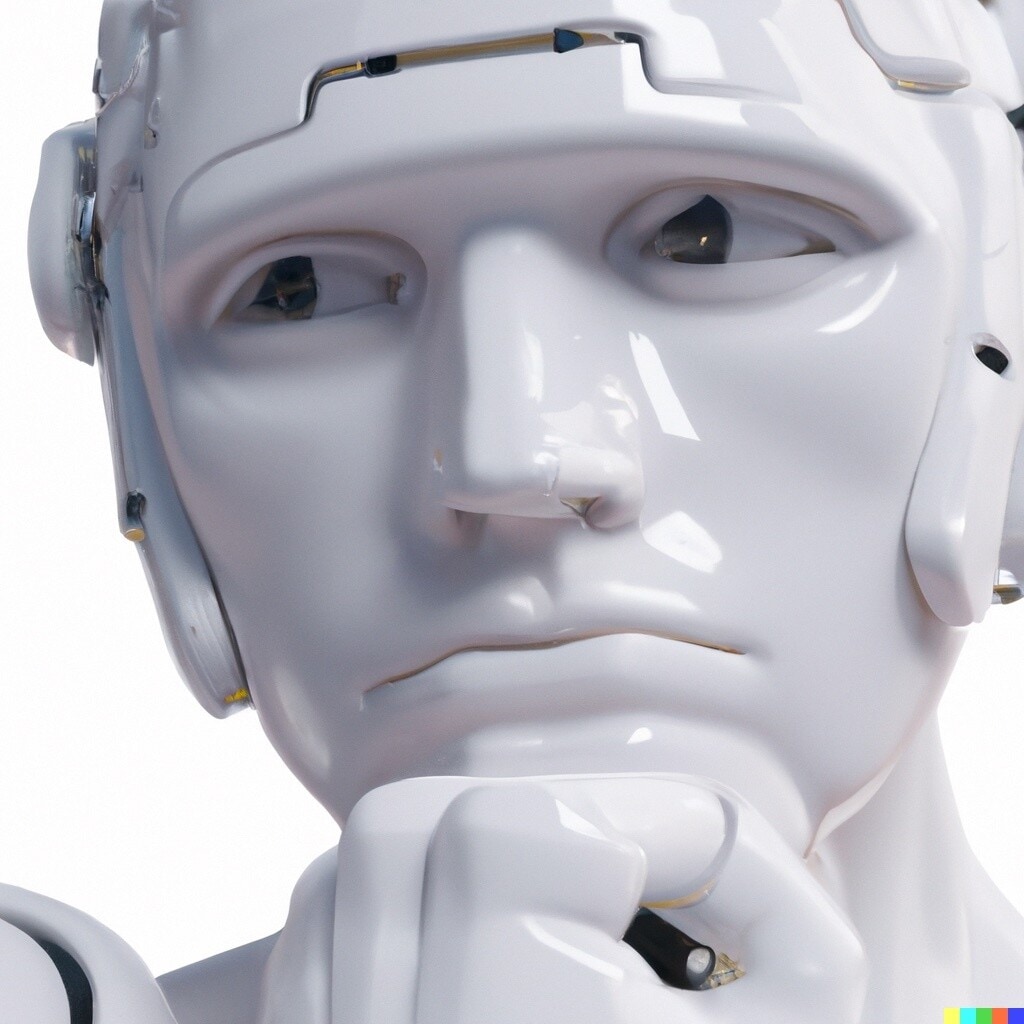

Distinguishing between the authentic and the synthetic will be a key challenge in the battle against deepfakes. Image: Getty Images/iStockphoto

- Disinformation is ranked as a top global risk for 2024, with deepfakes as one of the most worrying uses of AI.

- Deepfakes used by agenda-driven, real-time multimodal AI chatbots and avatars will allow for highly personalised and effective types of manipulation.

- A zero-trust mindset will become an essential tool to distinguish between what is authentic and what is synthetic in increasingly immersive online environments.

Developments in generative artificial intelligence (genAI) and Large Language Models (LLMs) have led to the emergence of deepfakes. The term “deepfake” is based on the use of deep learning (DL) to create new but fake versions of existing images, videos or audio material. These can look so realistic that humans find it very hard to spot them as fake.

Facial manipulation methods are particularly interesting because faces are such an important element of human communication. These AI-generated pieces of media can convincingly depict real (and often influential) individuals saying or doing things they never did, resulting in misleading content that can profoundly influence public opinion.

This technology offers great benefits in the entertainment industry. When abused for political manipulation, however, the capability of deepfakes to fabricate convincing disinformation could result in voter abstention, swayed elections, societal polarization, discredited public figures, or even geopolitical tensions.

The World Economic Forum has ranked disinformation as one of the top risks in 2024. And deepfakes have been ranked as one of the most worrying uses of AI.

With the increased accessibility of genAI tools, today’s deepfake creators do not need technical know-how or deep pockets to generate hyper-realistic synthetic video, audio or image versions of real people. For example, the researcher behind CounterCloud used widely available AI tools to run a fully automated disinformation research project at a cost of less than $400 per month, illustrating how cheap and easy it has become to create disinformation campaigns at scale.

The low cost, ease and scale exacerbate the existing disinformation problem, particularly when deepfakes are used for political manipulation and societal polarisation. Between 8 December 2023 and 8 January 2024, more than 100 deepfake video advertisements impersonating the British Prime Minister Rishi Sunak were identified on Meta, many of which were designed to elicit emotional responses, for example by using language such as “people are outraged”.

The potential of deepfake-driven disinformation to disrupt democratic processes, tarnish reputations and incite public unrest should not be underestimated, particularly given the increasing integration of digital media in political campaigns, news broadcasting and social media platforms.

Future challenges, such as deepfakes used by agenda-driven, real-time multimodal AI chatbots, will allow for highly personalised and effective types of manipulation, exacerbating the risks we face today. The decentralised nature of the internet, variations in international privacy laws, and the constant evolution of AI mean that it is very difficult, if not impossible, to impede the use of malicious deepfakes.

Countermeasures

1. Technology

Multiple technology-based detection systems already exist today. Using machine learning, neural networks and forensic analysis, these systems can analyze digital content for inconsistencies typically associated with deepfakes. Forensic methods that examine facial manipulation can be used to verify the authenticity of a piece of content. Creating and maintaining automated detection tools that perform inline, real-time analysis remains a challenge. Given time and wide adoption, however, AI-based detection measures will have a beneficial impact on combatting deepfakes.

2. Policy efforts

According to Charmaine Ng, member of the WEF Global Future Council on Technology Policy: “We need international and multistakeholder efforts that explore actionable, workable and implementable solutions to the global problem of deepfakes. In Europe, through the proposed AI Act, and in the US through the Executive Order on AI, governments are attempting to introduce a level of accountability and trust in the AI value system by signaling to other users the authenticity or otherwise of the content.

They are requiring online platforms to detect and label content generated by AI, and for developers of genAI to build safeguards that prevent malicious actors from using the technology to create deepfakes. Work is already underway to achieve international consensus on responsible AI, and demarcate clear redlines, and we need to build on this momentum.”

Requiring genAI and LLM providers to embed traceability and watermarks into the deepfake creation process before distribution could provide a level of accountability and signal whether content is synthetic or not. However, malicious actors may circumvent this by using jailbroken versions or creating their own non-compliant tools. International consensus on ethical standards, on definitions of acceptable use, and on classifications of what constitutes a malicious deepfake is needed to create a unified front against the misuse of such technology.
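The traceability idea can be illustrated with a minimal sketch. It is not a real watermarking scheme and not any provider's actual method: it assumes a hypothetical provider that attaches a keyed provenance tag to each generated file, so a verifier holding the same key can check whether the content is unchanged since it was tagged. The key, the function names `tag_content` and `verify_tag`, and the sample bytes are all illustrative assumptions.

```python
import hashlib
import hmac

# Hypothetical signing key held by the genAI provider (assumption for illustration).
PROVIDER_KEY = b"demo-provider-secret"


def tag_content(content: bytes, key: bytes = PROVIDER_KEY) -> str:
    """Return a hex provenance tag that binds the content to the provider's key."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_tag(content: bytes, tag: str, key: bytes = PROVIDER_KEY) -> bool:
    """Return True only if the tag matches the content; any edit breaks the match."""
    expected = hmac.new(key, content, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


# Placeholder bytes standing in for an AI-generated media file.
synthetic_clip = b"bytes of an AI-generated video"
tag = tag_content(synthetic_clip)

print(verify_tag(synthetic_clip, tag))              # True
print(verify_tag(synthetic_clip + b" edited", tag)) # False
```

A deployed scheme would more likely use public-key signatures, so that anyone can verify a tag without holding the provider's secret, and would embed the signal in the media itself rather than alongside it. The sketch also shows the limit noted above: a tool that never calls `tag_content` produces untagged content, so a missing tag cannot by itself prove that content is authentic.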

3. Public awareness

Public awareness and media literacy are pivotal countermeasures against AI-empowered social engineering and manipulation attacks. Starting from early education, individuals should be equipped with the skills to distinguish real from fabricated content and to understand both how deepfakes are distributed and the psychological and social engineering tactics used by malicious actors. Media literacy programmes must prioritize critical thinking and equip people with the tools to verify the information they consume. Research has shown that media literacy is a powerful skill that has the potential to protect society against AI-powered disinformation by reducing a person’s willingness to share deepfakes.

4. Zero-trust mindset

In cybersecurity, the “zero-trust” approach means not trusting anything by default and instead verifying everything. When applied to humans consuming information online, it calls for a healthy dose of scepticism and constant verification. This mindset aligns with mindfulness practices that encourage individuals to pause before reacting to emotionally triggering content and to engage with digital content intentionally and thoughtfully. Fostering a zero-trust culture through cybersecurity mindfulness programs (CMP) helps equip users to deal with deepfakes and other AI-powered cyberthreats that are difficult to defend against with technology alone.
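The "deny by default, verify everything" rule can be sketched in a few lines. This is only an illustration of the principle, not a description of any real product or programme: the three checks and the names `Checks` and `treat_as_authentic` are hypothetical, chosen to show that content is treated as authentic only when every verification has explicitly passed.

```python
from dataclasses import dataclass


@dataclass
class Checks:
    """Hypothetical verification results; every check starts as not passed."""
    source_confirmed: bool = False            # e.g. confirmed via an official channel
    provenance_valid: bool = False            # e.g. a provenance tag was verified
    independently_corroborated: bool = False  # e.g. reported by a second, unrelated source


def treat_as_authentic(checks: Checks) -> bool:
    """Zero-trust rule: trust only if all checks passed; otherwise withhold trust."""
    return all(
        (
            checks.source_confirmed,
            checks.provenance_valid,
            checks.independently_corroborated,
        )
    )


print(treat_as_authentic(Checks()))                       # False
print(treat_as_authentic(Checks(True, True, False)))      # False
print(treat_as_authentic(Checks(True, True, True)))       # True
```

The design choice worth noting is the default: a check that was never performed counts the same as a check that failed, which mirrors the habit of pausing and verifying before reacting to or sharing content.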

As we increasingly live our lives online and with the metaverse imminently becoming a reality, a zero-trust mindset becomes even more pertinent. In these immersive environments, distinguishing between what is real and what is synthetic will be critical.

There is no silver bullet for mitigating the threat of deepfakes in digital spaces. Rather, a multilayered approach that combines technological and regulatory means with heightened public awareness is necessary. This requires global collaboration among nations, organizations and civil society, as well as significant political will.

Meanwhile, the zero-trust mindset will encourage a proactive stance towards cybersecurity, compelling both individuals and organizations to remain ever-vigilant in the face of digital deception, as the boundaries between the physical and virtual worlds continue to blur.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
