AI isn’t dangerous, but human bias is

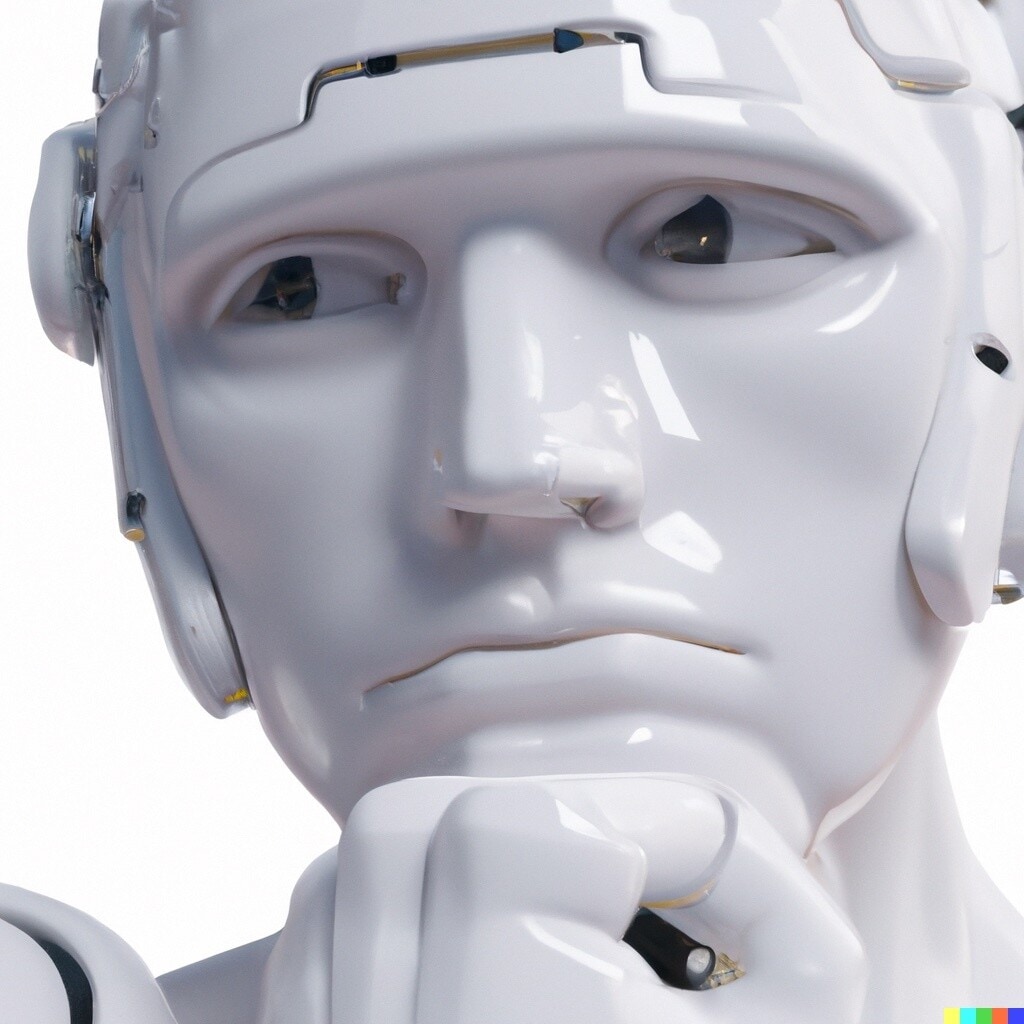

AI holds a mirror up to humanity; we must ensure it shows our best face. Image: REUTERS/Bobby Yip

AI is rapidly becoming part of the fabric of our daily lives as it moves out of academia and research labs and into the real world.

I'm not concerned about AI superintelligence “going rogue” and challenging the survival of the human race – that's science fiction and unsupported by any scientific research today. But I do believe we have to think about any unintended consequences of using this technology.

Kathy Baxter, Salesforce’s ethical AI practice architect, put the problem well. Pointing out that AI is not sentient but merely a tool and therefore morally neutral, she reminded us that its use depends on the criteria we humans apply to its development. “While AI has the potential to do tremendous good, it can also have the potential for unknowingly harming individuals.”

Unmanaged AI is a mirror for human bias

One way that AI can cause harm is when algorithms reflect the human biases embedded in the datasets that organizations collect. The effects of these biases can compound in the AI era, as the algorithms themselves continue to “learn” from the data.

Let’s imagine, for example, that a bank wants to predict whether it should give someone a loan. Let’s also imagine that in the past, this particular bank hasn’t given as many loans to women or people from certain minorities.

These patterns will be present in that bank’s dataset – which could lead an AI algorithm to conclude that women or people from minority groups are more likely to be credit risks and should therefore not be given loans.

In other words, the lack of data on loans to certain people in the past could have an impact on how the bank’s AI program will treat their loan applications in the future. The system could pick up a bias and amplify it – or at the very least, perpetuate it.
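The loan scenario above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the records in `HISTORY` are invented, the group labels are hypothetical, and `naive_model` is a deliberately crude stand-in for a real learning algorithm, not how any actual bank scores applicants.

```python
from collections import defaultdict

# Hypothetical historical loan records as (group, approved) pairs.
# The data is invented purely for illustration.
HISTORY = [
    ("men", True), ("men", True), ("men", True), ("men", False),
    ("women", True), ("women", False), ("women", False), ("women", False),
]


def approval_rates(records):
    """Return the fraction of approved applications for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def naive_model(records, threshold=0.5):
    """'Train' a deliberately naive model: approve a group only if its
    historical approval rate meets the threshold (an assumed cut-off)."""
    rates = approval_rates(records)
    return {group: rate >= threshold for group, rate in rates.items()}


rates = approval_rates(HISTORY)
decisions = naive_model(HISTORY)
print(rates)
print(decisions)
```

Nothing in this sketch looks at an applicant's ability to repay. The model simply turns a past disparity in approvals into a future rule, which is the perpetuation effect described above.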

Of course, AI algorithms could also be gamed by explicit prejudice, where someone curates data in an AI system in a way that excludes, say, women of colour from being considered for loans.

Either way, AI is only as good as the data – specifically, the “training data” – we give it. All this means it’s vital for anyone involved with training AI programs to consider just how representative any training data they use actually is.

As Kathy put it, if we simply pluck data from the internet to train AI programs, there’s a good chance that we will “magnify the stereotypes, the biases, and the false information that already exist”.

Embedding diversity and removing bias

So how do we manage the threats of biased AI? It starts with humans proactively identifying and managing any such bias – which includes training AI systems to identify it.

AI bias isn’t the result of the technology being flawed. Algorithms don’t become biased on their own – they learn that from us. So we have to take responsibility for helping to avoid any negative effects spawned by the AI systems that we’ve created. For example, a bank could exclude gender and race from its dataset when setting up an AI system to score the viability of applicants for loans.

At the same time, companies also need to be aware of hidden biases. Say our imaginary bank removed gender and race from its AI model, but left in zip codes – often a proxy for race in the US. Such a move could still lead to the bank’s algorithms producing biased predictions that discriminated against historically underprivileged neighborhoods.
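One rough way to look for such a hidden proxy is to ask how well the remaining field predicts the attribute that was removed. The sketch below assumes invented data in `APPLICANTS`, made-up zip codes and group labels, and a hypothetical helper named `proxy_strength`; a real audit would use proper statistical tests on far more data.

```python
from collections import Counter, defaultdict

# Hypothetical applicants as (zip_code, group) pairs.
# Zip codes and group labels are invented for illustration.
APPLICANTS = [
    ("10001", "A"), ("10001", "A"), ("10001", "A"), ("10001", "B"),
    ("20002", "B"), ("20002", "B"), ("20002", "B"), ("20002", "A"),
]


def baseline_accuracy(records):
    """Accuracy of always guessing the single most common group."""
    counts = Counter(group for _, group in records)
    return counts.most_common(1)[0][1] / len(records)


def proxy_strength(records):
    """Accuracy of guessing each person's group from their zip code alone,
    by always picking the most common group within that zip code."""
    by_zip = defaultdict(Counter)
    for zip_code, group in records:
        by_zip[zip_code][group] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_zip.values())
    return correct / len(records)


baseline = baseline_accuracy(APPLICANTS)
strength = proxy_strength(APPLICANTS)
print(f"baseline={baseline:.2f}, zip-code guess={strength:.2f}")
```

If the zip-code guess is noticeably more accurate than the baseline, the zip code carries information about the removed attribute, and a model trained on it may still discriminate indirectly.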

To prevent AI systems from allowing bias to influence recommendations in any way at all, we need to rely on human understanding, and not just technology.

Core to this is making sure that the people who are building AI systems reflect a diverse set of perspectives. A homogeneous group may only hear opinions that match their own – they’re limited to what they know, in other words, and are less likely to spot where bias might be present.

With that diversity of perspectives to guide them, organizations can curate bias out of their training data to mitigate negative effects. This can also help to make AI systems more transparent and less inscrutable, which in turn makes it easier for companies to check for any errors that might be taking place.

Taking ethical AI to the next level

The good news is that I’m seeing efforts to tackle bias currently moving in tandem with a broader global debate about AI ethics. That’s being led by groups such as the Partnership on AI, a consortium of technology and education leaders from varied fields, including Salesforce.

These groups are joining forces to focus on the responsible use and development of AI technologies. They’re doing so by advancing the understanding of AI, establishing best practice, and harnessing AI to contribute to solutions for some of humanity’s most challenging problems.

Taking these debates to a wider stage is important because any process that involves the responsible use of AI should not be seen as simply another set of tasks. Instead, we need to fundamentally change people’s thinking and behaviour, and we need to do it now.

To put it another way, if AI does hold a mirror to humanity, it’s up to us to be accountable, now, and ensure what’s reflected shows our best face.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
