AI in healthcare: Buckle up for change, but read this before takeoff

AI in healthcare holds great potential. Image: Philips


- There’s both excitement and uncertainty about what artificial intelligence (AI) could ultimately mean for healthcare and people everywhere.

- We should embrace AI and unlock the immediate benefits but take considered steps to limit the risks.

- An industry-wide framework can help responsibly deliver on the full promise of AI, with people at the centre, while mitigating risks.

Imagine you’re tasked with urgently improving healthcare for people everywhere, and artificial intelligence (AI) promises to make a difference on a scale not seen in our lifetime. You have three options:

1. Try to halt the development of AI until the risks and benefits are fully understood.

2. Go blazing into the unknown, deploying AI at every turn and dealing with issues as they arise.

3. Fully embrace AI, but take considered steps to limit the risks while unlocking the immediate benefits.

Which option would you choose?

The options we have at hand are more nuanced, of course. But broadly speaking, these are the approaches we often hear when listening to different voices on AI today. The mixed views stem partly from the excitement and uncertainty about what AI could ultimately mean for healthcare and people everywhere. This excitement and uncertainty have only grown in the last 12 months, as AI developments have seemingly accelerated from week to week.

That’s why, as co-chairs of the US National Academy of Medicine’s initiative to develop a Code of Conduct for AI in health, healthcare and biomedical science, and as strong proponents of option three, we’re eager to build a greater understanding of how we can all best navigate the road ahead. This includes embracing the power of AI in a responsible, equitable and ethical manner.

The best way to start, in our view, is to address both the opportunities and the risks.

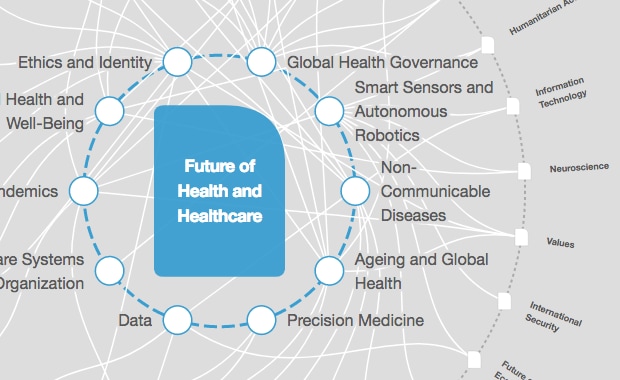

What is the World Economic Forum doing to improve healthcare systems?

The challenges facing healthcare and how AI can help

We are doing amazing things in health, healthcare and biomedical science to help people live better, healthier lives. Thanks to data with rich, actionable insights, we can often offer earlier diagnoses, better treatments and even cures for patients.

At the same time, many healthcare organizations are struggling to consistently deliver high-quality care. There are more patients to look after and significant pockets of staff shortages, with doctors and nurses burdened by growing clerical demands and complexity. Costs are rising too. Although many of us have received excellent care and treatment, others struggle to access the care they need. This is especially true for people in under-resourced areas of the world, but also, increasingly, for people living in wealthy urban areas. We believe this crisis will only worsen unless we urgently deploy solutions that make a difference.

AI can help, and it already has. Generative AI tools and chatbots, for example, are cutting the time doctors and nurses spend on paperwork. Every day, we learn more about the potential of AI to improve healthcare. One recent study by the UK’s Royal Marsden NHS Foundation Trust and the Institute of Cancer Research showed that AI was “almost twice as accurate as a biopsy at judging the aggressiveness of some cancers.” More accurate grading can translate into better-targeted treatment and, ultimately, more lives saved. To learn about other developments on the horizon, consider some of the many predictions for what’s in store for 2024, from accelerating drug discovery to boosting personalized medicine and patient engagement.

These developments are incredibly promising. We truly believe AI will revolutionize healthcare delivery, generate groundbreaking advances in health research and contribute to better health for all. But we must get it right.

Our collective responsibility to get this right

For all the excitement around AI in healthcare, the concerns are legitimate and must be addressed. AI algorithms, for example, can exacerbate biases in healthcare. This can happen when the data used to train algorithms reflect and amplify the inequalities existing in healthcare and society today. Another major responsibility is protecting patient data, which is sacrosanct to the doctor-patient relationship.

In light of these concerns, the European Union, the United States, the United Kingdom, China and others are racing to respond, but “the technology is evolving more rapidly than their policies.”

We can also point to examples where organizations have taken the initiative to help ensure AI is applied accurately, safely, reliably, equitably and ethically – including in our own organizations. Mayo Clinic Platform, Validate, for example, provides a bias, specificity and sensitivity report for AI models so developers can ensure their model is accurate and unbiased and clinicians can trust that an independent third-party source evaluated the models. Philips’ AI principles guide the ethical use of AI, focusing on well-being, oversight, robustness, fairness and transparency. Likewise, Google’s AI principles are built on the company’s “commitment to developing technology responsibly and work to establish specific application areas we will not pursue.”

In addition to these efforts, we are working towards an industry-wide framework. Given the increasing use of AI in healthcare and biomedical science – and the concerns about risks and potential harm – careful review, application and continual development of best practices are essential to maximize benefits and mitigate harm. The challenges are complex and impact a range of stakeholders, including central agencies; public and private national, state and local organizations and professional societies; and, ultimately, the individuals whose data provide the basis of the AI models and who are subject to their impact.

That’s why the US National Academy of Medicine’s AI Code of Conduct initiative aims to provide a framework to ensure that AI algorithms and their application in health, healthcare and biomedical science perform accurately, safely, reliably, equitably and ethically in the service of better health for all. This is about designing a framework to responsibly deliver on the full promise of AI, with people at the centre.

The goals are to:

(1) Harmonize the many sets of AI principles, frameworks and blueprints for healthcare and biomedical science, and identify and fill the gaps to create a best-practice AI Code of Conduct with ‘guideline interoperability.’

(2) Align the field in advancing broad adoption and embedding of the harmonized AI Code of Conduct.

(3) Identify the roles and responsibilities of each stakeholder at each stage of the AI lifecycle.

(4) Describe the architecture needed to support responsible AI in healthcare.

(5) Identify ways to increase the speed of learning about how to govern AI in healthcare in service of a learning health system.

To achieve these goals and realize the full benefits of AI in healthcare while mitigating risks, it will take cross-sector collaboration and coalition building every step of the way – because we have a collective industry-wide responsibility to get this right.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
