How to create a trustworthy COVID-19 tracking technology

Are privacy risks worth a quicker end to lockdowns? Image: REUTERS/Antonio Parrinello

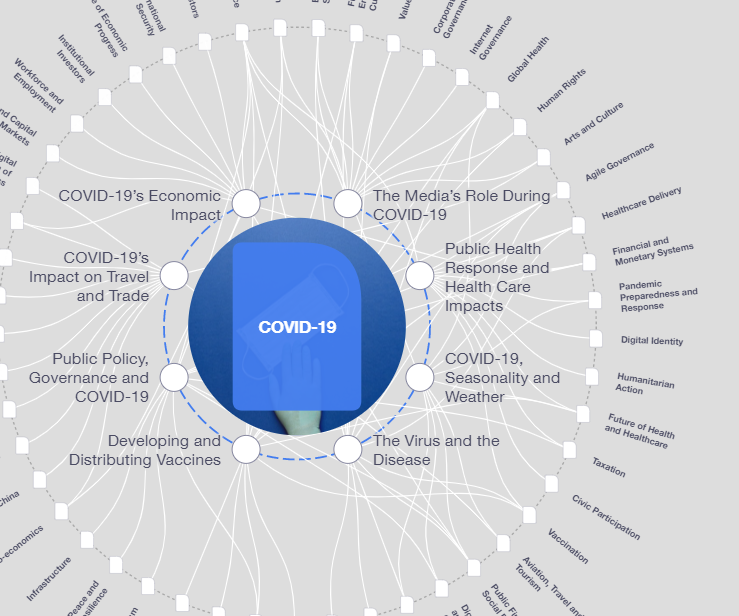

- Tracking technologies could help monitor the spread of COVID-19.

- Yet they also raise questions about privacy and data management.

- Here are four ways to ensure the responsible use of these technologies, including ensuring independent oversight.

In the fight against COVID-19, tech governance is at a clear crossroads. Either it will become part of the recovery debate, leading to public-private global cooperation for the trustworthy use of technology, or we might see a dramatic surrender of public liberties in the name of using surveillance technologies in the continuing battle against the coronavirus and potential novel viruses.

Many tech companies and at least 30 governments have started proposing or deploying tracking tools. Privacy International has been collecting these initiatives, showing the wide range of approaches in terms of privacy and respect for freedoms. The lack of a shared governance framework is manifest.

Tracking technologies using smartphones could help monitor the evolution of the virus among the population and quickly prevent new clusters of confirmed cases from building up, helping countries emerge from lockdowns. But they also bring daunting privacy risks associated with collecting health data from citizens and tracking their movements. These tools may lead to an unprecedented infringement of our freedoms, unless governments and tech companies turn to robust governance frameworks and rely on true cooperation.

“There may be no turning back if Pandora’s box is opened,” noted Irakli Beridze, Head of the Centre for Artificial Intelligence and Robotics for the United Nations Interregional Crime and Justice Research Institute (UNICRI), in a recent article.

How can we navigate the gray zone of ending lockdowns while avoiding any infringement of freedom?

The World Economic Forum’s Centre for the Fourth Industrial Revolution recently launched a pilot project in France to test a governance framework for the responsible use of facial recognition. This work suggests four lessons to ensure the responsible use of technologies in a post-COVID-19 world.

1. Lean on a multistakeholder approach

COVID-19 allows tech companies to take a new lead on health or security infrastructures, domains historically occupied by governments. A multistakeholder approach could help balance and coordinate the roles of the public and the private sectors and put the rights of the citizen first – at the local, regional and global levels.

2. Clearly identify potential limitations

It is critical to agree on what the objectives and limits of tracking technologies should be. For example, the Berkman Klein Center of Harvard University has built a tool to list many of the initiatives to ensure ethical approaches to AI. Policymakers and tech companies must examine nine main principles, asking questions that will help inform essential requirements for any tools.

- Bias and discrimination: Is there any bias or risk of discrimination in the tool, and if so, how can it be mitigated?

- Proportionality: What are the trade-offs brought by the tool?

- Privacy: Does the tool respect “privacy by design” rules that ensure privacy is a priority?

- Accountability: What kind of accountability model is in place for external audits?

- Risk prevention: What is the risk-mitigation process in case something goes wrong?

- Performance: What is the performance and accuracy of the system and how is it assessed?

- Right to information: Are users well informed about data sharing?

- Consent: How do you ensure that users provide informed, explicit and free consent?

- Accessibility: Is the tool accessible to anyone, including people with disabilities?

3. Ensure continuous internal evaluation

Once the principles are established, the next step is to ensure that the system meets the requirements. One way to do so is to draft a list of questions to guide the product team to internally check its compliance. This questionnaire helps bridge the gap between political principles and technical requirements.
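
As an illustration only, such a questionnaire could be kept as a small structured checklist that the product team fills in and re-checks at each release. The sketch below is a hypothetical Python example, not a format proposed by any of the organizations named in this article: the `ChecklistItem` fields, the `unresolved` helper, the sample answer and the evidence label are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    """One question the product team must answer (hypothetical structure)."""
    principle: str
    question: str
    answer: str = ""    # free-text answer from the product team
    evidence: str = ""  # assumed pointer to supporting documentation

    def is_resolved(self) -> bool:
        # An item counts as resolved only if it has both an answer and evidence.
        return bool(self.answer.strip()) and bool(self.evidence.strip())


def unresolved(items):
    """Return the principles that still lack an answer or evidence."""
    return [item.principle for item in items if not item.is_resolved()]


# Questions taken from three of the principles listed in this article.
checklist = [
    ChecklistItem("Privacy", "Does the tool respect privacy-by-design rules?"),
    ChecklistItem("Consent", "How do users provide informed, explicit and free consent?"),
    ChecklistItem("Accountability", "What accountability model is in place for external audits?"),
]

# Hypothetical answer, for illustration only.
checklist[0].answer = "Location data stays on the device."
checklist[0].evidence = "design-review-notes"

print(unresolved(checklist))  # prints ['Consent', 'Accountability']
```

Requiring evidence alongside each answer is one possible design choice: it gives an external reviewer something concrete to verify rather than a simple yes or no.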

For example, IBM Research has a framework called “FactSheets” to increase trust in AI services, Google has recently proposed a framework for internal algorithmic auditing, and Microsoft has a list of AI Principles and the tools to apply them internally. These promising projects could serve as best practices for other tech companies.

4. Identify an independent third party for oversight

In addition to the internal questionnaire, there must also be external oversight of systems through an independent third party. Trust depends on the ability of this independent body to have access to the system to conduct verifications.

Recent history shows that the need for audits or audit reforms surges in the aftermath of crises. For example, the 2002 Sarbanes-Oxley Act, which created new regulations to respond to fraud in financial services, emerged after the failure of Enron. In the aerospace industry, the recent Boeing 737 MAX accidents may lead to a new series of stronger controls.

As of now, tech companies have largely avoided any external audit, even when failures to protect citizens have been reported and have led to scandals. For example, consider the case of Clearview AI, a facial recognition tool built on an unprecedented database of more than three billion images scraped from the Internet, including social media applications and millions of other websites. This startup has not only raised privacy concerns but has also reportedly suffered a recent data breach. What is true for traditional industries should be true for the tech sector as well.

We know that tech governance is key to mitigating the potential risks brought by the Fourth Industrial Revolution. The COVID-19 crisis is its moment of truth. We must act now to build frameworks that can help ensure any tracking tools not only aid in the recovery but also protect the rights of users.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.