Why we need to make safety the product to build better bots

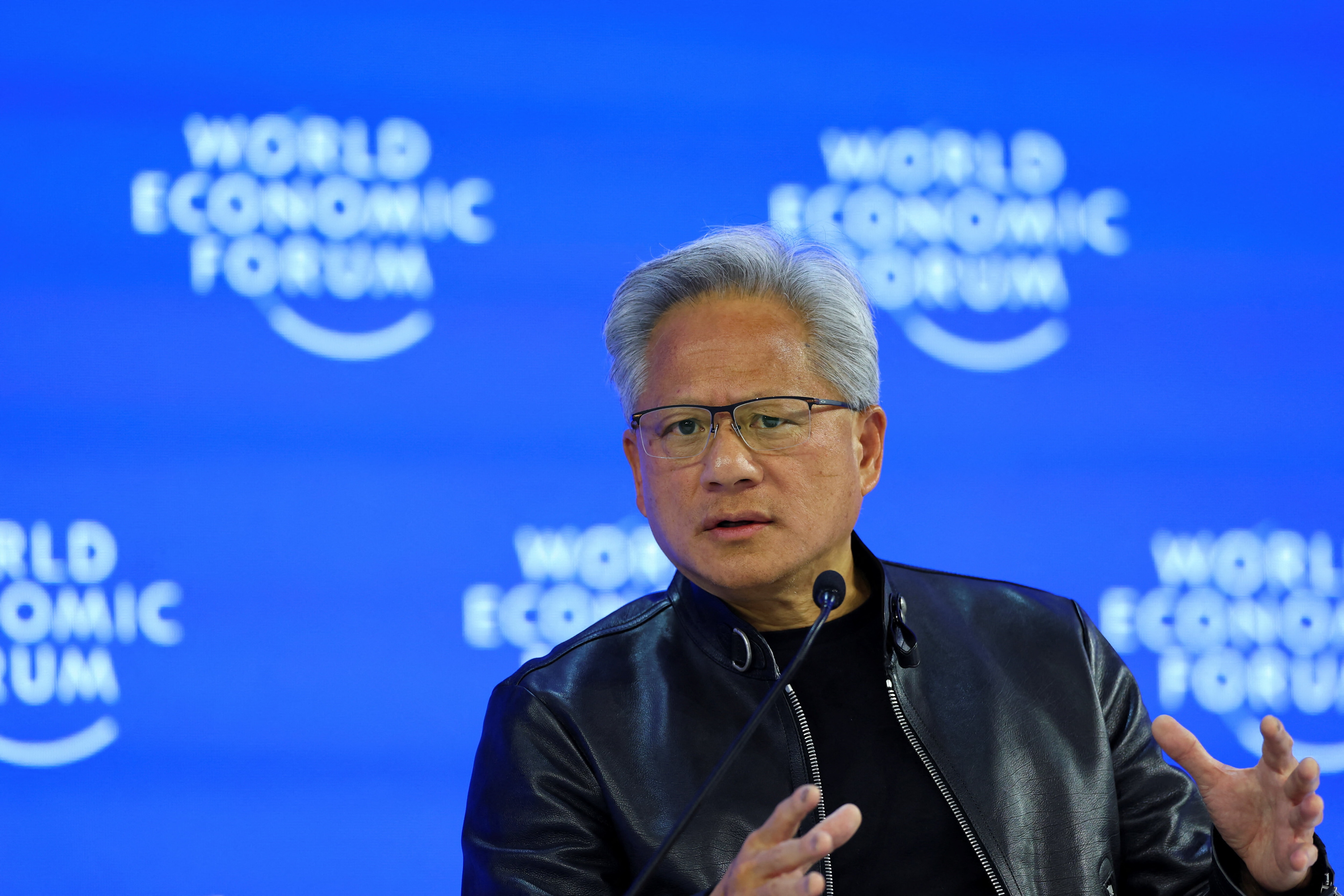

The path to safe, trustworthy and widely deployed bots requires multistakeholder collaboration. Image: DC Studio/Freepik

- The AI safety debate often overlooks risks to end users by focusing too much on foundational models and too little on deployed products.

- The path to safe GenAI consumer products requires the fields of responsible AI and digital trust and safety to learn from each other.

- By applying lessons of trust and safety and rigorous responsible AI governance, we can build innovative and safe bots for the future.

The current discourse on artificial intelligence (AI) safeguards suffers from an alignment problem: it often confuses the safety concerns in foundational models with those of the products end users interact with daily.

Recent leaps in general AI capabilities – such as the launch of GPT-5 and its deployment in ChatGPT – have captured global attention. This focus risks distracting practitioners and policy-makers alike from a collaborative approach, where tried-and-true trust and safety methods are enhanced through the use of artificial intelligence to ensure that generative AI (GenAI) products are as safe as possible for the people who use them.

The path to safe, trustworthy and widely deployed chatbots and other generative AI consumer products requires the often-siloed fields of responsible artificial intelligence and digital trust and safety to learn from each other – and from proven approaches to managing risk across different parts of value and supply chains.

Model safety isn’t enough

From policy-makers to philanthropists, and from activists to executives, the conversation around artificial intelligence safety has largely focused on ensuring that general-purpose AI models are generically safe, whether from existential risks to humanity or from everyday concerns about fairness and threats to vulnerable communities.

But dwelling on models alone overlooks an equally urgent dimension: how generative AI products affect real consumers today. This is an area where online consumer businesses – from shopping to gaming – have focused, with an increasingly sophisticated safety toolbox, for years.

The most pressing AI risks are not hypothetical. Allegations of chatbots encouraging self-harm, and the industrial-scale creation of non-consensual intimate imagery and child sexual abuse material, underscore that end-user safety must be paramount.

Despite the overlap between artificial intelligence labs and the companies making traditional digital products, we need more collaboration between the fields of responsible AI and trust and safety.

On the artificial intelligence side, we have robust risk repositories, research collaborations and an ecosystem of AI governance efforts that have generated public debate, elevating understanding of existential and societal risks from misuse of general-purpose AI.

Sorting out responsibility for safety within the complicated AI supply chain is challenging, as the roles of infrastructure providers, model developers, model deployers and end users are often blended and blurred. Developers of frontier models, for example, also operate infrastructure, deploy products for consumers and businesses, and use these tools themselves, such as for content moderation.

That is why putting people first is the right starting point. By focusing on how people interact with AI products, we can apply proven methods for assessing risks and mitigating harms, without reinventing the wheel.

Lessons from digital safety

This is also where the advances made over the past several decades by trust and safety practitioners come into play. From international standards to dedicated forums for collaboration on the toughest challenges, the deployers of AI products and services can benefit from the hard-won lessons of trust and safety.

For example, social media and other digital services have spent decades wrestling with how to mitigate the spread of harmful content while also upholding the rights of users to access information. The results may look different for chatbots, but the calculation for assessing risk and considering tradeoffs when rolling out new features is the same.

The accelerating integration of AI into existing digital services means that for many people, their first encounter with generative AI will be within familiar products – search, productivity tools, or social media.

The regulatory lens on bots

This, too, is how regulators are considering artificial intelligence as part of online safety laws like the EU’s Digital Services Act. Reportedly, the European Commission will soon designate the search component of ChatGPT as a “very large online search engine”, subjecting the service to heightened due diligence and transparency requirements.

In addition, guidelines published by the Commission on elections and the protection of minors have identified risks from AI tools and AI-generated images as a particular priority.

The interaction between artificial intelligence products and other digital services also generates novel risks, such as the archiving of AI chats by search engines, which will require cross-industry collaboration on solutions.

Encouragingly, some AI companies are joining established bodies: Anthropic, for example, recently joined the Global Internet Forum to Counter Terrorism. But broader participation from AI deployers in industry and multistakeholder collaborations is needed.

A transformative opportunity for bots

Every innovative sector tends to view its challenges as uniquely complex. But while the risks may be new, the practices that can address them are not. Better user experiences that can unlock widespread GenAI adoption will come from:

- Industry collaboration on integrating safety-by-design in product development

- Thoughtful governance that is explainable and regularly updated

- Enforcement that blends human and AI capabilities

- Dedication to continuous improvement and creative approaches to appropriate transparency

The good news is that at the working level, artificial intelligence and trust and safety practitioners are deeply engaged with each other on these efforts. The quantity and quality of AI-related sessions at the tentpole trust and safety conference TrustCon speak to this collaboration.

And the potential for well-aligned AI to help transform trust and safety is real. Companies looking to avoid the mistakes of the past can put their tech to work not to replace trust and safety teams, but to enhance their capabilities. To meet the moment, partnerships between AI deployers and the wider digital ecosystem must be formalized and scaled.

If we focus on human end users – applying both the lessons of trust and safety and rigorous responsible AI governance – the chatbots of today and tomorrow can be both innovative and safe.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
