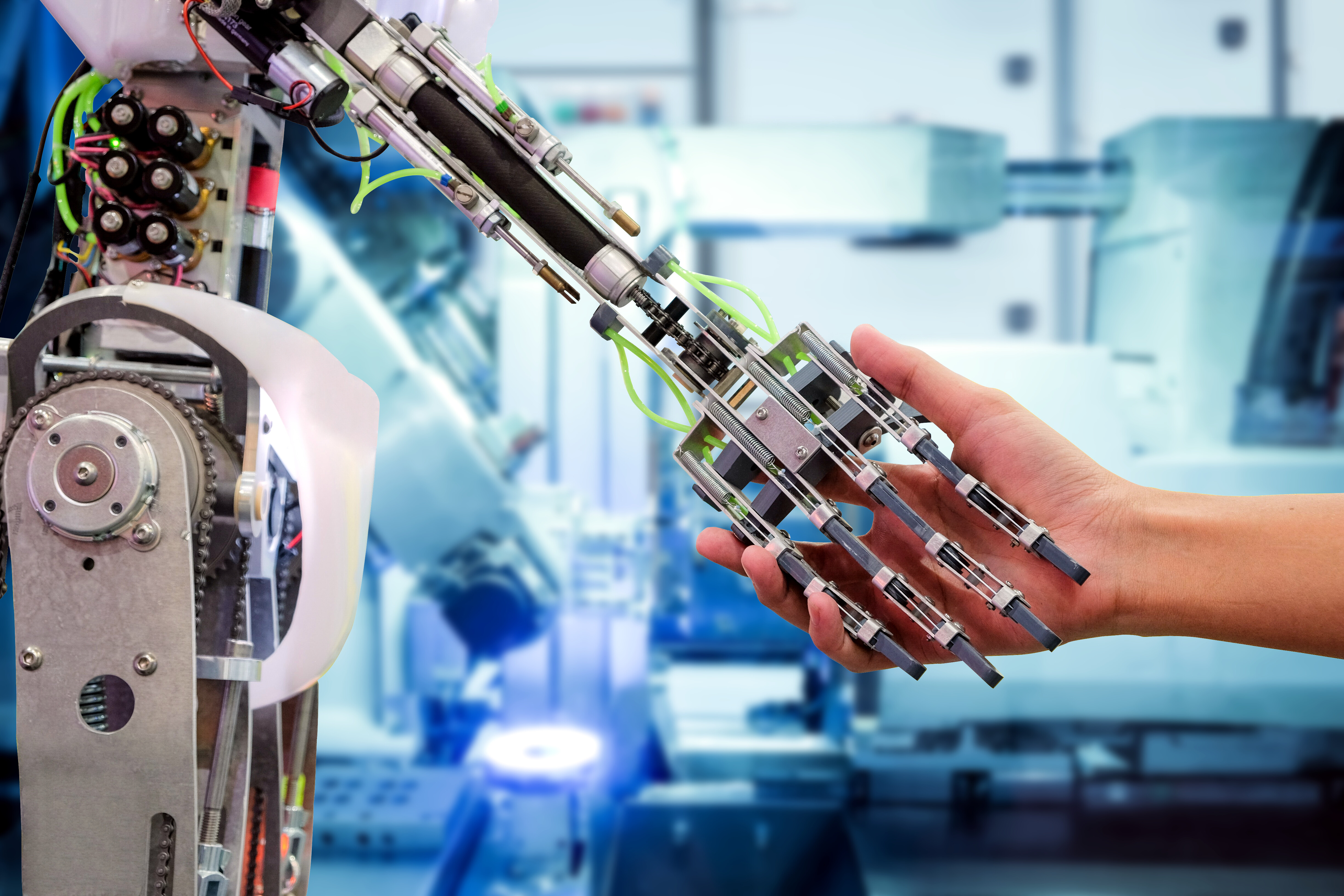

Human-robot interaction is coming. Are we ready for it?

We need to consider the risks involved in human-robot interaction. Image: Getty Images/iStockphoto

- The risks we face when interacting with robots differ from those in human-to-human relationships, and that difference should prompt reflection.

- Society needs clear definitions of concepts around robots, as acceptance of human-robot interaction varies widely across different cultures.

- We also need to reflect on the ethics and the risks involved in the convergence of social robots and potential AI agents with ‘moral agency’.

Many of us treasure memories of time spent with family and friends, with these memories shaping our own personalities and our resilience to what the world confronts us with. We value these relationships precisely because we took the risk to allow others into our personal spaces and intimate thoughts.

Today, we are challenged to consider how the risks we face when interacting with robots differ from those in human-to-human relationships. How might interacting with robots change our memories? And specifically, how might the role we give to robots affect our personal growth and how we navigate the world?

Here, I consider some aspects of how the risk we take in personal relationships changes in human-robot interaction, given the differences between these two kinds of interaction. These differences include, for instance, new nuances of deception and the non-reciprocity of the interaction.

The focus is not on listing these differences in detail, but on highlighting some of the main risks and concerns they raise.

The need for clear definitions of robots

The first key observation I offer is that our reflection should be informed by clear definitions of core concepts. This is necessary, among other reasons, because acceptance of human-robot interaction varies widely across different cultures and, most importantly, because such interaction challenges our very understanding of what it means to be human.

Here, “human-robot interaction” is interpreted in the context of social robots, not industrial ones. Social robots are understood as physical machines that are autonomous (or semi-autonomous) and that interact and communicate with humans by mimicking human behaviour and communication.

They can be deployed in different contexts, including education, childcare, healthcare or companionship, with the understanding that this interaction always includes some form of interpersonal communication.

A core subset of social robots is made up of so-called humanoid robots, which are designed to look like humans. This raises questions of ethics as well as trust in the technology. In some use cases, such as care robots for patients with mental health challenges and companion – specifically sex – robots, there is a real risk of human rights violations.

Furthermore, anthropomorphizing robots, that is, deliberately designing robots for which humans will feel empathy, raises a concern about manipulation. ‘Physically pleasant’ robots may be more likely to achieve their goals in a social context simply by virtue of their appearance, which means that using and interacting with them can have manipulative implications.

When robots take moral decisions

The second key observation is that we need to flag the risks involved in the convergence of social robots and potential AI agents with ‘moral agency’.

The debate in machine ethics is not only whether AI systems should be able to make decisions in morally sensitive contexts, but also whether they should be allowed to generate value judgements that may lead to direct action, as in the case of care robots for the elderly.

Various concerns from ethics and human rights perspectives arise in these debates. These range from risks to human dignity to concerns about transparency and accountability.

A related set of problems arises when some form of personal identity is ascribed to social robots, especially in the context of companion robots. One concern in this context relates to the risk of insufficient protection of privacy.

Should a social robot with machine learning capability store the data generated from its interaction with ‘its’ human in such a way that third-party sharing is enabled, or in such a way that the robot develops an ‘identity’? These concerns will likely grow as robotics converges with more agentic AI.

What it means to be human

The third key point concerns the very notion of what it means to be human.

Will social interaction with robots impact how humans define themselves? Will this be a good or a bad thing? Might it ultimately contribute to the ‘gentle singularity’ – the notion that the quality of human life and human productivity will be vastly improved by the scientific progress enabled through AI – that OpenAI CEO Sam Altman recently wrote of?

A related set of questions centres on whether – and why, or why not – we should protect our current understanding of what it means to be human at all. These questions point to deep, centuries-old philosophical problems that have never been as pertinent as they are now.

Reflection needed to ensure humans thrive

Reflecting on how the risks of human-to-human interaction differ from those of human-robot interaction is essential if humans are to thrive alongside emerging technologies such as robotics and AI.

While we may be far from a global uptake of social robots, due to both technical and economic constraints, by now we should have learned the lesson that responsible engagement with emerging technology is not simply about ensuring that policymaking keeps up with its advancement. It is also about ensuring bottom-up, robust and forward-looking engagement with the technology. This, in turn, will inform its regulation and governance.

Understanding and grappling with the risks posed by human-robot interaction is the first step towards such bottom-up engagement.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
