Why governance is the new infrastructure for physical AI

Embodied systems have begun shifting from a research timeline to an industrial one. Image: Unsplash/Homa Appliances

- Artificial intelligence is transitioning from screen-based tools to physical systems operating within the real economy.

- Demographic shifts and labour shortages are accelerating the adoption of AI to maintain industrial continuity.

- Robust governance frameworks are essential to manage operational risks and ensure safety in physical environments.

The mid-2020s may be remembered as the period when artificial intelligence (AI) ceased to be primarily a screen-based productivity tool and began operating as a physical system in the real economy. What changed was not only model capability, but the speed at which physical AI moved from laboratory demonstrations to field pilots and early commercial deployment.

In early 2026, the signal has become unmistakable. Embodied systems have begun shifting from a research timeline to an industrial one, with digital twins, simulation and synthetic environments compressing iteration cycles before deployment. At major global technology exhibitions, humanoid platforms are framed explicitly for real-world work. At the same time, the adoption engine has matured, with skills increasingly packaged, shared and deployed through ecosystems supported by simulation platforms and scalable data pipelines.

When AI becomes embodied, governance becomes infrastructure.

This is why AI governance — rather than raw technical capability — has become the decisive factor in physical industries. As physical AI systems can be updated, distributed and effectively “downloaded” at increasing speed, operational risk can scale faster than organizational structures adapt. In physical environments, failures cannot simply be patched after the fact. Once AI begins to move goods, coordinate labour or operate equipment, the binding constraint shifts from what systems can do to how responsibility, authority and intervention are governed.

Physical industries are governed by consequences, not computation

Physical industries are governed by consequences, not merely computation. Once AI operates in physical space, errors are no longer abstract or reversible. They materialize as operational disruption and safety risk, often extending beyond the immediate point of failure.

Digital-first sectors can often fail gracefully. A flawed recommendation can be rolled back, tested or corrected in software. Physical operations rarely afford such flexibility. Operations pause when a robot drops a part during handover or loses balance while navigating a factory floor designed for humans. In these environments, AI does not merely optimize processes; it reallocates risk across people, assets and partner organizations.

The implication is structural. In physical industries, the question is not whether AI systems are accurate on average, but whether responsibility, authority and intervention are clearly governed at the moment of failure. Without such AI governance, scale amplifies fragility: the faster systems are deployed, the faster unmanaged risk spreads.

Necessity is the accelerant of physical AI adoption

Necessity, not technology, is the primary accelerant of physical AI adoption. Across advanced economies, demand for movement, production and maintenance continues to rise, while the supply of labour increasingly fails to keep pace. Under these conditions, physical AI systems become continuity mechanisms rather than optional productivity tools.

This pressure is not confined to any single region. The OECD Employment Outlook indicates that working-age populations across advanced economies will stagnate or decline over the coming decades as ageing accelerates and participation tightens. Labour constraints are therefore shared structural conditions shaping industrial strategy, not a temporary anomaly.

Some economies are encountering this reality earlier than others. In parts of East Asia, demographic ageing, declining fertility and tightening labour markets are already influencing automation choices in logistics, manufacturing and infrastructure. These environments are not exceptional; they are simply ahead of a trajectory other advanced economies are likely to follow. As simulation and ecosystem-based learning shorten deployment cycles, the strategic question shifts from “Can we adopt AI?” to “Can we govern AI at scale?” In necessity-driven contexts, governance determines whether automation expands capacity — or merely introduces new forms of systemic fragility.

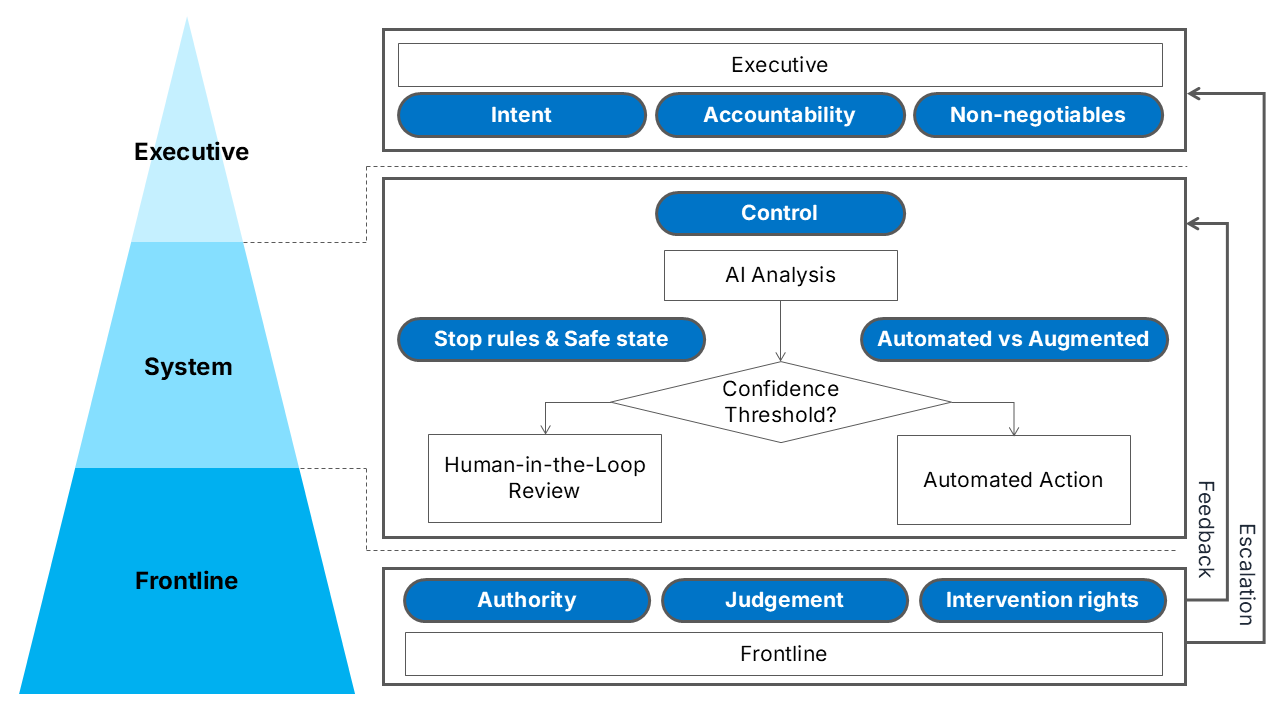

AI governance pyramid for physical AI

If AI governance is treated as compliance paperwork, it will fail in physical industries. What is required is a governance architecture that links executive accountability, system design and frontline authority — and remains robust as physical AI becomes more capable and more deployable. In operational terms, this can be understood as a three-layer governance pyramid.

At the apex sits executive governance: intent, accountability and the non-negotiables. Leaders must define why AI is being deployed: for resilience, safety, continuity and productivity, not for efficiency alone. They must set risk appetite for physical harm, service disruption and systemic fragility, and decide what cannot be delegated to an algorithm.

The middle layer is system governance: governance by design. This is where executive intent becomes engineered reality. It includes determining which decisions are automated, which are augmented and where human approval is mandatory; defining stop rules and safe states; and specifying monitoring, incident reporting and change control. Change control matters because, in physical operations, a model change can alter motion paths, workload balance and safety margins.
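As a rough illustration of what governance by design could look like in software, the sketch below routes each decision through an assigned mode (automated, augmented or human approval) and checks a stop rule before anything else. The decision names, the `MIN_CLEARANCE_M` threshold and the `route` function are hypothetical and chosen only for this example; they do not describe any particular deployed system.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTOMATED = "automated"            # system acts on its own
    AUGMENTED = "augmented"            # system proposes, a human decides
    HUMAN_APPROVAL = "human_approval"  # blocked until a human signs off


# Hypothetical decision types and their assigned modes (illustrative only).
POLICY = {
    "reroute_pallet": Mode.AUTOMATED,
    "rebalance_workload": Mode.AUGMENTED,
    "enter_shared_zone": Mode.HUMAN_APPROVAL,
}

# Hypothetical stop rule: below this clearance the system enters its safe state.
MIN_CLEARANCE_M = 0.5


@dataclass
class Request:
    decision: str
    clearance_m: float           # distance to the nearest person, in metres
    human_approved: bool = False


def route(req: Request) -> str:
    """Return the action the controller should take for this request."""
    # Stop rules are checked first so they override every mode.
    if req.clearance_m < MIN_CLEARANCE_M:
        return "safe_state"
    # Unknown decision types fall back to the most restrictive mode.
    mode = POLICY.get(req.decision, Mode.HUMAN_APPROVAL)
    if mode is Mode.AUTOMATED:
        return "execute"
    if mode is Mode.AUGMENTED:
        return "propose_to_operator"
    return "execute" if req.human_approved else "await_approval"
```

Two design choices in this sketch mirror the text: the stop rule takes precedence over every mode, and any decision type missing from the policy defaults to mandatory human approval rather than to automation.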

At the base sits frontline governance: authority, judgement and intervention rights. Workers require clear authority to override AI, the judgement to interpret confidence and constraints, and the right to intervene, supported by escalation paths that do not penalize action. Capability without decision rights is symbolic; decision rights without usable interfaces are ineffective. Frontline governance should also institutionalize learning loops so that governance improves with operational reality.

Governance as industrial infrastructure

As physical AI accelerates, technical capabilities will increasingly converge, but governance will not. Organizations that treat governance as an afterthought may see early gains, but will discover that scale amplifies fragility. The future of physical industries will be shaped by organizations that design governance early, before risk compounds at scale.

When AI becomes embodied, governance is no longer a constraint on innovation.

It is the foundation on which the next era of physical industries will rise.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
