How will self-driving cars make life or death decisions?

Image: REUTERS/Elijah Nouvelage

Nearly 60% of us would take a ride in a self-driving car, but the ethics of how an autonomous vehicle should act in certain situations are more complicated than you might think. Should the car slam into a wall, killing the passengers, to save pedestrians? Or vice versa? What if one group is elderly and the other teenagers?

These are some of the questions a project undertaken at MIT aims to answer. The study puts people behind the wheel of a self-driving car and forces them to make ethical decisions in potentially deadly scenarios.

We don’t know what we want

Research suggests that autonomous vehicles will reduce the number of accidents, and therefore fatalities, on our roads. A McKinsey & Company report says that crashes could fall by 90%, saving billions of dollars in healthcare alone.

But autonomous vehicles will, at some point, face decisions that result in fatalities whatever action they take. Even for humans, the ethics of such choices are muddled.

One study, published in Science, showed that while we agree that autonomous vehicles should sacrifice their passengers to save others, we, not surprisingly, wouldn't want to ride in such vehicles ourselves.

What would you do?

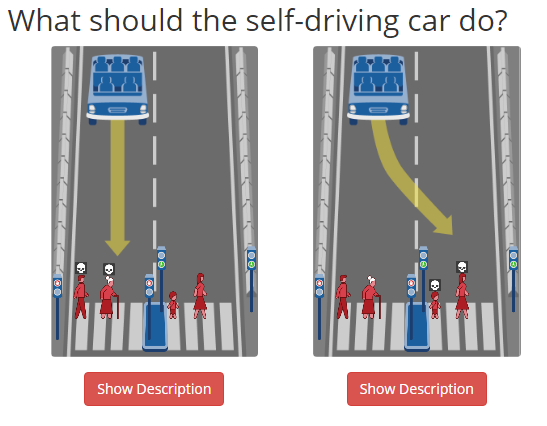

The MIT project explores these ethical conundrums in greater detail by putting you behind the wheel of an autonomous car. The ‘Moral Machine’ presents various scenarios a self-driving car might face – and asks you to decide what the vehicle should do.

For example, here you’re presented with an empty car, but either action will result in fatalities to pedestrians.

Or how about this situation? A full car, but animals on the crossing.

Finally, three pedestrians, three passengers. What should the car do? Note the added complication of the traffic lights on red.

These scenarios highlight the complexity of the problem faced by the makers of self-driving cars. If we, as humans, struggle to reach a consensus on how a machine should act in a specific scenario, how can we expect the machine itself to make such a decision?

As automation and machine learning become more prevalent, from ships and cars to weaponry, the MIT project emphasises the need for greater understanding and awareness.

"Machine intelligence is supporting or entirely taking over ever more complex human activities at an ever increasing pace. The greater autonomy given machine intelligence in these roles can result in situations where they have to make autonomous choices involving human life and limb. This calls for not just a clearer understanding of how humans make such choices, but also a clearer understanding of how humans perceive machine intelligence making such choices," the lead researchers write on their website.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
