The digital world is accelerating and it’s time for tech standards to catch up

The digital age demands global, ethical tech standards



- As digital technologies such as artificial intelligence and smart systems rapidly advance, systemic vulnerabilities, including data breaches, are exploding.

- Current efforts to regulate and standardize digital technology are fragmented and dominated by Global North perspectives.

- Ethical considerations should be embedded in the earliest stages of technology development, not treated as afterthoughts.

As artificial intelligence (AI) evolves, smart cities track our movements and algorithms screen job applicants, the question confronting societies is no longer whether digital technology shapes our lives, but how responsibly it does so.

Yet amid this rapid transformation, one element is glaringly absent: shared ethical tech standards for how these technologies are developed, deployed and governed. We’ve created a digital world without a blueprint for safety, fairness and long-term impact.

Why this matters now

Global investment in digital technologies continues to surge, but critical systemic vulnerabilities have become increasingly evident:

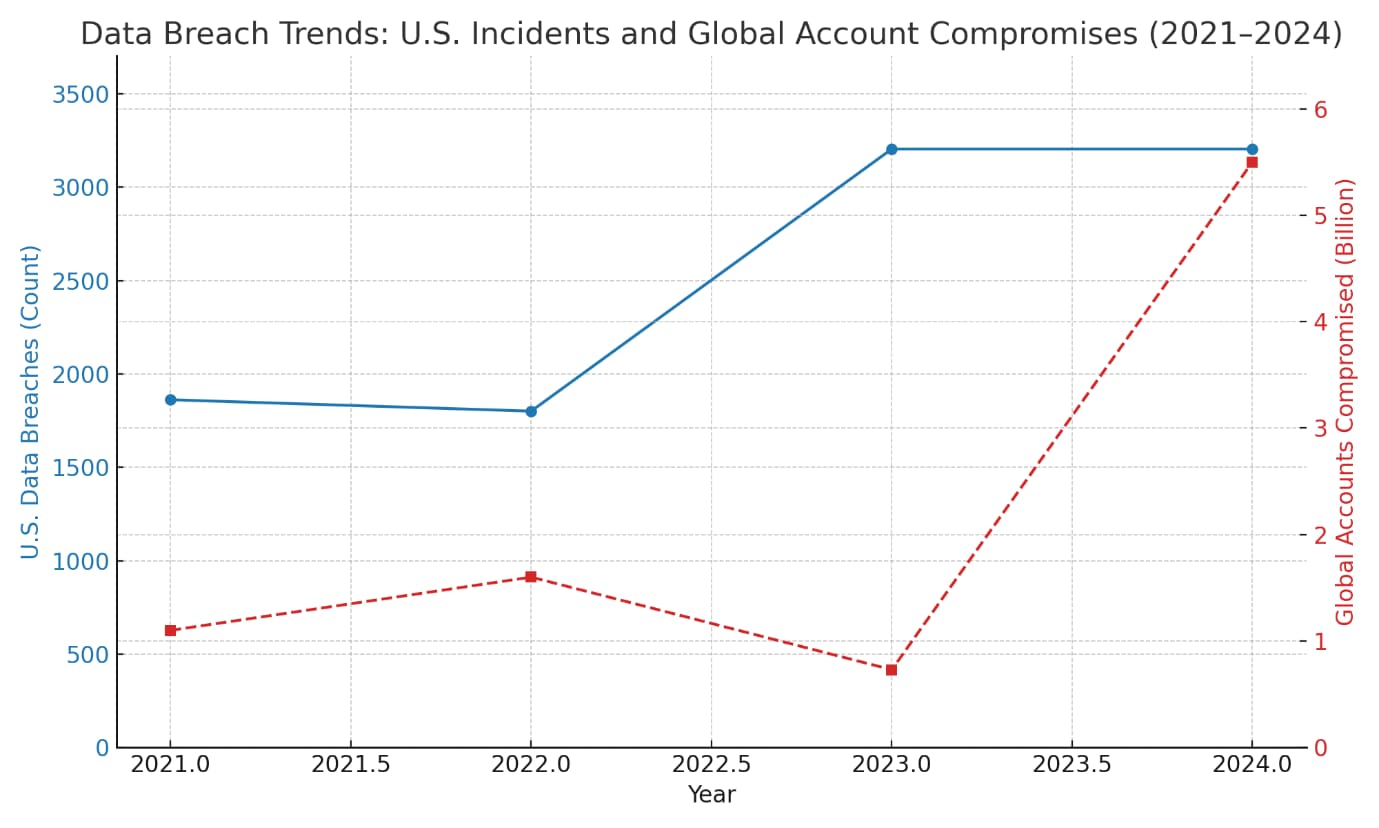

- In 2024, the Identity Theft Resource Center recorded 3,205 data breaches in the United States, exposing nearly 12 billion records, and reported a staggering 312% increase in victims compared with 2023.

- Globally, breached accounts rose from around 730 million in 2023 to over 5.5 billion in 2024, averaging 180 accounts compromised every second.

- High-profile incidents included the April 2024 breach of data broker National Public Data, which exposed 2.9 billion records, and a massive leak of 26 billion records known as the “Mother of All Breaches.”

These figures are not simply technical anomalies. They point to a structural failure in designing digital systems with ethics and resilience in mind.

Tech standards: The missing layer of trust

We expect rigorous standards in food safety, construction and aviation. Why should AI or digital health be any different?

Standards serve two critical purposes. First, they provide a common framework to ensure safety, transparency and accountability, especially when technologies cross borders. Second, they create market confidence by aligning stakeholders (from governments to investors) around shared expectations.

And yet, the standardization landscape for digital technology remains fragmented and reactive. According to the Organisation for Economic Co-operation and Development (OECD), less than 10% of emerging technologies are governed by international norms that address ethical or social risks.

Some promising efforts are emerging. The European Union’s AI Act takes a risk-based approach to regulating AI systems.

The World Economic Forum’s Centre for the Fourth Industrial Revolution is piloting governance sandboxes in collaboration with governments and companies worldwide. Non-profit institutions have also introduced engineering-oriented standards that prioritize ethical design principles in the early development stages.

However, these initiatives remain disconnected. Without global coordination, companies face a patchwork of rules or, worse, a regulatory void that rewards speed over integrity.


Why tech standards must be inclusive and agile

Technology doesn’t exist in a vacuum; values shape it – but whose values?

Many current initiatives are dominated by voices from the Global North, raising concerns about digital colonialism. As emerging economies deploy AI in agriculture, education and public health, it is vital that standards reflect diverse cultural, economic and political contexts.

Moreover, traditional standards development can take years.

In a world where AI models evolve in weeks, we need processes that are faster, more collaborative and more dynamic. Agile governance – including open-source ethics tools, dynamic testing environments and iterative stakeholder consultation – offers one possible model.

3 priorities for a safer digital future

Here are three actionable steps to accelerate the responsible scaling of digital technology:

1. Make ethics a design input, not an afterthought

Companies must move beyond checklists and consider human rights, social impact and long-term risks from the outset of technology development. Established methodologies such as value-sensitive design and ethical impact assessments can guide this process.

In practice, this means product teams should collaborate with ethicists, civil society and affected communities before launching new systems. Several industry-led standards already offer such frameworks. The challenge now is scale and enforcement.

2. Link standards to incentives

Standards don’t just protect the public; they protect companies too. By aligning digital ethics with environmental, social and governance (ESG) criteria, investors can reward companies that proactively adopt best practices.

Governments can also play a role by linking procurement contracts, research and development grants and regulatory approvals to the use of internationally recognized standards.

3. Use multistakeholder platforms to coordinate globally

No single government or company can manage digital risks alone. Institutions such as the World Economic Forum, the OECD and the United Nations Educational, Scientific and Cultural Organization (UNESCO) are uniquely positioned to foster coordination across borders and sectors.

The Forum’s Global Future Councils and AI Governance Alliance offer promising spaces for such collaboration, but their recommendations must be adopted by decision-makers at scale.

Standards are a public good

We are entering a phase where digital technologies will decide more than our search results. They will shape economies, public services and individual rights. To navigate this responsibly, we need tech standards that are ethically grounded, globally recognized and future-proof.

Ethical design shouldn’t be a competitive disadvantage. It should be the baseline for innovation. The choice before us is clear: build digital technologies with intention and inclusivity or risk eroding public trust and social cohesion.

The time to act has never been more urgent.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
