Is this how you can ensure climate justice in the age of AI?

AI must be used to ensure climate justice for vulnerable people worldwide. Image: Photo by Azzedine Rouichi on Unsplash

- From predicting extreme weather to optimizing energy systems, climate action increasingly incorporates AI to boost its effectiveness.

- Climate justice must be deeply embedded in the design and deployment of AI where it's used to enhance sustainability.

- As we stand at the intersection of technological innovation and environmental urgency, we must ask: who benefits, who is left behind and who gets to decide?

Artificial intelligence (AI) has permeated all walks of life, including climate action. From predicting extreme weather to optimizing energy systems, climate action increasingly incorporates AI to boost its effectiveness. There’s a growing imperative, however, to ensure that emerging technologies don’t intensify existing inequalities or further marginalize vulnerable groups. Climate justice is about fair treatment and meaningful involvement of all people in a world impacted by climate change, regardless of their background, income, race, gender, or geography. It must be deeply embedded in the design and deployment of AI for sustainability.

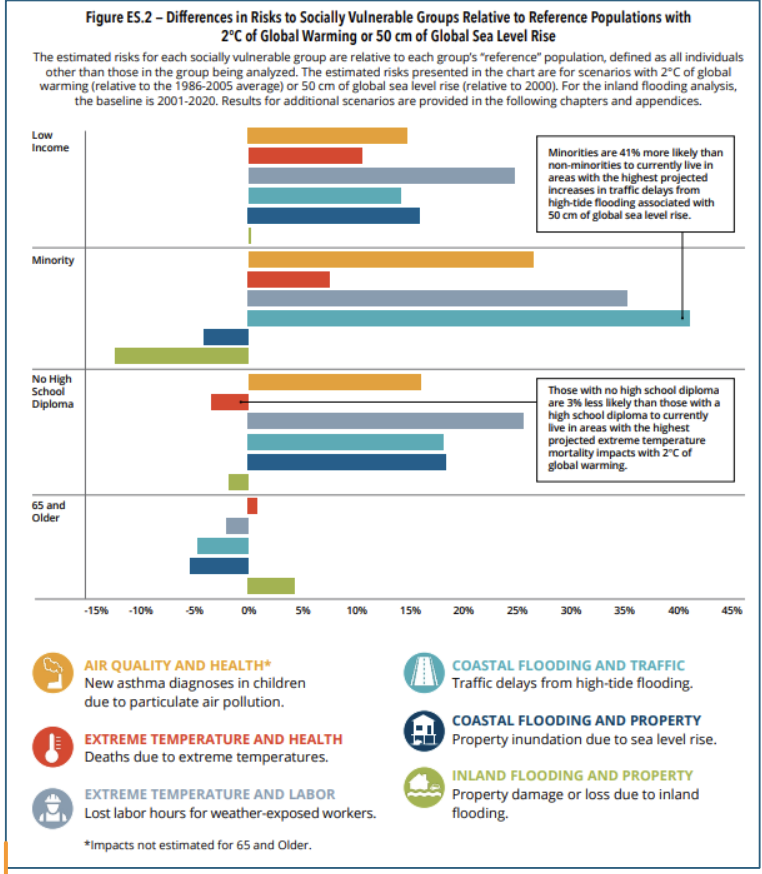

This conversation is urgent because two transformative forces are converging: the climate crisis and the burgeoning AI revolution. A 2021 US Environmental Protection Agency report found that socially vulnerable populations, defined by race, income and age, are more likely to suffer severe harms from climate change, such as extreme heat, poor air quality and flooding.

Simultaneously, as AI increasingly influences decisions about public services, infrastructure and risk management, concerns grow that without deliberate equity efforts and safeguards, AI may become a new vector of exclusion and deepen inequalities.

This is no longer hypothetical. AI is already used in critical environmental contexts like disaster relief, pollution monitoring and energy management. If trained on biased data or designed without input from marginalized communities, these systems can reinforce the very disparities climate justice seeks to dismantle.

Here is how climate justice can be reinforced when deploying AI:

Design for fairness and tackle bias in environmental AI

An AI system can only be as fair as the data and assumptions it’s built upon. In climate action, algorithmic bias can manifest subtly, yet harmfully. If an AI model, for instance, determines air-quality sensor placement using historical complaints logged via smartphones or laptops, it may overlook poorer or rural communities with limited or no internet access, leading to under-monitoring and under-resourcing.

This isn’t just a technical flaw; it’s a justice issue. AI must be designed to ensure societal benefits are equitably distributed. It must take into consideration the lack of access to technology and resources among marginalized communities.

For just, sustainable development, AI solutions must be built on foundational data that includes those excluded from digital ecosystems, so that bias is not carried into deployment. Transparency is also critical. Communities must be able to understand how decisions are made, challenge them when necessary and participate in shaping the tools that affect their lives. This means opening up AI models to public scrutiny and embedding participatory design processes from the outset. It also means carrying out impact assessments, both algorithmic and environmental, to evaluate the societal and environmental impacts of AI.
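The air-quality sensor example above can be made concrete with a small sketch. It assumes two hypothetical districts with invented figures, and it uses one simple correction (dividing online complaints by the share of residents who are online) purely as an illustration. It is not a substitute for collecting data directly from communities that are offline.

```python
from dataclasses import dataclass


@dataclass
class District:
    name: str
    complaints: int         # air-quality complaints logged online
    population: int
    internet_access: float  # share of residents with internet access, 0 to 1


def raw_rate(d: District) -> float:
    """Complaints per resident, ignoring who is able to complain online."""
    return d.complaints / d.population


def adjusted_rate(d: District, floor: float = 0.05) -> float:
    """Complaints per resident who could plausibly have filed one online.

    The floor (an assumed value) avoids dividing by a near-zero share.
    """
    reach = max(d.internet_access, floor)
    return d.complaints / (d.population * reach)


def rank(districts, key):
    """District names ordered from highest to lowest priority."""
    return [d.name for d in sorted(districts, key=key, reverse=True)]


# Hypothetical data, invented for illustration only.
districts = [
    District("Riverside", complaints=120, population=10_000, internet_access=0.9),
    District("Hillview", complaints=30, population=10_000, internet_access=0.2),
]

by_raw = rank(districts, raw_rate)
by_adjusted = rank(districts, adjusted_rate)
```

With these invented figures, ranking by raw complaints puts the well-connected district first, while the adjusted ranking puts the poorly connected district first. The point is only that the choice of data changes who gets monitored.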

Empower marginalized communities through data sovereignty

Data is power, and history shows that power hasn’t been equitably shared. Indigenous and marginalized communities often hold deep ecological knowledge that can enhance AI-driven climate solutions. Yet they’re often excluded from decision-making and treated merely as data sources.

Consider this: if an AI-powered initiative to tackle deforestation collects and analyses satellite imagery and sensor data for a region but fails to consult local Indigenous populations, its conservation efforts may be misdirected. Mistakes are easily made when traditional ecological knowledge is not combined with AI analysis. For example, a region may be flagged as 'at risk' when it is not, because of rotational harvest cycles and seasonal browning that Indigenous Peoples have designed for sustainability. Satellite data alone cannot interpret these patterns; that takes local, Indigenous knowledge.

Policy frameworks that harness AI for localized justice are critical. They must empower Indigenous communities with control over their data and provide training to complement AI models. Community-driven approaches, especially from poorer, Indigenous populations, are essential for equitable climate interventions.

The UN’s 2024 report on AI governance emphasizes the need for inclusive global frameworks that protect human rights and ensure that AI serves all communities. This includes respecting data sovereignty, which means giving communities control over how their environmental data is collected, interpreted and used. In the Pacific Island nations, for example, community-led drone initiatives are actively monitoring coastal erosion and offering insights for climate adaptation. Meanwhile, in Canada, First Nations are advancing data sovereignty efforts to ensure environmental modelling supports their communities rather than enabling surveillance. Both are instances where AI is being adapted to Indigenous knowledge, cultural values and local priorities.

Foster accountability and ensure just regulatory frameworks

The backbone of ethical AI will always be ethical governance models that hold private and public actors accountable when AI-driven climate decisions cause disproportionate harms. International cooperation, environmental and algorithmic impact assessments for AI systems, and oversight are critical when leveraging AI to deliver climate justice.

Alongside this, there must be mechanisms for redress when AI-driven climate decisions cause disproportionate harm. For example, if an AI-powered system optimizes waste collection routes to improve efficiency and reduce emissions, poorer neighbourhoods with more minority residents could end up with less frequent collections. Those residents should be able to raise the issue through accessible grievance mechanisms, and such systems should be subject to mandatory impact assessments with effective transparency standards.
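One check that an impact assessment for the waste-collection example might include is sketched below. The neighbourhood names, the figures and the 0.8 review threshold are all hypothetical, chosen only to illustrate the idea. A real assessment would set its own criteria with the affected communities.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Neighbourhood:
    name: str
    low_income: bool
    collections_per_month: float  # as scheduled by the route optimizer


def service_ratio(areas) -> float:
    """Average collections in low-income areas relative to all other areas."""
    low = [a.collections_per_month for a in areas if a.low_income]
    other = [a.collections_per_month for a in areas if not a.low_income]
    if not low or not other:
        raise ValueError("need at least one neighbourhood in each group")
    return mean(low) / mean(other)


def needs_review(areas, threshold: float = 0.8) -> bool:
    """Flag the schedule when low-income areas fall below the assumed threshold."""
    return service_ratio(areas) < threshold


# Hypothetical schedule, invented for illustration only.
schedule = [
    Neighbourhood("Eastgate", low_income=True, collections_per_month=4),
    Neighbourhood("Millbrook", low_income=True, collections_per_month=6),
    Neighbourhood("Parkside", low_income=False, collections_per_month=8),
    Neighbourhood("Lakeview", low_income=False, collections_per_month=8),
]

ratio = service_ratio(schedule)
flagged = needs_review(schedule)
```

A flag like this does not replace a grievance mechanism. It only surfaces a disparity early, so that residents are not the first to discover it.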

The UN’s Advisory Body on Artificial Intelligence has called for a globally inclusive architecture for AI governance, emphasizing agility, adaptability and human rights protection. These principles are especially critical in climate contexts, where decisions can have life-altering consequences. Moreover, these frameworks must be enforceable. Voluntary guidelines are not enough when the stakes are this high. As AI becomes more embedded in public infrastructure and climate policy, regulatory oversight must keep pace.

A 'just climate' future requires 'just' technology

AI holds immense promise for accelerating climate solutions, but only if wielded with care, foresight and justice. As we stand at the intersection of technological innovation and environmental urgency, we must ask: who benefits, who is left behind and who gets to decide?

Ensuring climate justice in the age of AI means embedding fairness, participation and accountability into every layer of these systems. It means listening to those most affected, sharing power and designing with, not just for, communities. Only then can AI truly serve as a tool for inclusive and sustainable transformation.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
