How to build a strong foundation for AI agent evaluation and governance

Organizations need to take a structured approach to AI agent assessment and adoption. Image: Getty Images/Quardia

Benjamin Larsen

Initiatives Lead, AI Systems and Safety, Centre for AI Excellence, World Economic Forum

- As more organizations adopt artificial intelligence (AI) agents, they must properly define their roles and put safeguards and oversight in place.

- Recent research outlines four foundational pillars for a structured approach to AI agent assessment and adoption.

- This approach will help organizations to continue to guide AI agents responsibly, even as their capabilities grow.

Artificial intelligence (AI) agents have been rapidly shifting from experimental prototypes to integrated collaborators in enterprises, public services and daily life. These AI-powered systems can be built to independently interpret information, make decisions and carry out actions to achieve specific goals. More capable systems will continue to emerge in the years to come, with implications for people, businesses and society.

As organizations adopt AI agents to augment teams or act in the physical world, they should onboard them with the same rigour they would apply to a new employee. This includes developing well-defined roles, safeguards and structured oversight practices.

A white paper, AI Agents in Action: Foundations for Evaluation and Governance, developed by the World Economic Forum’s Safe Systems and Technologies working group in collaboration with Capgemini, outlines the emerging foundations for responsible adoption of AI agents. It introduces four pillars that provide a structured approach to AI agent assessment and adoption:

1. Classification

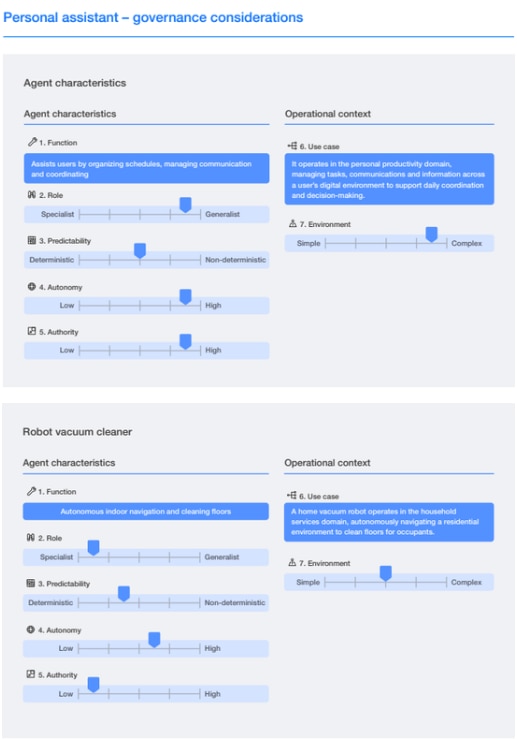

A structured approach to agent classification helps decision-makers articulate what an agent does, how it behaves and under what conditions it should operate. An agent card, or a “resume” for the AI agent, can provide critical information about the agent’s capabilities before it is “onboarded” and integrated into an organization.

Seven core dimensions should define an agent’s profile:

- Function: What task is the agent designed to perform?

- Role: Is it highly specialized or a generalist?

- Predictability: Is it deterministic or non-deterministic?

- Autonomy: To what degree can it plan, decide and act independently?

- Authority: What permissions or system access is it granted?

- Use case: In what domain or workflow is it deployed?

- Environment: How simple or complex is the operating context?

When combined, these dimensions generate a behavioural and operational understanding of the agent, which is critical for assessing risks, setting oversight levels and communicating expectations between providers, adopters and end users.
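To make the idea of an agent card concrete, the seven dimensions can be sketched as a small data structure. This is only an illustrative sketch, not a format defined by the white paper: the class name `AgentCard`, the field names and the three-level `Level` scale are assumptions made for this example. The autonomy, authority and environment values follow the robot vacuum cleaner example used in this article; the remaining values are hypothetical.

```python
from dataclasses import asdict, dataclass
from enum import Enum


class Level(Enum):
    """Hypothetical three-step scale; the white paper may use a different one."""
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AgentCard:
    """One field per classification dimension listed above."""
    function: str                  # What task is the agent designed to perform?
    role: str                      # "specialist" or "generalist"
    deterministic: bool            # Predictability
    autonomy: Level                # Degree of independent planning and action
    authority: Level               # Permissions and system access
    use_case: str                  # Domain or workflow
    environment_complexity: Level  # How simple or complex the context is


# Autonomy, authority and environment come from the article's vacuum example;
# the other values are assumptions added for illustration.
robot_vacuum = AgentCard(
    function="clean floors",
    role="specialist",
    deterministic=False,
    autonomy=Level.MEDIUM,
    authority=Level.LOW,
    use_case="household cleaning",
    environment_complexity=Level.LOW,
)

# Flatten the card into plain values so it can be shared or stored.
card = {
    key: (value.value if isinstance(value, Level) else value)
    for key, value in asdict(robot_vacuum).items()
}
print(card)
```

A card like this could be exchanged between providers and adopters before onboarding, so that oversight levels are set from an explicit profile rather than from assumptions.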

The concept of autonomy is very different from earlier forms of automation. The key distinction is that autonomy entails decision-making flexibility, or choosing what to do, whereas automation emphasizes execution reliability, or doing what the system is programmed to do. Autonomy and authority should not be treated as inherent system properties, but as design choices that can be made based on the agents’ intended functions, risk considerations and oversight requirements.

For example, a robot vacuum cleaner is low-authority, medium-autonomy and operates in a relatively simple environment, whereas a personal digital assistant could span multiple systems, access sensitive data across platforms and perform actions on behalf of users. As such, a personal assistant requires much more scrutiny.

2. Evaluation

As organizations begin deploying agents with different functional roles, structured evaluation becomes more important. Traditional AI evaluation relies heavily on model-centric benchmarks, testing accuracy or reasoning in controlled scenarios. But agents are not static models. They are systems made up of one or more models plus tools, memory, feedback loops and user interactions – all within varying environments.

Evaluating an AI agent therefore means examining dimensions such as task success rates, tool-use reliability, performance, behaviour over time, user trust and interaction patterns, and operational robustness across real-world workflows.
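Two of these dimensions, task success rate and tool-use reliability, can be computed directly from run logs. The sketch below assumes a hypothetical `EpisodeRecord` structure with one record per attempted task; the field names and the sample data are invented for illustration and do not come from the white paper or any specific benchmark.

```python
from dataclasses import dataclass


@dataclass
class EpisodeRecord:
    """Hypothetical log entry for one task the agent attempted."""
    task_succeeded: bool
    tool_calls: int
    tool_errors: int


def summarize(episodes):
    """Aggregate task success rate and tool-call reliability over episodes."""
    if not episodes:
        raise ValueError("need at least one episode to evaluate")
    total_calls = sum(e.tool_calls for e in episodes)
    total_errors = sum(e.tool_errors for e in episodes)
    return {
        "task_success_rate": sum(e.task_succeeded for e in episodes) / len(episodes),
        # Reliability is undefined when the agent never called a tool.
        "tool_call_reliability": (
            1 - total_errors / total_calls if total_calls else None
        ),
    }


# Invented sample data: four episodes, three of them successful.
episodes = [
    EpisodeRecord(task_succeeded=True, tool_calls=5, tool_errors=0),
    EpisodeRecord(task_succeeded=True, tool_calls=3, tool_errors=1),
    EpisodeRecord(task_succeeded=False, tool_calls=4, tool_errors=2),
    EpisodeRecord(task_succeeded=True, tool_calls=0, tool_errors=0),
]
summary = summarize(episodes)
print(summary)
```

Metrics such as user trust or behaviour over time need richer data than this and are deliberately left out of the sketch.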

Emerging benchmarks such as AgentBench or SWE-bench provide early signals, but real assurance comes from contextual evaluation. This means testing agents in environments that mirror actual deployment conditions. As such, developers (who have know-how of the system) and adopters (who have data and expertise related to the operational context) often need to collaborate closely to ensure accurate and reliable in-context evaluation.

3. Risk assessment

While evaluation supplies evidence, risk assessment determines what that evidence means for safety and suitability. Generally, a five-step risk assessment life cycle moves through defining the context, then identifying, analysing, evaluating and managing the associated risks.

This approach connects classification and evaluation directly to governance. For example, high-authority, high-autonomy agents in complex environments require more safeguards. Non-deterministic behaviour must also be paired with stricter monitoring. And agents interacting with external systems must operate under zero-trust security assumptions because their integrations expand the attack surface.
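The three examples above can be read as simple rules that map an agent's classification to required controls. The following sketch encodes them that way. The function name, parameter names, `Level` scale and the wording of each control are assumptions for illustration; a real policy would be more granular than three if-statements.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-step scale for classification dimensions."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def required_safeguards(*, autonomy, authority, environment,
                        deterministic, calls_external_systems):
    """Map a classification profile to controls, following the examples above."""
    safeguards = ["baseline oversight"]
    # High-authority, high-autonomy agents in complex environments need more.
    if Level.HIGH == autonomy == authority == environment:
        safeguards.append("additional safeguards")
    # Non-deterministic behaviour is paired with stricter monitoring.
    if not deterministic:
        safeguards.append("stricter monitoring")
    # External integrations expand the attack surface.
    if calls_external_systems:
        safeguards.append("zero-trust security for integrations")
    return safeguards


# Hypothetical profile for a personal digital assistant.
assistant_controls = required_safeguards(
    autonomy=Level.HIGH,
    authority=Level.HIGH,
    environment=Level.HIGH,
    deterministic=False,
    calls_external_systems=True,
)
print(assistant_controls)
```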

This structured approach is essential as agents increasingly operate across organizational boundaries, invoke external tools and collaborate with other agents through interoperability protocols.

4. Progressive governance

Evaluation and risk assessment provide critical insights into an agent’s capabilities, performance, reliability, security, safety and alignment. Governance, in turn, determines whether those insights translate into effective oversight and responsible adoption.

The white paper outlines a progressive approach to agent oversight, starting with a baseline for all agents that includes logging and traceability, clear identity tagging for every agent action and real-time monitoring (increasingly relying on agents to monitor agents).
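As a rough illustration of the logging and identity-tagging part of this baseline, the sketch below writes one structured, timestamped log line per agent action using Python's standard `logging` and `json` modules. The helper names `build_audit_logger` and `log_agent_action`, the logger name and the record fields are assumptions made for this example, not a schema from the white paper.

```python
import io
import json
import logging
from datetime import datetime, timezone


def build_audit_logger(stream):
    """Return a logger that writes one JSON line per agent action to `stream`."""
    logger = logging.getLogger("agent_audit")  # hypothetical logger name
    logger.handlers.clear()      # avoid duplicate lines if called again
    logger.setLevel(logging.INFO)
    logger.propagate = False     # keep audit lines out of the root logger
    logger.addHandler(logging.StreamHandler(stream))
    return logger


def log_agent_action(logger, agent_id, action, **details):
    """Tag an action with the acting agent's identity and a UTC timestamp."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "action": action,
        "details": details,
    }
    logger.info(json.dumps(record, sort_keys=True))
    return record


# Example: capture the audit trail in memory and print it.
audit_stream = io.StringIO()
audit_logger = build_audit_logger(audit_stream)
log_agent_action(audit_logger, "assistant-01", "send_email", recipient="team")
print(audit_stream.getvalue())
```

Because every line carries an `agent_id`, a monitoring process (human or agent) can trace each action back to the agent that performed it.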

A progressive approach to governance ensures that more capable agents receive proportional oversight, while still enabling innovation and flexibility in scaling over time.

Building multi-agent AI ecosystems

The next frontier for AI agents goes far beyond the integration of individual agents within organizations. It involves emerging ecosystems of agents that interact across networks, platforms and organizations. This means organizations starting to adopt and deploy agents today should consider issues such as:

- Orchestration drift: When agents are plugged into other agents without shared context or coordination logic, workflows can become brittle or unpredictable.

- Semantic misalignment: When two agents interpret the same instruction differently, it can lead to conflicting actions or duplicated effort, with implications for safety, reliability and coordination.

- Security and trust gaps: Without shared trust frameworks, agents may inadvertently expose sensitive data or interact with malicious actors that exploit vulnerabilities in the system.

- Interconnectedness and cascading effects: Failures in tightly linked agents or systems can propagate across networks, creating a chain of disruptions.

- Systemic complexity: As the number and diversity of interacting agents grow, the likelihood of emergent behaviours and cascading failures increases, making them more difficult to anticipate, trace or diagnose.
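One way to reason about cascading effects is to model the ecosystem as a dependency graph and ask which agents sit downstream of a failure. The sketch below uses a breadth-first traversal for this. The agent names and the `dependents` mapping are hypothetical, and reachability is only a worst-case bound: a real failure may not propagate along every edge.

```python
from collections import deque


def affected_by_failure(dependents, failed):
    """Return every agent reachable downstream of `failed`.

    `dependents` maps an agent name to the agents that consume its output.
    """
    seen = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for downstream in dependents.get(current, ()):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    seen.discard(failed)  # report only the knock-on effects
    return seen


# Hypothetical ecosystem: each agent lists the agents that depend on it.
dependents = {
    "retriever": ["planner"],
    "planner": ["executor", "reporter"],
    "executor": ["reporter"],
}
impacted = affected_by_failure(dependents, "retriever")
print(sorted(impacted))
```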

These emerging issues make forward-looking oversight and progressive governance essential. New approaches such as using auditor agents can help monitor, validate and regulate agent ecosystems at scale. They also create new vulnerabilities, however, including the risk of depending on agents to monitor other agents.

A responsible plan for deploying AI agents

AI agents are already delivering value across industries – from speeding up R&D and customer service to helping organizations manage complex operations. But as their capabilities grow, so should organizations’ ability to guide them responsibly.

The four foundations introduced in the white paper (classification, evaluation, risk assessment and progressive governance) offer a structured blueprint for organizations preparing today for an agentic future. With careful design, proportionate safeguards and cross-functional collaboration, AI agents can amplify human capability, unlock productivity and support high-trust, high-impact digital ecosystems.

The long-term success of AI agent adoption and use, however, depends on building systems that remain understandable, reliable and accountable. These systems must be built as shared tools for long-term value creation.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
