Why effective AI governance is becoming a growth strategy, not a constraint

AI governance pillars strengthen customer confidence, regulatory readiness and long-term competitiveness. Image: Freepik/DC Studio

- Organizations that embed governance early avoid fragmentation, duplication and risk, allowing artificial intelligence initiatives to scale faster and more reliably.

- Responsible, ethical and trustworthy AI strengthens customer confidence, regulatory readiness and long-term competitiveness.

- Clear accountability, transparency, fairness and integrity must be built into everyday workflows, system design and decision-making rather than left as policy statements.

Business leaders often talk about artificial intelligence (AI) governance as if it’s a speed bump on the road to high-impact innovation, slowing everything down while competitors sprint ahead unfettered.

The truth is that governance provides the traction you need to accelerate while keeping your business on the road and preventing it from veering off course. Getting governance right from the start helps you drive in the fast lane and stay there.

Without good governance, AI initiatives tend to fragment. They can get stuck in data silos, incomplete processes, inadequate monitoring, undefined roles, duplication of effort and inefficient use of resources. The benefits you seek can quickly turn into negative consequences.

As AI moves from experimentation to enterprise-scale deployment, governance has become a critical driver of sustainable growth, providing the trust, clarity and accountability needed to scale intelligent systems responsibly.

By embedding responsibility, transparency and ethical oversight into the architecture of AI, organizations can unlock business value while strengthening public and stakeholder confidence. In an economy increasingly shaped by intelligent systems, governance is more than a safeguard; it is a strategic advantage.

The 3 pillars of effective AI governance

Effective AI governance is a comprehensive framework of strategies, policies and processes. It connects business ambition, ethical intent and operational execution into a coherent system, ensuring AI can be trusted and scaled responsibly.

To foster trust in AI systems and confidently scale AI across your business, leaders must focus on these three pillars:

- Responsible AI focuses on preventing harm and acknowledging that AI affects individuals, institutions and society in ways we can’t afford to ignore. It means proactively reducing threats to human rights, social values and environmental wellbeing. AI should not cause collateral damage as it scales.

- Ethical AI is harder to pin down because ethical standards can vary by culture, context and regional values. Organizations need AI policies that reflect the ethical values of their stakeholders, and these policies must be transparent.

- Trustworthy AI is about whether people can rely on the system. Does it work as intended, reliably and without bias? Can people interrogate it? Can it explain itself? Trust is earned through rigorous testing, monitoring, documentation and transparency.

Unlocking value faster

At first glance, governance seems to be all about preventing harm. That remains vitally important, but it is only part of the story. The business and customer value comes from AI governance's power to unlock sustainable growth.

It can do this by improving customer engagement, opening new revenue streams and ensuring that AI initiatives are thoroughly vetted for safety and business impact. This dual focus – social and business value – pushes organizations higher on the AI value chain.

Here are five focal points for balancing responsible AI with measurable business outcomes:

- Accountability: Clarify roles, responsibilities and human oversight so people can be held accountable for AI outcomes.

- Fairness: Design and deploy AI to support inclusion and well-being by proactively identifying and mitigating harmful bias.

- Privacy: Strengthen data governance with tools and processes that protect privacy and data integrity across the AI lifecycle.

- Transparency: Enable clear stakeholder communication, interpretable AI outcomes and auditability of AI systems.

- Integrity: Build reliable and secure AI by preventing misuse and continuously validating models to ensure accurate, trustworthy results.

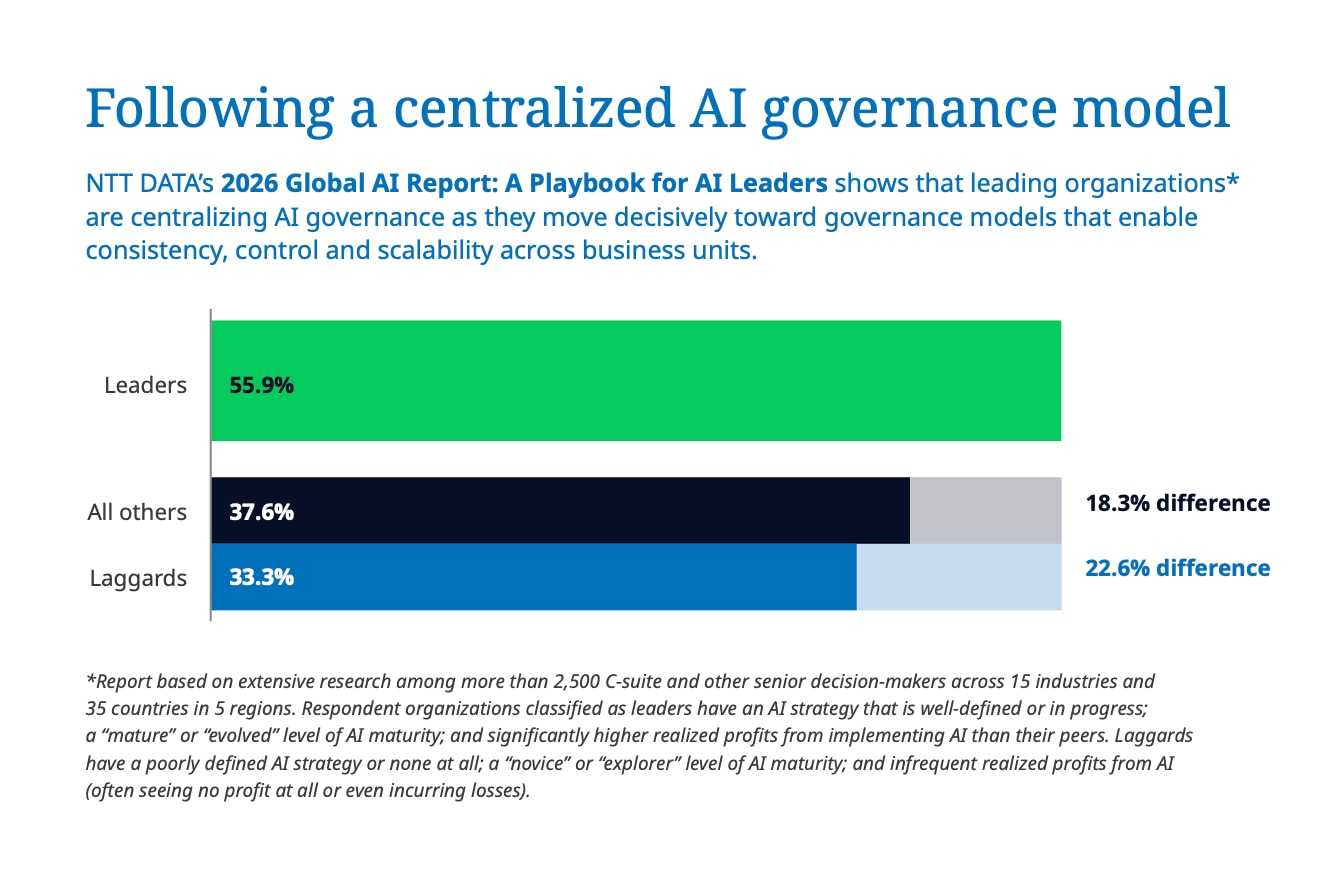

This broad scope is why many leading organizations have established governance offices, review boards, safety councils and operational AI teams, and have appointed chief AI officers. The point is not to create paperwork but to translate policy into a glide path for effective action and repeated innovation.


AI governance roadmap

Once the principles, standards and teams are in place, the work of embedding AI governance across operations can begin. There are three primary milestones for comprehensive and actionable AI governance:

- AI maturity assessment: Evaluate your current AI capabilities, strengths, gaps and readiness. The goal is to create a clear baseline that aligns with long-term business and governance goals.

- Customized AI blueprint: The next step is to create a blueprint that outlines the strategic initiatives, milestones and resources you will need to build a robust, future-proofed AI framework. Elements include structures, processes and policies that ensure ongoing oversight, continuous improvement and alignment with ethical, legal and organizational requirements.

- Governance implementation: This is where the rubber hits the road as you embed governance into everyday work.

The bottom line

Executives want speed, but they need a steering wheel. Governance is how you steer toward your goals responsibly, sustainably and ethically, with trust at the core.

To get ahead and stay ahead with AI, organizations must build governance into their operating architecture before driving AI into their applications. That's how you move fast without breaking your business, and it's how powerful AI becomes dependable, trustworthy and fair even as it transforms your business and our world.

Learn more about this topic in NTT DATA's guide "Mastering AI governance: Empowering organizations to lead with responsible AI."


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.


More on Artificial Intelligence

Jeremy Jurgens

February 27, 2026