Robots in war: the next weapons of mass destruction?

Image: A Ukrainian serviceman operates a drone during a training session outside Kiev, November 6, 2014. REUTERS/Valentyn Ogirenko

Stuart Russell

Professor of Computer Science and Director of the Center for Human-Compatible AI, University of California, Berkeley

Catch up with the debate on automated weapons and killer machines in the session What If Robots Go to War. It'll be livestreamed from Davos at 15.00 on Thursday 21 January 2016.

Imagine turning on the TV and seeing footage from a distant urban battlefield, where robots are hunting down and killing unarmed children. It might sound like science fiction – a scene from Terminator, perhaps. But unless we take action soon, lethal autonomous weapons systems – robotic weapons that can locate, select and attack human targets without human intervention – could be a reality.

I’m not the only person worried about a future of “killer robots”. In July 2015, over 3,000 of my peers in artificial intelligence and robotics research – including many members of the Forum’s Global Agenda Council on Artificial Intelligence and Robotics – signed an open letter calling for a treaty to ban lethal autonomous weapons. We were joined by another 17,000 signatories from fields as diverse as physics, philosophy and law, including Stephen Hawking, Elon Musk, Steve Wozniak and Noam Chomsky.

The letter made headlines around the world, with more than 2,000 media articles in over 50 countries. So what is the debate about?

A new breed of robots

We’re all familiar, in varying degrees, with three pieces of modern technology:

1. The self-driving car: You tell it where to go and it chooses a route and does all the driving, “seeing” the road through its onboard camera.

2. Chess software: You tell it to win and it chooses where to move its pieces and which enemy pieces to capture.

3. The armed drone: You fly it remotely through a video link, you choose the target, and you launch the missile.

A lethal autonomous weapon might combine elements of all three: imagine that instead of a human controlling the armed drone, the chess software does, making its own tactical decisions and using vision technology from the self-driving car to navigate and recognize targets.

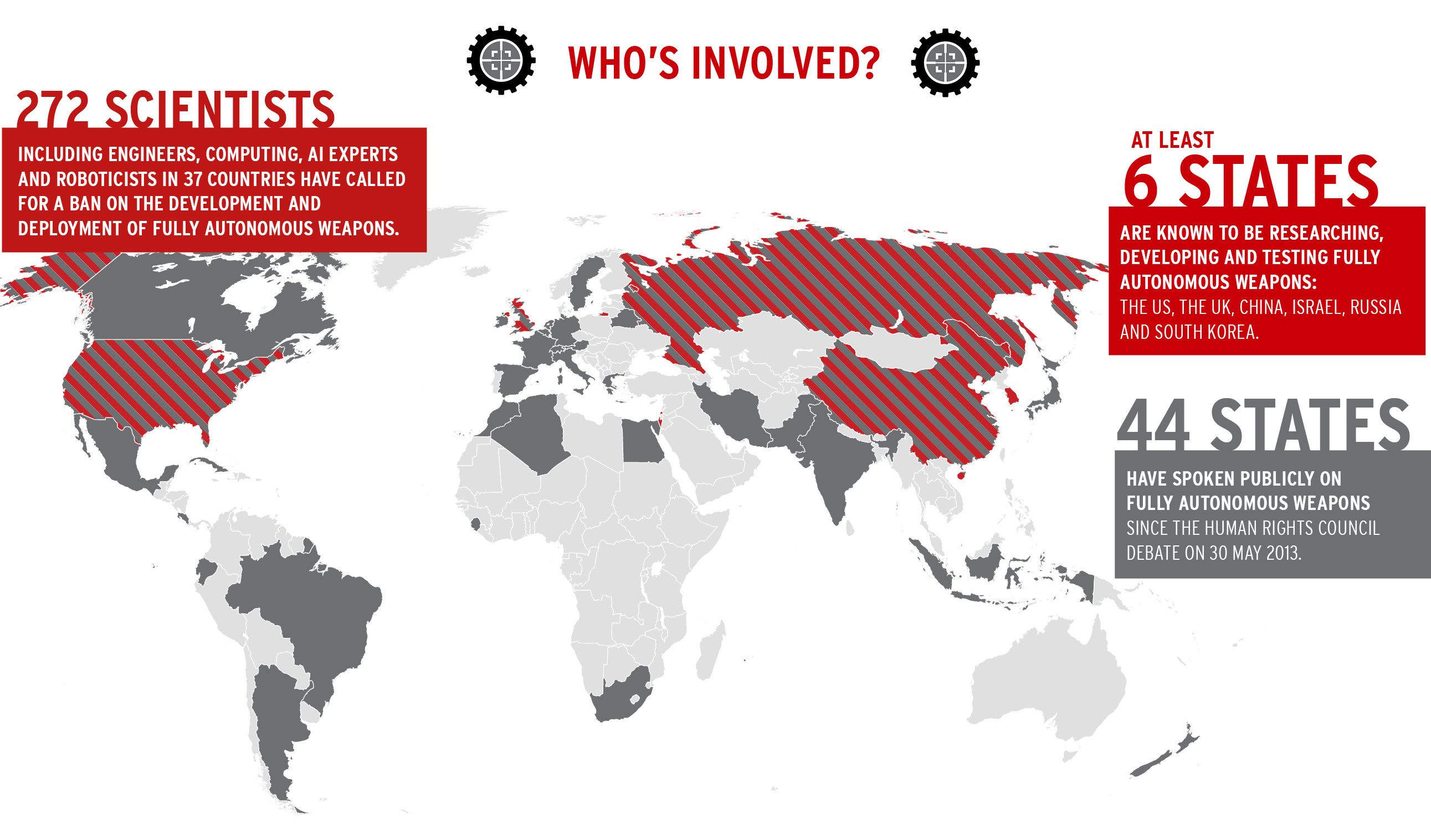

In the UK – one of at least six states researching, developing and testing fully autonomous weapons – the Ministry of Defence has said that such weapons are probably feasible now for some aerial and naval scenarios. Two programmes from the US Defense Advanced Research Projects Agency (DARPA) already provide clues as to how autonomous weapons might be used in urban settings. The Fast Lightweight Autonomy programme will see tiny rotorcraft manoeuvre unaided at high speed in urban areas and inside buildings. The Collaborative Operations in Denied Environment programme plans to create teams of autonomous aerial vehicles that could carry out every step of a strike mission in situations where enemy jamming makes communication with a human commander impossible. In a press release describing the programme, DARPA memorably compared it to “wolves hunting in coordinated packs”.

Source: Campaign to Stop Killer Robots

There is no doubt that as the technology improves, autonomous weapons will be highly effective. But does that necessarily mean they’re a good idea?

Robotic outlaws

We might think of war as a complete breakdown of the rule of law, but it does in fact have its own legally recognized codes of conduct. Many experts in this field, including Human Rights Watch, the International Committee of the Red Cross and UN Special Rapporteur Christof Heyns, have questioned whether autonomous weapons could comply with these laws. Compliance requires subjective and situational judgements that are considerably more difficult than the relatively simple tasks of locating and killing – and which, with the current state of artificial intelligence, would probably be beyond a robot.

Even those countries developing fully autonomous weapons recognize these limitations. In 2012, for example, the US Department of Defense issued a directive stating that such weapons must be designed in a way that allows operators to “exercise appropriate levels of human judgement over the use of force”. The directive specifically prohibits the autonomous selection of human targets, even in defensive settings.

Life-saving robot warriors?

But some robotics experts, such as Ron Arkin, think that lethal autonomous weapons systems could actually reduce the number of civilian wartime casualties. The argument is based on an implicit ceteris paribus assumption that, after the advent of autonomous weapons, the specific killing opportunities – numbers, times, locations, circumstances, victims – will be exactly the same as would have occurred with human soldiers.

This is rather like assuming cruise missiles will only be used in exactly those settings where spears would have been used in the past. Obviously, autonomous weapons are completely different from human soldiers and would be used in completely different ways – for example, as weapons of mass destruction.

Moreover, it seems unlikely that military robots will always have their “humanitarian setting” at 100%. One cannot consistently claim that the well-trained soldiers of civilized nations are so bad at following the rules of war that robots can do better, while at the same time claiming that rogue nations, dictators and terrorist groups are so good at following the rules of war that they will never use robots in ways that violate these rules.

Beyond these technological issues of compliance, there are fundamental moral questions. The Martens Clause of the Geneva Conventions – a set of treaties that provide the framework for the law of armed conflict – declares that, “The human person remains under the protection of the principles of humanity and the dictates of public conscience.” It’s a sentiment echoed by countries such as Germany, which has said it “will not accept that the decision over life and death is taken solely by an autonomous system”.

At present, the public has little understanding of the state of technology and the near-term possibilities, but this will change once video footage of robots killing unarmed civilians starts to emerge. At that point, the dictates of public conscience will be very clear but it may be too late to follow them.

Robots of mass destruction

The primary strategic impact of autonomous weapons lies not so much in combat superiority compared to manned systems and human soldiers, but in their scalability. A system is scalable if one can increase its impact just by having lots more of it; for example, as we scale nuclear bombs from tons to kilotons to megatons, they have much more impact. We call them weapons of mass destruction for a good reason. Kalashnikovs are not scalable in the same sense. A million Kalashnikovs can kill an awful lot of people, but only if carried by a million soldiers, who require a huge military-industrial complex to support them – essentially a whole nation-state.

A million autonomous weapons, on the other hand, need just a few people to acquire and program them – no human pilots, no support personnel, no medical corps. Such devices will form a new, scalable class of weapons of mass destruction with destabilizing properties similar to those of biological weapons: they tip the balance of power away from legitimate states and towards terrorists, criminal organizations, and other non-state actors. Finally, they are well suited for repression, being immune to bribery or pleas for mercy.

Autonomous weapons, unlike conventional weapons, could also lead to strategic instability. Autonomous weapons in conflict with other autonomous weapons must adapt their behaviour quickly, or else their predictability leads to defeat. This adaptability is necessary but makes autonomous weapons intrinsically unpredictable and hence difficult to control. Moreover, the strategic balance between robot-armed countries can change overnight thanks to software updates or cybersecurity penetration. Finally, many military analysts worry about the possibility of an accidental war – a military “flash crash”.

Where now?

The UN has already held several meetings in Geneva to discuss the possibility of a treaty governing autonomous weapons. Ironing out the details of a treaty will be quite a challenge, though not impossible. Perhaps more complicated are the issues of treaty verification and diversion of dual-use technology. Experience with the Biological and Chemical Weapons Conventions suggests that transparency and industry cooperation will be crucial.

The pace of technological advances in the area of autonomy seems to be somewhat faster than the typical process of creating arms-control treaties – some of which have been many decades in development. The process at present is balanced on a knife-edge: while many nations have expressed strong reservations about autonomous weapons, others are pressing ahead with research and development. International discussions over the next 12 to 18 months will be crucial. Time is of the essence.

Author: Stuart Russell is a Professor of Computer Science at the University of California, Berkeley. He is participating in the World Economic Forum’s Annual Meeting in Davos.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
