4 rules to stop governments misusing COVID-19 tech after the crisis

How do we limit the use, and misuse, of our data once this crisis recedes? Image: Reuters/Francis Mascarenhas

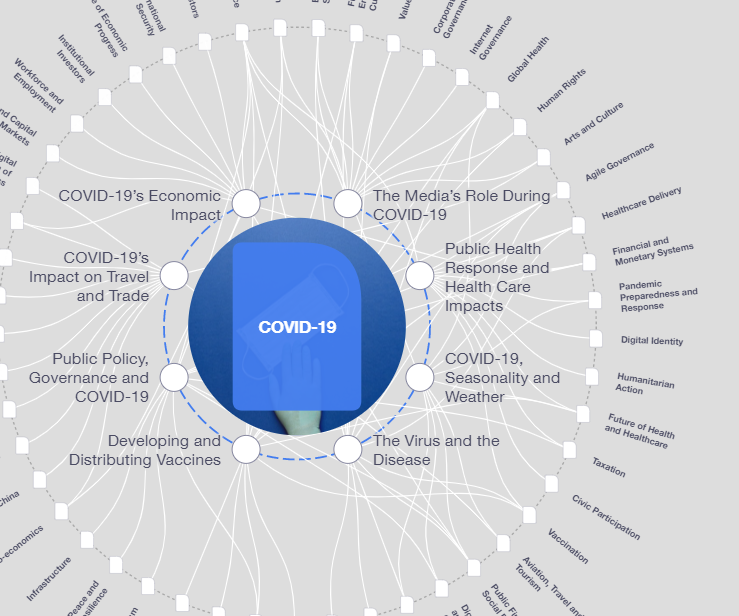

• Most people concede that public health currently trumps individual rights when it comes to the use of coronavirus data.

• We must consider the use of this data, and the related technologies, once the crisis recedes.

• Technology-driven responses to crises must be underpinned by human rights.

As COVID-19 continues its global surge, a number of governments, scientists and technologists have been exploring how technology, including artificial intelligence, could be used to help “flatten” the novel coronavirus’s curve.

To date, the most prominent examples of technology-driven solutions to the pandemic have focused on enhancing public health surveillance. Around the world, in countries including China, India, Israel and Singapore and throughout Europe, governments have availed themselves of emergency measures to collect data from CCTV cameras, cell phones and credit-card transactions in order to track infected patients, their movements and their encounters.

The information gathered about disease exposure can be used to implement – and enforce – targeted social distancing measures based on perceived level of risk. Without a compelling public health justification, this can cause a disproportionate negative impact on human rights. Under usual circumstances, governments would require an individual’s permission or court orders to obtain user data from telecommunications or tech companies.

In the current pandemic environment, human rights law recognizes, and most of us would readily concede, that temporary limitations on privacy and the freedom of movement may be necessary to protect the health and safety of hundreds of millions. Governments, universities and companies have been working to enable COVID-19 testing and contact tracing in communities. Motivating these efforts is the belief that with better understanding of who has been infected, and who has been in close proximity to infected persons, we should be able to limit the spread of the disease more effectively and more efficiently. Yet before deploying related technological tools broadly, we need to understand and address the immense risks that they can pose.

The question before us is not just the public health benefit of increased surveillance and loss of privacy during a pandemic emergency. We must also consider how we can limit the use, and misuse, of associated data and tools once this crisis recedes. Unless we exercise great care, broad societal acceptance of technological solutions that compromise privacy in times of emergency can generate new tools for government tracing and tracking of citizens’ movements and interactions in ordinary times, creating new systems of government power with profound and enduring consequences for human rights and the functioning of our democracies. We believe it is essential that technologies deployed today to facilitate testing and contact tracing include strict limitations on data collection and storage and on how that data can be used (especially in ways that might harm or disadvantage an individual, for example through use in law enforcement). Additionally, robust use of strong encryption technologies (in case data is misplaced or stolen) and strict time limits on permitted uses are needed, so that today’s protection of citizens does not become their scourge once COVID-19 is behind us.

That is why we need clear rules for what can and cannot be done with sensitive information like health or surveillance data during emergency circumstances.

In order to ensure that technology-driven responses to COVID-19 respect human rights and in support of the many recommendations made by the global human rights community, the World Economic Forum’s Global Future Council on Human Rights and the Fourth Industrial Revolution recommends:

1. States recognize international human rights law as the foundation for technology governance

The international human rights law framework provides the normative foundation for understanding the risks and challenges associated with the design, development and deployment of digital technologies. This is even more important to emphasize when governments consider using digital technologies to limit rights and freedoms.

Government-mandated actions that limit human rights and freedoms may only be justified if they are necessary and proportionate to the need. Actions that meet these criteria in the context of COVID-19 emergency measures should be acknowledged and explained to the public. Furthermore, where digital systems are used to inform social distancing policies, we must be transparent about the role they play in helping to decide which individuals or communities get to enjoy certain rights, including the freedom of movement or assembly, or the right to work.

2. COVID-19 responses should draw on international best practices

Guidance for data and tech governance related to COVID-19 use cases should draw on best practices. These include the Toronto Declaration, a global civil society response guiding the right to equality in AI, and more recent guidelines such as Access Now’s recommendations for a digital rights-respecting COVID-19 response and the European Commission’s guidelines for the use of technology and data to combat and exit from the COVID-19 crisis. Organizations should use these frameworks and share their learnings publicly.

Standards will be needed for the collection, use, grading, sharing and analysis of data for COVID-19 response efforts. These should include clear, enforced measures for data minimization, so that the collection of personal information is limited to what is relevant or necessary to accomplish the stated purpose, as well as rules on the de-identification or anonymization of personal information.

3. Data minimization and purpose limitation

Initiatives led by government agencies or public health administrations and authorities should adhere closely to the principle of data minimization. This principle ensures that entities collect and process only data that is strictly relevant and adequate for public health purposes and research, and that the data is not repurposed for advertising or marketing. Public health authorities should also afford special protection to the sensitive data of children. Similarly, an expiry date based on concrete markers must be set for all surveillance technologies implemented in response to the emergency. Such markers include the end of the crisis (the factors signalling that end must be explicit) and a finding that the intervention is minimally effective or ineffective, such that it doesn’t fulfill the requirements outlined above.

4. Transparency in reporting and tracking

In taking consequential actions to combat the virus – such as restricting movement between cities, mandating “shelter in place” policies, and shutting down national borders – governments can either increase trust in institutions or actively work to dismantle such trust. Transparency in reporting on these actions, in how decisions are made and on what kind of data, and in how cases are being tracked is critical to preventing panic and increasing trust. In South Korea, which saw a flattening of the curve despite a sudden surge in cases, Foreign Minister Kang explained that being open with people and securing their trust is vitally important: “The key to our success has been absolute transparency with the public – sharing every detail of how this virus is evolving, how it is spreading and what the government is doing about it, warts and all.”

According to the Open Data Institute, there are three additional reasons why governments should be open and transparent about the models and data they are employing to determine appropriate responses to the virus. First, people need to understand why governments are making the decisions they are making. Once again, this understanding leads to greater trust between citizens and the government. Second, scientists should be able to scrutinize these models to improve them. Finally, sharing models could help other governments around the world, particularly those with lower capacity, to respond more effectively.

Finally, it is crucial that governments continue to uphold the right to freedom of expression and to support open journalism on the virus.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
