Blockchain can help combat the threat of deepfakes. Here's how

Example of deepfakes: original on the left, altered 'deep fake' on the right. Image: WITNESS Media Lab.

- Deepfakes pose a threat to all aspects of society through malicious use of artificial intelligence.

- Blockchain technology has the power to restore trust and confidence in the digital ecosystem.

- Multistakeholder action is needed to strengthen digital governance and protect the victims of digital injustices.

The pervasiveness of social media, the emergence of sophisticated digital manipulation tools, and legislative and judicial systems that remain outdated, under-resourced and limited are widening the accountability gap between victims of digital harm and their pathway to digital justice.

Imagine waking up one morning to a swarm of texts and phone calls from your friends, family, and co-workers about a series of intimate images and videos of you that were posted on your social media platforms without your knowledge or consent. None of this content is real; all of it was deepfaked. How would you respond? Who manufactured this content in the first place? How do you get justice for this crime?

Unfortunately, there are no clear answers to any of these questions. As a result, individuals and historically marginalized groups have become the most exposed to deepfakes, non-consensual intimate imagery (colloquially referred to as “revenge pornography”), and data-driven harms as a whole, which makes addressing this problem more urgent than ever.

Deepfakes are becoming normalized – that’s a big problem

Deepfakes are synthetic representations created using modern AI (artificial intelligence) to depict speech and actions. The biggest threat that deepfakes pose is that they empower a culture of impunity in which bad actors face little to no consequences for the harm they cause to the victims of non-consensual intimate images. And with new and far more advanced “nudifying” websites that use deep-learning algorithms to strip women’s clothes off in photos without their consent, perpetrators are given more freedom, incentives, and tools to continue their harmful behaviour.

One such website has amassed more than 38 million hits since the start of 2021 and has become one of the most popular deepfake tools ever created. Photos and links from this website aren’t confined to the dark web, nor has the site been delisted from Google’s search engine index. It operates free from any constraints, and its content has since spread across major platforms such as Twitter, Facebook, and Reddit, to name but a few.

Women have been enduring the trauma of deepfakes for years. In fact, of the 85,000 deepfakes circulating online, over 99% are pornographic deepfakes of women. Though women make up about half the world’s population, the ways in which online technologies such as deepfakes and disinformation target them and their stories are overlooked and left inadequately addressed by law and policymakers.

High-profile cases

Take, for example, the 2018 case of Rana Ayyub, an investigative journalist who was targeted with a pornographic deepfake video that aimed to suppress her. The video ended up on millions of mobile phones in India, and Ayyub ended up in the hospital with anxiety and heart palpitations. Neither the Indian government nor social media networks acted until special rapporteurs from the United Nations intervened and called for Ayyub’s protection.

“I used to be very opinionated… Now I’m much more cautious about what I post online… I’m someone who is very outspoken, so to go from that to this person has been a big change.”

There is also the 2017 case of Svitlana Zalishchuk, then a member of the Ukrainian Parliament, whose public image was tarnished by “cheap fakes” – poorly edited photos that sexualized and demeaned her and attempted to drive her out of public life. It is almost certain the campaign against Zalishchuk was a Kremlin operation.

There are numerous additional cases like these, and they multiply as the ease and accessibility of these emerging technologies develop unchecked. Just last year, it was discovered that users of a Telegram channel were paying $1.25 to generate nude images of more than 680,000 women, mostly in Eastern Europe and Russia, without their knowledge or consent.

In a less high-profile but no less significant case, an American YouTuber and ASMR artist, Gibi, has been repeatedly targeted by deepfakes and online harassment. The issue became so bad that she had to change her name, move out of her home and be extremely vigilant when revealing any potentially identifying information about herself. She has since discovered several online businesses in which others are profiting from selling her image without her consent in deepfakes and other fake, often pornographic, material. She has even been approached by a company offering to remove the deepfakes of her, at $700 a video.

“I can't think of a single organization that is equipped to deal with this, lawmakers and different governments just let it go. I'd like to see perpetrators brought to justice and know that it's a crime.”

Given that the digital world is an extension of our physical reality, what is punishable in the physical world should also be punishable in the virtual world. However, when it comes to prosecuting crimes committed through digital means, two of the biggest obstacles are the failure to adapt traditional crimes to new circumstances and the difficulty of uncovering the perpetrator(s) behind the digital crime. Blockchain technology is being researched and developed as a way to help address this latter issue.

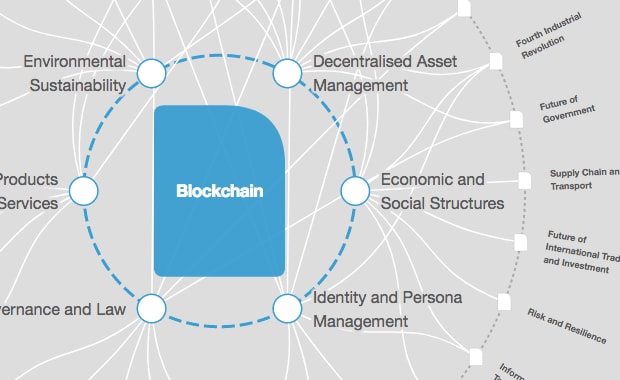

How is the World Economic Forum promoting the responsible use of blockchain?

How can blockchain help?

Blockchain systems use a decentralized, immutable ledger to record information in a way that’s continuously verified and re-verified by every entity that uses it, making it nearly impossible to change information after it’s been created. One of the most well-known applications of blockchain is to manage the transfer of cryptocurrencies like Bitcoin. But blockchain’s ability to provide decentralized validation of authenticity and a clear chain of custody makes it potentially effective as a tool to track and verify not just financial resources, but many other forms of content.

To understand the potential of blockchain in combatting deepfakes, it helps to understand three basics: hashing algorithms, cryptographic signatures (a way of proving who produced a piece of data and that it has not been changed since it was signed) and blockchain timestamping (a secure way of tracking the creation and modification time of a document).

While cryptographic algorithms and hashing are not unique to blockchain, they become more powerful once combined with other features of blockchain technology, such as its immutable nature.

Think of a cryptographic hash as a practically unique fingerprint for a specific piece of content or file. A cryptographic hashing algorithm takes an input of any size and creates an output of fixed length. This output is called a hash. Anyone else with identical data can use the same algorithm to generate the same hash, and comparing hashes tells you whether you hold the same information. Even a small change to the data produces a different hash, which is useful in proving that a piece of text, a file, or other content has not been altered over time.
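The fingerprint idea can be sketched in a few lines of Python. This is only an illustration: it assumes SHA-256 as the hashing algorithm (the article does not name one), and the byte strings are placeholders standing in for real image or video files.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


# Placeholder content; in practice these would be the bytes of a media file.
original = b"Video frame bytes would go here"
identical_copy = b"Video frame bytes would go here"
altered = b"Video frame bytes would go here!"  # one extra byte

# Inputs of very different sizes still produce digests of the same length.
assert len(fingerprint(b"")) == len(fingerprint(original * 1000)) == 64

# Identical data always yields the same fingerprint.
print(fingerprint(original) == fingerprint(identical_copy))  # True

# Any change, however small, yields a different fingerprint.
print(fingerprint(original) == fingerprint(altered))  # False
```

Note that a matching hash only shows that two copies are byte-for-byte identical. A legitimately re-encoded or resized copy would also produce a different hash.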

Add to this the immutability that blockchain brings – the ability for a record on a blockchain to stay permanent and irreversible – and the hash or “fingerprint” cannot be modified, becoming a tamper-proof reference to the digital content at a specific point in time. Applied to deepfake identification, this means that hashes recorded on a blockchain network can help prove that images, videos, or other content have not been altered over time. By enabling provenance and traceability, blockchain technology can thus help to create an audit trail for digital content.
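To make the link between hashing and immutability more concrete, here is a toy sketch of a hash-linked ledger. It is not a real blockchain: there is no network, consensus or replication, and the names `Block`, `append` and `is_intact` are hypothetical, chosen only for this illustration. It shows just one property, namely that each record stores the hash of the previous one, so rewriting an earlier record breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass(frozen=True)
class Block:
    index: int
    content_hash: str  # fingerprint of the media file
    timestamp: str     # when the fingerprint was recorded (supplied by caller)
    prev_hash: str     # hash of the previous block, linking the chain

    def block_hash(self) -> str:
        payload = json.dumps(
            [self.index, self.content_hash, self.timestamp, self.prev_hash]
        ).encode()
        return hashlib.sha256(payload).hexdigest()


def append(chain: list[Block], content: bytes, timestamp: str) -> None:
    """Record the fingerprint of `content` as a new block at the end."""
    prev = chain[-1].block_hash() if chain else GENESIS_PREV
    digest = hashlib.sha256(content).hexdigest()
    chain.append(Block(len(chain), digest, timestamp, prev))


def is_intact(chain: list[Block]) -> bool:
    """Check that every block still points at the hash of its predecessor."""
    expected_prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block.index != i or block.prev_hash != expected_prev:
            return False
        expected_prev = block.block_hash()
    return True


chain: list[Block] = []
append(chain, b"original video bytes", "2021-10-01T09:00:00Z")
append(chain, b"second video bytes", "2021-10-02T09:00:00Z")
print(is_intact(chain))  # True

# Rewriting an earlier record breaks the link stored in the next block.
chain[0] = Block(0, hashlib.sha256(b"doctored video bytes").hexdigest(),
                 chain[0].timestamp, chain[0].prev_hash)
print(is_intact(chain))  # False
```

In this toy version, only edits to a record that already has a successor are detected, and nothing stops someone from rebuilding the whole list. Real blockchain networks address this by replicating the chain across many participants who must agree on its contents.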

Together with conventional technologies such as digital signatures and standard encryption to ensure nonrepudiation, timestamping on the blockchain can supply evidence useful in identifying deepfakes, for example by confirming the date of an item’s origin or showing that the content was in someone’s possession at a particular time. Such timestamping is already in use today across a variety of industries, including for the provenance of supply chain goods and for notaries verifying signatures on contracts.
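The timestamping idea can be sketched as a simple proof-of-existence lookup. This is a minimal illustration under stated assumptions: the in-memory `registry` dictionary stands in for records anchored on a blockchain, `register` and `first_seen` are hypothetical helper names, and the timestamp is passed in by the caller rather than attested by a network. Digital signatures are deliberately left out, since they would need an asymmetric cryptography library.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional

# Hypothetical stand-in for on-chain records:
# content hash -> time the hash was first recorded.
registry: dict[str, datetime] = {}


def register(content: bytes, when: datetime) -> str:
    """Record the content's fingerprint with a timestamp; return the hash."""
    digest = hashlib.sha256(content).hexdigest()
    registry.setdefault(digest, when)  # keep the earliest record only
    return digest


def first_seen(content: bytes) -> Optional[datetime]:
    """Return when this exact content was first recorded, or None."""
    return registry.get(hashlib.sha256(content).hexdigest())


captured = b"bytes of the video as captured"
register(captured, datetime(2021, 10, 1, 9, 0, tzinfo=timezone.utc))

print(first_seen(captured))  # 2021-10-01 09:00:00+00:00
print(first_seen(b"bytes of a manipulated copy"))  # None
```

A hit shows that this exact content existed at the recorded time. A miss shows only that this exact sequence of bytes was never registered, which is not by itself proof of manipulation.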

For example, the photo and video authentication company Truepic notarizes and timestamps content on the Bitcoin and Ethereum blockchains to establish a chain of custody from capture to storage. Because blockchain records are immutable, this adds assurance that the record itself has not been changed, and it provides a verifiable history of the digital content shared. It can help consumers of digital content to verify the content’s background.

Why it's not a silver bullet

It is worth pointing out that any such benefits blockchain brings are always design and implementation specific and revolve around several architectural choices. Timestamping can help with the proof of existence of a document but does not prove ownership (which becomes subject to a number of considerations including, for instance, the governing intellectual property agreements).

There are limits to what blockchain can do in combatting deepfakes and uncovering the perpetrators behind these harms. Combining blockchain with other emerging technologies, such as AI, can strengthen its ability to identify deepfakes. However, technological problems cannot be resolved exclusively with technological solutions.

For blockchain to help effectively, cohesive partnerships within international communities of governmental, corporate, civil society and technical leaders committed to shaping the governance of digital content creation and consumption are paramount. Complementary policy, civic engagement strategies, and cultural and social change are also critical to reduce the reach and scale of deepfake technology. By providing greater transparency into the lifecycle of content and bridging the accountability gap for victims of digital injustices, blockchain could offer a potential mechanism to restore trust in our digital ecosystem.

The World Economic Forum has published a white paper, Pathways to Digital Justice, which includes a resource guide for victims of deepfakes.

The World Economic Forum’s Global Future Council on Data Policy is leading a multistakeholder initiative aimed at exploring these issues, Pathways to Digital Justice, in collaboration with the Global Future Council on Media, Entertainment and Sport and the Global Future Council on AI for Humanity. To learn more, contact Evîn Cheikosman at evin.cheikosman@weforum.org.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
