To beat deepfakes, we need to prove what is real. Here's how.

Deepfake tech on show at Davos this year Image: REUTERS/Denis Balibouse

- The growth of image manipulation techniques is eroding both trust and informed decision-making.

- Although it is impossible to ID and prove fakes in real time, we can ascertain which images are truthful.

- Software already exists that can verify images' provenance - the next step will be hardware-based.

Today, the world captures over 1.2 trillion digital images and videos annually - a figure that increases by about 10% each year. Around 85% of those images are captured using a smartphone, a device carried by over 2.7 billion people around the world.

But as image capture rates increase, so does the rate of image manipulation. In recent years the level and speed of audiovisual (AV) manipulation have surprised even the most seasoned experts. The advent of 'deepfakes' - synthetic media often produced with generative adversarial networks (GANs) - has captured the majority of headlines because of their ability to completely undermine any confidence in visual truth.

And even if deepfakes never proliferate in the public domain, the world has nevertheless been upended by 'cheapfakes' - a term that refers to more rudimentary image manipulation methods such as photoshopping, rebroadcasting, speeding and slowing video, and other relatively unsophisticated techniques. Cheapfakes have already been the main tool in the proliferation of disinformation and online fraud, which have had significant impacts on businesses and society.

The growth of image manipulation has made it more difficult to make sound decisions based on images and videos - something businesses and individuals are doing at an increasing rate. This includes personal decisions, such as making purchases on peer-to-peer marketplaces, meeting people through online dating, or voting; and business decisions, such as fulfilling an insurance claim or executing a loan. Even globally important decisions are affected, such as the international response to images and videos showing atrocities or egregious violence in conflict zones or non-permissive areas.

Each of these very different use cases highlights two contradictory trends: we rely on images and videos more than ever before, but we trust them less than we ever have. This is a significant gap that is growing by the day, and it has forced governments and technologists to invest in technology for detecting fake images.

Unfortunately, there is no sustainable way to detect fake images in real time and at scale. This sobering fact will likely not change anytime soon.

There are several reasons for this. First, almost all metadata is lost, stripped or altered as an image travels through the internet. By the time that image hits a detection system, it will be impossible to reproduce lost metadata - and therefore details like the original date, time, and location of an image will likely remain unknown.


Second, almost all digital images are instantly compressed and resized once they are shared across the internet. Some of these alterations are benign (such as recompression); others may be significant and intended to deceive the content consumer. In either case, the recompression and resizing of images as they are uploaded and transmitted makes it difficult, if not impossible, to detect pixel-level manipulations, because fidelity is lost in the actual photo.
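To make the loss-of-fidelity point concrete, here is a toy sketch in Python. It is not a real codec: actual formats such as JPEG quantize frequency coefficients rather than raw pixel values, and the function names and the step size of 16 are assumptions chosen purely for illustration. The idea it shows is only that a coarse, lossy step can erase the small differences a forensic tool would need to see.

```python
def quantize(pixels, step=16):
    """Toy lossy step: snap each pixel value down to a multiple of `step`.

    Illustrative assumption only; real codecs quantize frequency
    coefficients, not raw pixel values.
    """
    return [(value // step) * step for value in pixels]


def count_differences(a, b):
    """Count the positions where two equal-length pixel rows differ."""
    return sum(1 for x, y in zip(a, b) if x != y)


# One row of grayscale pixels, and a copy with a single subtle edit.
original = [100, 101, 102, 103, 104, 105, 106, 107]
tampered = list(original)
tampered[3] = 105  # small pixel-level manipulation

# Before the lossy step the edit is visible as a difference;
# after it, both rows collapse to the same coarse values.
diff_before = count_differences(original, tampered)
diff_after = count_differences(quantize(original), quantize(tampered))
```

In this toy case the edit is visible when the two rows are compared directly, but both rows become identical once they pass through the lossy step, so nothing remains for a detector to find.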

Third, when an automated or machine-learning-based detection technique is identified and democratized, bad actors will quickly identify a workaround in order to remain undetectable.

What makes detection even more difficult is social media, which disseminates content - fake or real - in seconds. Those intent on deceiving can inject fake content onto social media platforms instantly. Even successful debunking would likely come too late to stop the fake content from spreading, and by then cognitive dissonance and bias would have a greater influence on consumers' decisions.

So if detection will not work, how do we arm people, businesses and the international community with the tools to make better decisions? Through images' provenance. If the world cannot prove what is fake, then it must prove what is real.

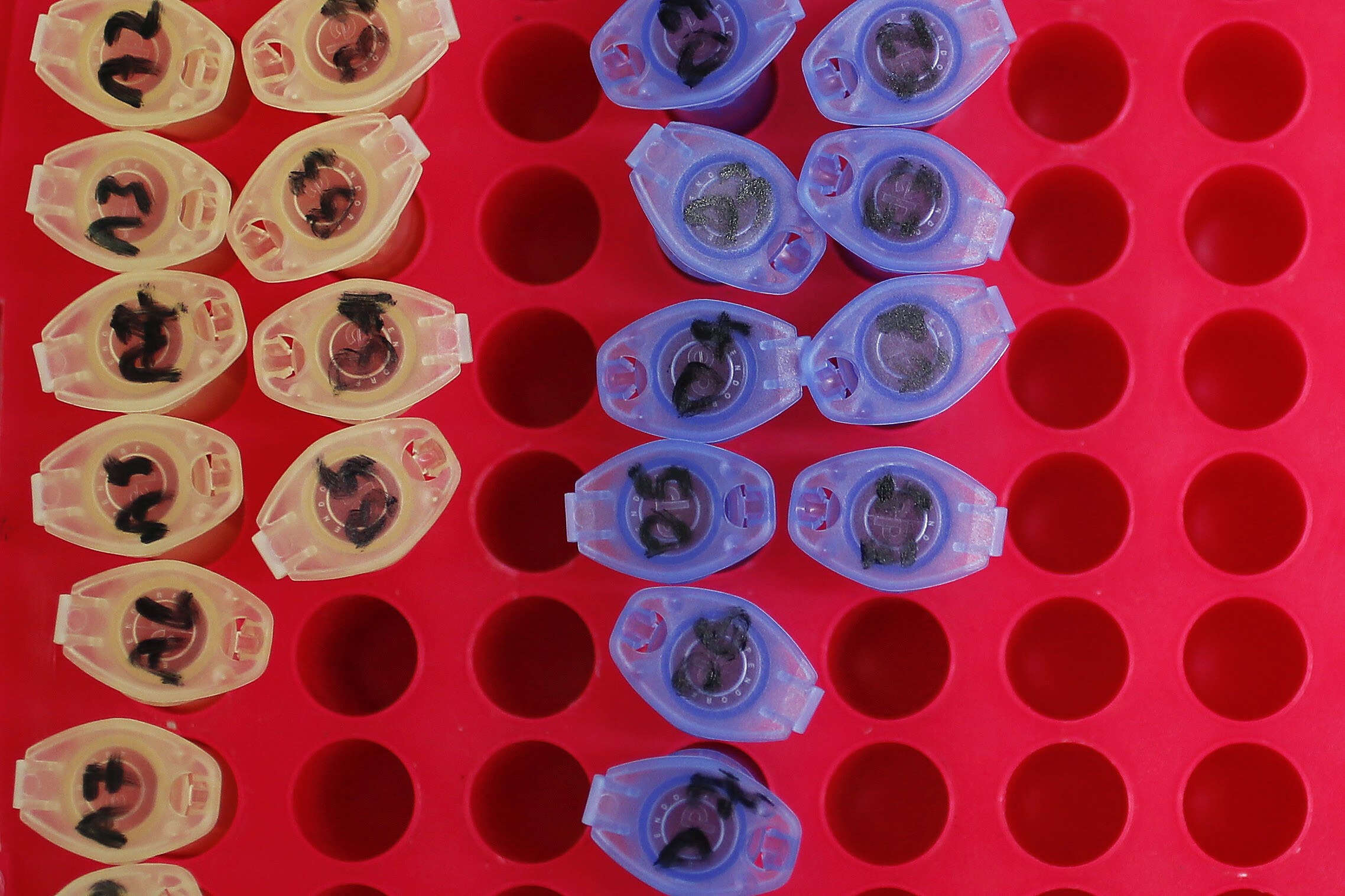

Today, technology does exist - such as Controlled Capture, software developed by my company, Truepic - that is able to both establish the provenance of images and to verify critical metadata at the point of capture. This is possible thanks to advances in smartphone tech, cellular networks, computer vision and blockchain. However, to truly restore trust in images on a global level, the use of verified imagery will need to scale beyond software to hardware.

To achieve this ambitious goal, image veracity technology will need to be embedded into the chipsets that power smartphones. Truepic is working with Qualcomm Technologies, the largest maker of smartphone chipsets, to demonstrate the viability of this approach. Once complete, this integration would allow smartphone makers to add a 'verified' mode to each phone's native camera app - thus putting verified image technology into the hands of hundreds of millions of users. The end result will be cryptographically signed images with verified provenance, empowering decision-makers to make smart choices on a personal, business or global scale. This is the future of decision-making in the era of disinformation and deepfakes.
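For readers who want a feel for what "cryptographically signed at the point of capture" can mean, the following is a minimal Python sketch. It is not Truepic's or Qualcomm's implementation, whose details are not described here. Every name in it (`DEVICE_KEY`, `sign_capture`, `verify_capture`) is hypothetical, and it uses a keyed hash (HMAC-SHA256) from the Python standard library as a stand-in; a real system would more plausibly use a public-key signature with a private key held in secure hardware, so that anyone could verify an image without being able to forge one.

```python
import hashlib
import hmac
import json

# Hypothetical device secret, for illustration only. In a hardware design
# this would sit inside the chipset's secure area, out of reach of apps.
DEVICE_KEY = b"demo-device-key-not-for-production"


def sign_capture(image_bytes, metadata, key=DEVICE_KEY):
    """Bind the image bytes and capture metadata together under one tag."""
    record = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "metadata": metadata,
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["tag"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_capture(image_bytes, record, key=DEVICE_KEY):
    """Recompute the tag from the image and metadata; compare to the claim."""
    claimed = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "metadata": record["metadata"],
    }
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


# Usage: sign at capture, then check later copies against the record.
photo = b"placeholder-image-bytes"
meta = {"time": "2020-01-01T12:00:00Z", "lat": 46.8, "lon": 9.83}
record = sign_capture(photo, meta)
```

Under these assumptions, `verify_capture(photo, record)` succeeds only if both the pixels and the recorded date, time and location are unchanged. Altering a single byte of the image, or editing the metadata, makes verification fail, which is the property that lets a decision-maker prove what is real rather than chase what is fake.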


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
