The book character gender gap: AI finds more men in fiction than women

The ‘character gender gap’ narrowed when the books were written by women. Image: Unsplash/Radu Marcusu

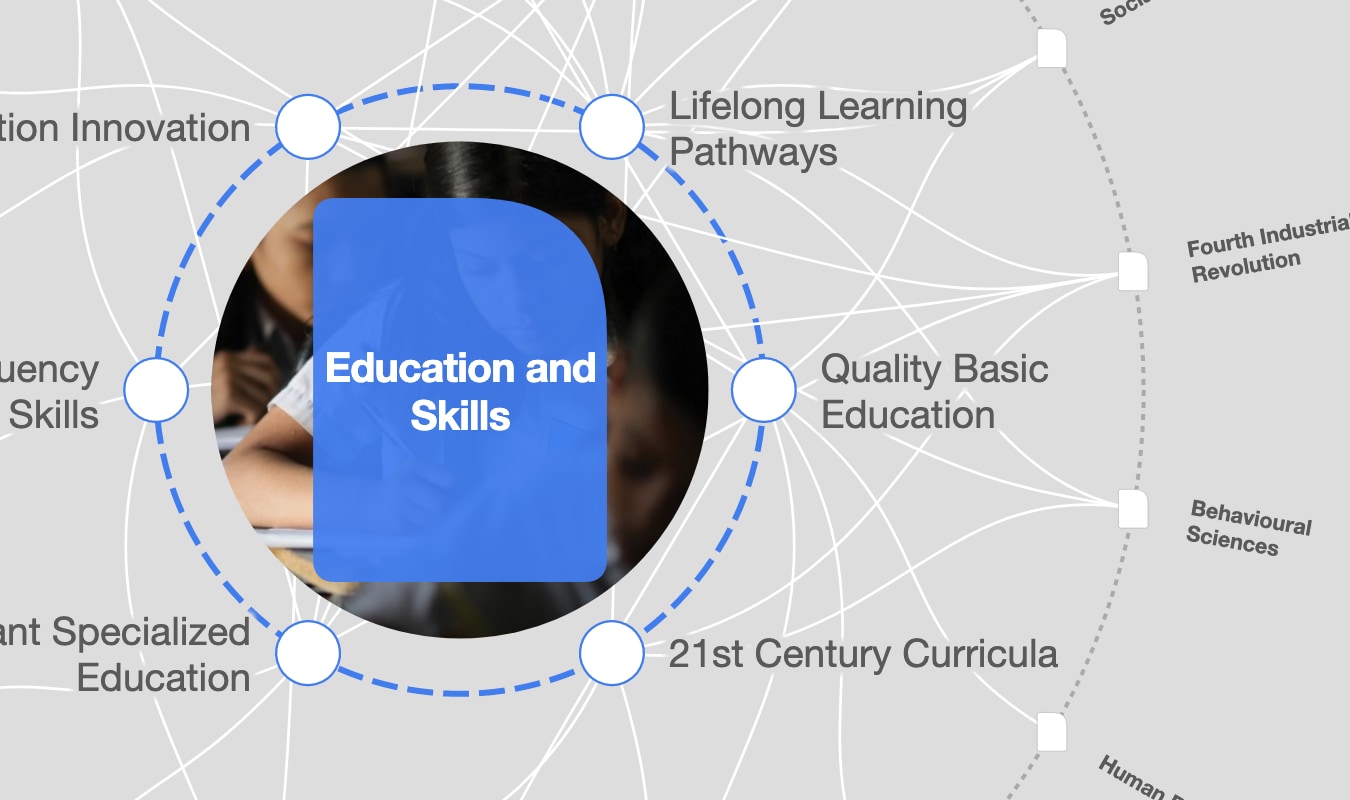

- Researchers have found men outnumber women 4:1 in works of literature.

- They used natural language processing, a branch of artificial intelligence, to analyze mentions of gender pronouns.

- The cumulative effect of such unconscious gender bias can contribute to the gender pay gap and fewer women being in leadership positions.

“Reader, I married him.” So says Jane Eyre, the eponymous heroine of Charlotte Brontë’s classic 1847 romance - a line made famous by the hurdles she must clear to reach that point.

But some 175 years and all the progress towards gender equality later, there are four times as many Edward Fairfax Rochesters as there are Jane Eyres, according to an analysis that used artificial intelligence (AI).

It also offered an insight into the adjectives used to describe women - and for now, let’s just say Brontë would not have approved.

Gender bias in books

Researchers from the University of Southern California’s (USC) Viterbi School of Engineering used a machine learning tool to analyze 3,000 books digitized on Project Gutenberg, including novels, short stories and poetry, ranging from adventure and science fiction to mystery and romance.

Mayank Kejriwal, research lead at USC’s Information Sciences Institute (ISI), is an expert in natural language processing (NLP) and was inspired by work on implicit gender biases.

Together with co-author Akarsh Nagaraj, a machine learning engineer at Meta, Kejriwal used named entity recognition (NER), an NLP method used to extract gender-specific characters.

“One of the ways we define this is through looking at how many female pronouns are in a book compared to male pronouns,” says Kejriwal. They also looked at how many of a book's main characters are female, to work out whether male characters were central to the story.
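The pronoun comparison Kejriwal describes can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' code: the pronoun lists, the function names and the sample sentence are all assumptions made for the example, and a real study would need to handle far messier text.

```python
import re
from collections import Counter

# Assumed pronoun lists for illustration; the study's exact lists are not given.
FEMALE_PRONOUNS = {"she", "her", "hers", "herself"}
MALE_PRONOUNS = {"he", "him", "his", "himself"}


def pronoun_counts(text):
    """Return (female, male) pronoun counts for a piece of text."""
    # Lowercase the text and keep alphabetic runs as tokens.
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(tokens)
    female = sum(counts[p] for p in FEMALE_PRONOUNS)
    male = sum(counts[p] for p in MALE_PRONOUNS)
    return female, male


def male_to_female_ratio(text):
    """Return male pronouns per female pronoun, or None if there are no female pronouns."""
    female, male = pronoun_counts(text)
    if female == 0:
        return None
    return male / female


# Hypothetical sample sentence, not taken from any book in the study.
sample = "He said his horse was lame. She laughed, and he gave him the reins himself."
print(pronoun_counts(sample))
print(male_to_female_ratio(sample))
```

Run over a whole book, a ratio near 4 would correspond to the 4:1 gap the study reports across its collection.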

Mind the character gender gap

While other research has found women read more than men, mentions of men outnumbered mentions of women 4:1 in the USC study. Tellingly, this ‘character gender gap’ narrowed when the books were written by women.

“It clearly showed us that women in those times would represent themselves much more than a male writer would,” says Nagaraj.

The NLP technology also allowed researchers to find adjective associations with gender-specific characters, which they said deepened their understanding of bias and its pervasiveness in society.

“Even with misattributions, the words associated with women were adjectives like ‘weak’, ‘amiable’, ‘pretty’, and sometimes ‘stupid’,” says Nagaraj. “For male characters, the words describing them included ‘leadership’, ‘power’, ‘strength’, and ‘politics’.”
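One crude way to picture these word associations is to count which descriptive words share a sentence with female or male pronouns. The sketch below is an assumption-laden illustration rather than the study's method: the word list is hand-picked from the examples Nagaraj quotes, the function name and sample text are invented, and sentence-level co-occurrence will produce exactly the kind of misattributions he mentions.

```python
import re
from collections import Counter

# Assumed pronoun lists for illustration.
FEMALE = {"she", "her", "hers", "herself"}
MALE = {"he", "him", "his", "himself"}

# Hand-picked from the words quoted in the article; a real pipeline would
# find descriptive words automatically rather than rely on a fixed list.
DESCRIPTORS = {"weak", "amiable", "pretty", "stupid",
               "leadership", "power", "strength", "politics"}


def descriptor_associations(text):
    """Count descriptors that appear in the same sentence as gendered pronouns."""
    assoc = {"female": Counter(), "male": Counter()}
    # Naive sentence split on ., ! and ?
    for sentence in re.split(r"[.!?]+", text.lower()):
        tokens = re.findall(r"[a-z]+", sentence)
        found = [t for t in tokens if t in DESCRIPTORS]
        if any(t in FEMALE for t in tokens):
            assoc["female"].update(found)
        if any(t in MALE for t in tokens):
            assoc["male"].update(found)
    return assoc


# Hypothetical sample text, not taken from any book in the study.
sample = "She was pretty and amiable. His strength and power were known to all."
result = descriptor_associations(sample)
print(dict(result["female"]))
print(dict(result["male"]))
```

A sentence that mentions both a man and a woman would credit its descriptors to both, which is why a count like this is only a rough signal.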

Kejriwal hopes the study will highlight the importance of interdisciplinary research and, more specifically, using AI technology to turn a spotlight on social issues and inequalities.

Why fair representation in literature matters

As Jessica Nordell told the World Economic Forum in an interview for her book The End of Bias: “We live in a culture and absorb information from that culture about which categories are relevant, which categories are salient and what those categories mean. And we absorb a lot of associations and stereotypes and kinds of cultural knowledge about those categories.”

“Gender bias is very real, and when we see females four times less in literature, it has a subliminal impact on people consuming the culture,” says Kejriwal. “We quantitatively revealed an indirect way in which bias persists in culture.”

Over time the cumulative effect of unconscious gender bias can add up, says Nordell - contributing to the gender pay gap and fewer women being in leadership positions.

It will take 135.6 years to close the gender gap worldwide, according to the Forum’s Global Gender Gap Report 2021, which doesn’t fully reflect the impact of the COVID-19 pandemic.

It found “a persistent lack of women in leadership positions”, with women representing just 27% of all manager positions.

Seeing women represented equally in literature could be just one way to reduce unconscious bias and help close the gender gap.

“Our study shows us that the real world is complex but there are benefits to all different groups in our society participating in the cultural discourse,” notes Kejriwal. “When we do that, there tends to be a more realistic view of society.”

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
