How can we design AI agents for a world of many voices?

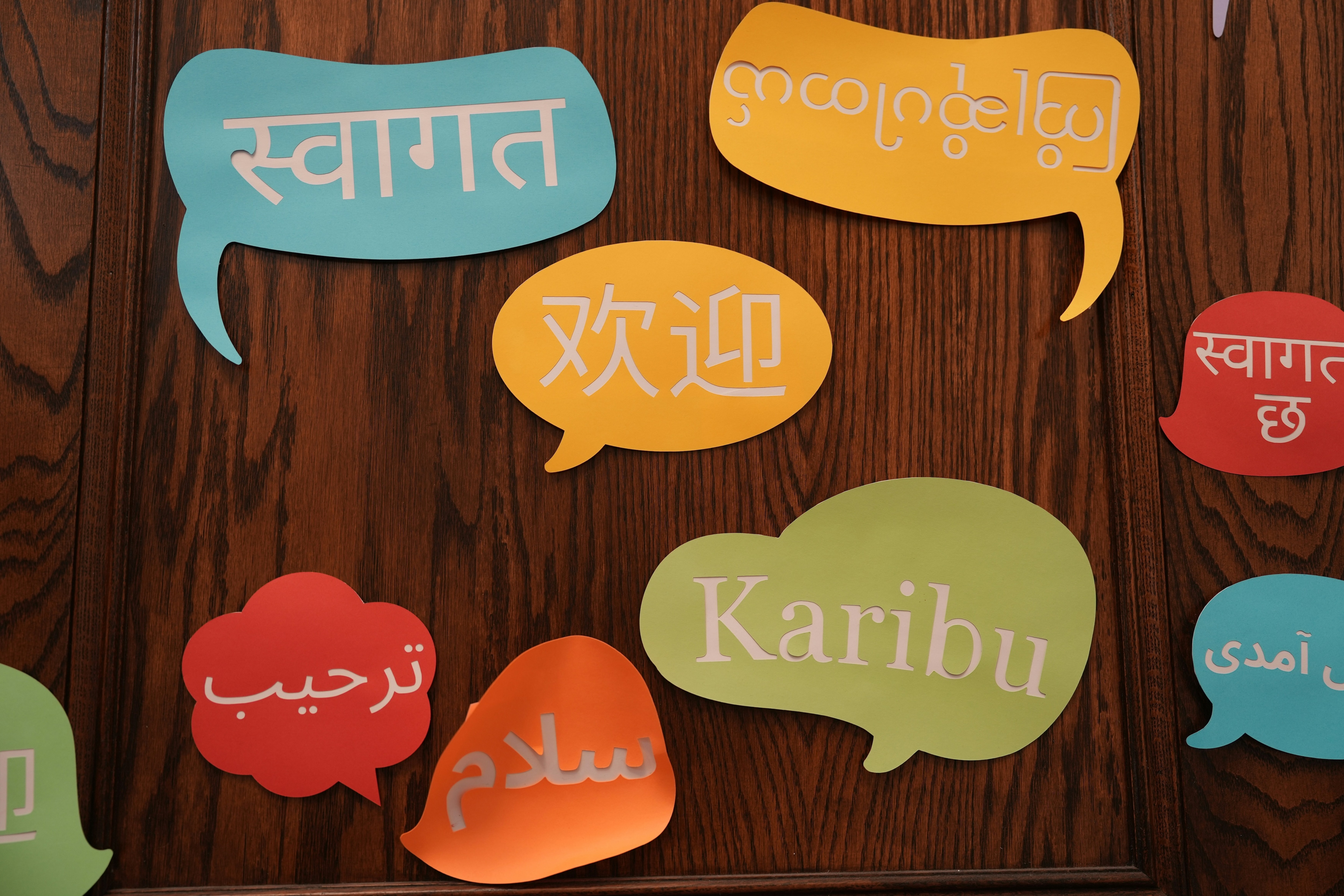

AI agents must embrace diverse languages to avoid reinforcing inequalities. Image: Unsplash/zhendong wang

- When AI agents ignore linguistic diversity or prioritize dominant languages, they risk erasing identity and excluding communities, reinforcing existing social and economic inequalities.

- Most AI models are trained primarily on English and formal language, causing them to misunderstand dialects, accents, code-switching, and emotional nuance.

- The World Economic Forum’s 2026 Annual Meeting in Davos, Switzerland, will underscore the growing importance of open, impartial dialogue under the theme, "The Spirit of Dialogue."

We’re living through an era of widening divides. According to the United Nations, two-thirds of the global population now live in countries where inequality is rising and trust in organizations is falling. In 2023, the richest 1% owned nearly half the world’s wealth. Meanwhile, almost 40% of adults held less than 1%.

The gap between who benefits and who doesn’t is growing across borders, sectors and systems. But inequality isn’t just economic. It’s also linguistic.

More than 6 billion people (nearly 80% of the world’s population) speak a language other than English as their native or second language – a reality that shapes who gets access to healthcare, education, services and opportunity.

And as the world becomes more interconnected – and more digitally mediated – language becomes a silent fault line in how people are included or excluded.

That’s the challenge AI agents now inherit. As agents become the first point of contact between people and organizations, they carry a serious responsibility: the weight of helping people feel seen and heard.

The systems we build today will shape how we connect tomorrow. So the question is: how do we design them to understand each one of us?

Language is identity

Every conversation carries profound cultural weight. The way people speak – the language they choose, the metaphors they select and the rhythm of their speech – reflects more than just linguistic quirks. It reveals deep-rooted history, social context and power dynamics.

You can see this clearly in New Zealand, where the Māori language is embedded in the land. Names for rivers, hills and forests map ancestral journeys and tribal relationships, living markers of memory and place. And because people still use Māori in schools, media, and public life, those meanings continue to evolve.

Strip that language away and you don’t just erase meaning; you erase belonging.

Research backs this up. Studies consistently show that language acts as a core symbol of identity. It shapes how people see themselves, how they connect to others and how cultures endure.

One study on Chinese transnational youth, for example, found that maintaining their native language, even while learning others, helped preserve emotional well-being and a sense of social belonging. In other words, language keeps identity intact, even in flux.

But that continuity is fading. Globalization continues to erode linguistic diversity and with it, the cultures those languages carry. UNESCO’s 2025 report calls for multilingual education to protect that diversity, emphasizing the need for flexible, culturally responsive language policies.

Because when we ignore how language works, we risk alienating communities and perpetuating the widening gap we’re trying to close. That’s the tension AI technology walks into.

Context is key for equitable AI

Too often, organizations treat language like a clean input-output problem when designing AI agents: parse the words, map the intent, generate a response. That might work for simple tasks. But in complex, high-stakes domains such as healthcare or financial services, flattening language can flatten subtleties.

And when context gets lost, equity goes with it.

In fact, studies show literal word-for-word translation can lose up to 47% of contextual meaning and more than half of the emotional nuance. And this challenge runs deeper than mistranslation.

Although the world has some 7,000 languages, most large language models (LLMs) are still trained predominantly on English, and that skews how systems understand and respond to conversations. Those biases can lead to harm.

You can see it in something as common as a support call.

Let’s say a person in Brazil reaches out to a financial services contact centre after receiving an eviction notice. She’s panicked, using regional Portuguese expressions and informal phrasing to explain her situation.

However, the AI agent, trained mostly on English and formal European Portuguese, doesn’t register the urgency. It treats her plea as a routine inquiry, offering a standard response and placing her case in the general queue. That failure reflects a bias built into the system’s assumptions about language, identity and who counts.

AI agents currently lack nuance

The consequences are even more serious in critical fields such as healthcare, where there's no room for error. A 2024 study on automatic speech recognition in clinical settings found that systems consistently underperformed for speakers with non-native accents and dialects.

This included tonal languages such as Vietnamese and varieties such as African American Vernacular English. The resulting transcription errors could lead to misdiagnoses, delayed care and other serious consequences, especially for patients already navigating systemic barriers.

These breakdowns reveal something fundamental: most AI agents weren’t built to reflect how people actually speak. These systems struggle with code-switching, dialects and informal phrasing because organizations didn’t build them for that kind of complexity. And without that, AI can’t serve the very people it’s meant to support.

To build better AI agents, meet people where they are.

Bake in cross-cultural norms

That said, AI has enormous potential to support cross-cultural collaboration.

Done right, AI agents can serve as bridges: translating across languages, holding institutional memory and enabling systems to respond faster, more fairly and with more empathy. But only if we build them with those goals in mind.

That starts with cultural intelligence because language isn’t neutral. People bring their full selves to a conversation: identity, history, emotion and expectation. And in multilingual or multicultural contexts, those layers multiply.

To build AI agents that actually work across cultures, you have to design for variation from the start. That means adapting to different norms across languages, regions and use cases, without defaulting to one-size-fits-all behaviour.

Maybe that looks like shifting tone in a customer support conversation in Japan, or recognizing indirect phrasing as politeness in Nigeria. That’s the difference between functional and respectful interaction.

To get there, you need to:

- Invest in low-resource languages.

- Partner with local linguistic communities.

- Train systems on authentic, representative data.

But building is only part of the work. We also need to rethink how we evaluate AI agents. Standard metrics such as word error rate or intent accuracy don't capture the full picture of real-world communication. We need better assessments of whether an AI agent understands emotional tone, context and conversational rhythm.

- Are its responses appropriate, not just correct?

- Can it distinguish urgency from frustration?

- Does it know when to escalate or when to stay silent?
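As a rough illustration of the evaluation point above, the Python sketch below reports word error rate separately for each speaker group instead of as a single average. The transcripts, group labels and function names are hypothetical, invented for illustration. It shows only how one aggregate score can hide a gap between groups; it does not measure tone, urgency or appropriateness.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


def wer_by_group(samples):
    """Average WER per group; samples are (group, reference, hypothesis) tuples."""
    scores = defaultdict(list)
    for group, reference, hypothesis in samples:
        scores[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(vals) / len(vals) for group, vals in scores.items()}


# Hypothetical transcripts, invented for illustration only.
SAMPLES = [
    ("group_a", "i received an eviction notice today",
     "i received an eviction notice today"),
    ("group_b", "i received an eviction notice today",
     "i received a conviction notice to"),
]

if __name__ == "__main__":
    for group, score in sorted(wer_by_group(SAMPLES).items()):
        print(f"{group}: WER = {score:.2f}")
```

A per-group breakdown like this is only a starting point. It can show where a system fails more often, but not whether a response was appropriate for the person on the other end.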

Cultural intelligence requires collaboration

Collaboration matters not just between engineers and linguists but also with the communities these systems aim to serve. That means building feedback loops into the design process. Because when people help shape the technology they use, trust follows.

Ultimately, when we build with one voice in mind, we reinforce inequity. However, when we build for many, we make inclusion possible so that AI agents become bridges, not barriers.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
