Better conversations can help reimagine AI regulation. These steps can help.

- Conversation is key to building trust regarding emerging technologies such as AI.

- Carefully planned engagements, which define what should be accomplished and how a range of people can contribute, can build trust in the long term.

AI and algorithms are everywhere, underpinning many parts of our daily lives, improving systems and increasing productivity. They also carry risk, however, potentially introducing new biases or worsening old ones, blocking candidates from jobs and even misidentifying crime suspects.

Because AI is ubiquitous, governments and businesses rely on social licence to explore new uses. Social licence means that communities consider governments, agencies or companies trustworthy enough to use AI in ways that may carry risks.

Having this trust means people believe that if something does go wrong, it will be quickly identified and fixed before harm is caused. Such trust can be built through conversations and robust engagement between the public, technologists and policy-makers.

Successful conversations on AI or other emerging technologies are multi-stage processes in which different players take on important roles at different points. As part of the World Economic Forum’s Reimagining Regulation for the Age of AI project, the project team and community developed a range of best practices and steps for designing a successful conversation on AI. For better, more robust conversations, these tips can help.

What’s needed for powerful engagements

Strong engagement forges trust and powers valuable conversations that help different parties fully understand each other’s needs and views. Good engagement takes several factors into account, including:

- Influence. Strong engagement operates with the understanding that participants have a mandate to drive change and an influence on policy-making and the decisions ultimately made. Participants may have different roles, including informing, consulting, involving, collaborating or empowering. It’s important that designers are clear about the role they want participants to play and the level of influence they’ll have. Without that clarity, trust will be lost.

- Impact. Engagement drives a strong “before and after,” where discussions are linked to outcomes requested by governments, businesses and the people. Knowing their direct role in shaping change strengthens trust further and ensures people are more likely to engage again in future conversations.

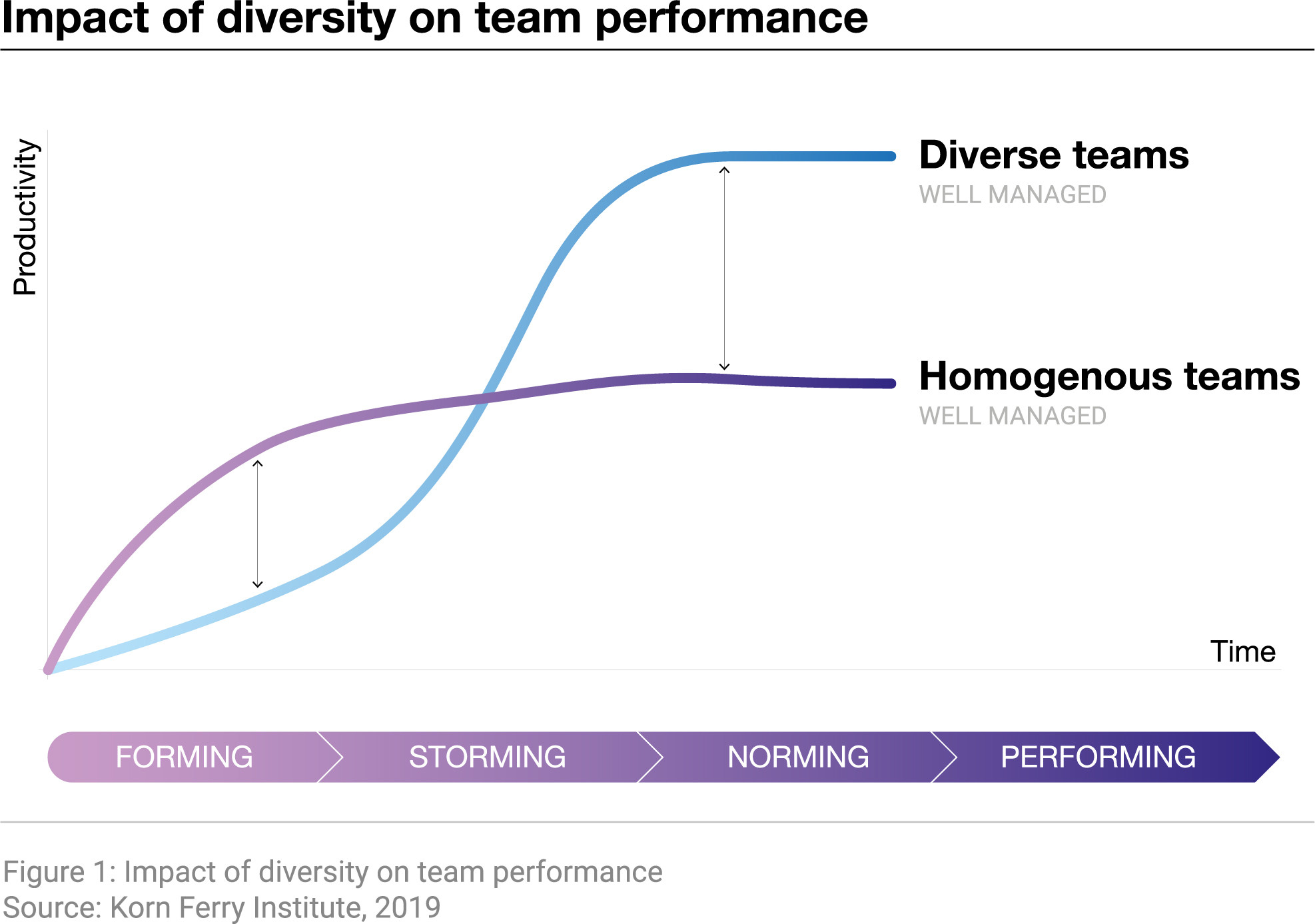

- Diversity. Successful conversations draw from a range of voices. To this end, structure is key. As you plan your outreach, consider the channels of engagement, allowing participants to choose from face-to-face meetings, written feedback, discussion groups or online forums so that a range of voices can be heard.

- Impartiality. To ensure that engagements are not biased or influenced by a subset of views, take stock of those participating and note which voices might be missing and which might be dominant. Relaying comments back to communities to get their reactions can also further shape discussions and add balance.

- Representation. Strong, engaged conversations are representative of their communities and countries and scaled to ensure small groups can still be heard.

How to run a successful engagement

Planning can create the right spaces for strong engagements. To make the most out of these opportunities, the following steps should be taken:

1. Define. To start, the design team must identify core elements such as principles, legal context and societal norms underpinning the current use of AI. These current rules and conventions outline which boundaries, safeguards and protections are already in place. Communicating these elements to participants helps focus discussions by letting the public know which concerns are already covered by regulation and how participants can contribute to the creation of new safeguards.

2. Discover. In this stage, the design team researches the issue and the current context, identifying how the engagement could fuel progress. This initial stage could take place with a small-scale workshop involving a few key stakeholders. This stage allows the designers to crystallise the issue at hand, the aspects where consultation is needed, and any goals or outcomes. Participants will trust the process more if they can see the pathway ahead, know they can contribute to the desired end goal, and understand they are helping to design some of the steps to reach the end goal.

3. Decide. Here, the design team identifies who should be included in the larger engagement. This might include stakeholders as well as members of the community with particular concerns about, or knowledge about, bias and algorithms. Hard-to-reach audiences need to be identified and decisions made on how best to reach these participants. Designers will also decide what level of influence the participants will have and the extent to which views will be listened to, respected, and used in the next stages. Trust will be lost if people participate and then find their views have been ignored.

4. Design. In this stage, the team takes what it has learned about the issue it is covering and the people involved to decide the best channels for engagement and dialogue. The shape of the engagement will depend on factors such as the intended participant list, the level of involvement sought by the participants, and the nature of the feedback wanted. For example, a small-scale engagement that seeks a specific group's views on how personal data is to be used, with the aim of feeding into a piece of legislation, will require different materials, meetings and levels of discussion than a general nationwide conversation on how human rights sit within digital technologies, with the aim of raising awareness about digital rights.

5. Analyse. Once the engagement has occurred and information has been gathered, the results need to be analysed and synthesised by the design team. The design team needs to look at the inputs and adjust for bias, vested interests, monopoly of views and strong voices. The findings will need to be presented to key stakeholders and other key audiences, including the participants. These findings will include recommendations on how the engagement research will be used (that is, will it inform or change the decision?) and the next steps.

6. Review. Finally, the whole process should be reviewed and evaluated, either by the design team or by an independent group. This will help identify what went well and what didn’t, giving valuable insights for future engagements. The review can be done in many ways (for instance, interviews or surveys with the participants and design team are a useful way to review the process from both sides). A key part of this process is providing honest feedback to participants on what happened with their input and how it has contributed to the next steps.

Open and honest engagement requires courage on both sides. Conversations on new technologies that have the potential to disrupt communities and economies are difficult. A carefully planned engagement helps signal transparency and humility to participants and acknowledges the fact that no one party has all the answers.

Engagements will inevitably result in differing views or roadblocks. The steps above can’t prevent disagreements, but if the designers are open and honest from the start, trust is built early and it’s possible to work through any disagreements. Through trust, it’s possible to yield the valuable insights and material that make the ultimate decisions stronger.

An involved and active community that trusts enough to engage is vital to a functioning democracy. Gaining and maintaining this trust requires an ongoing commitment, but one that is worthwhile, as community support and input make for better, stronger policy.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
