The neurosecurity stack: How we balance neurotechnology’s opportunity with security

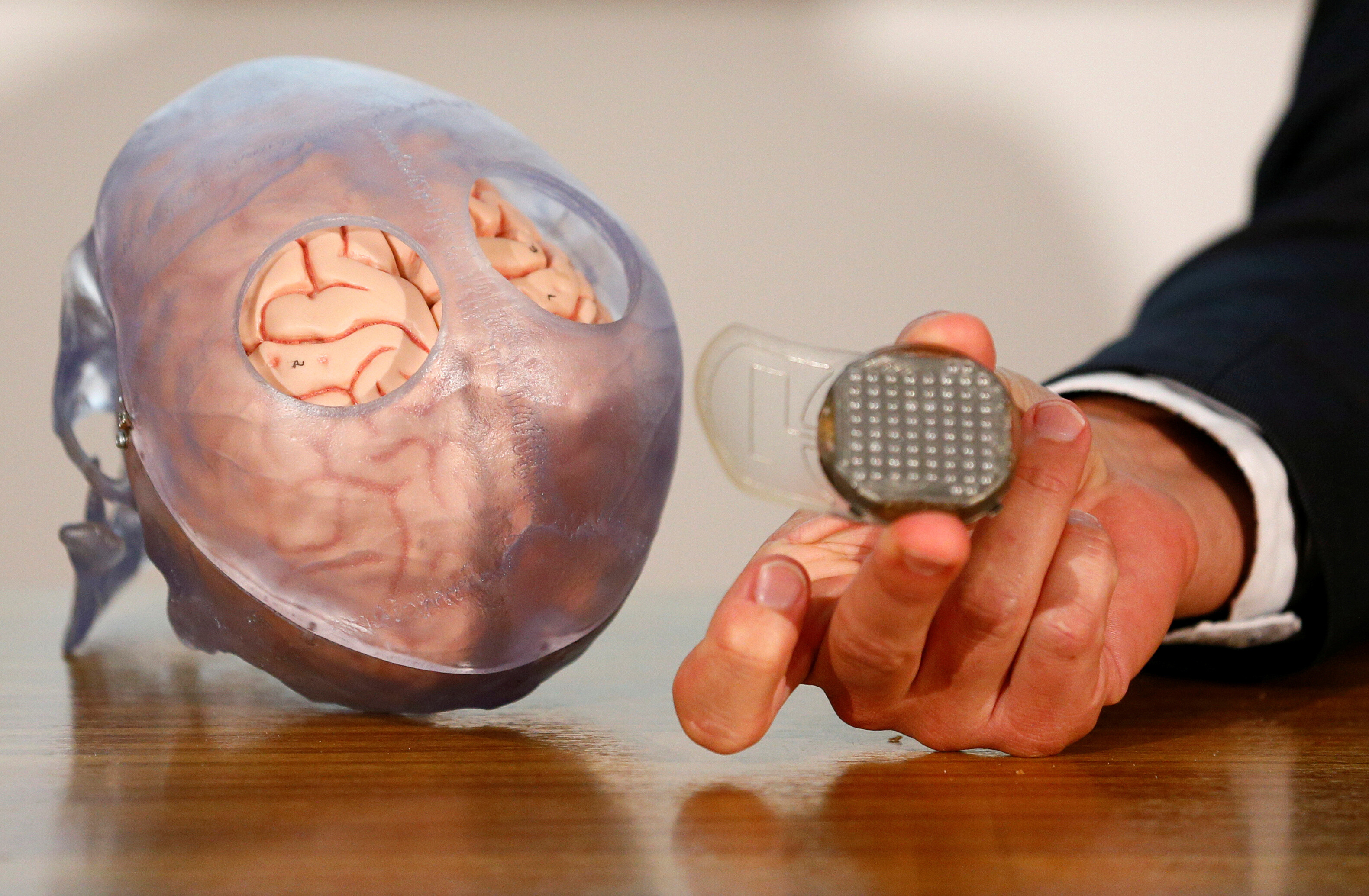

Neurotechnology data is sensitive and requires robust safeguards. Image: REUTERS/Emmanuel Foudrot

Hoda Al Khzaimi

Associate Vice-Provost for Research Translation and Entrepreneurship, New York University Abu Dhabi

- Neurotechnology is advancing rapidly, from medical implants to consumer brain-computer interfaces, bringing immense promise but also unprecedented risks.

- Neural data is uniquely sensitive, revealing thought and emotion, making security and governance essential.

- A holistic, layered security and governance framework is necessary to protect trust, safeguard identity and unlock the transformative potential of neurotechnology.

Neurotechnology is no longer confined to research labs or science fiction. From brain-computer interfaces restoring movement in people with paralysis to consumer devices promising enhanced focus or stress reduction, the field is advancing at a remarkable pace.

The global neurotechnology market is projected to surpass $24 billion by 2030, growing at double-digit rates. This expansion brings opportunity but also an urgent need to address new risks.

As Tom Oxley, CEO of Synchron and co-chair of the World Economic Forum’s Global Future Council on Neurotechnology, has emphasized:

“If we get the governance and security right, neurotechnology could become one of the most transformative tools for human progress, restoring lost functions, expanding learning and creating entirely new ways to connect and collaborate.”

This dual reality defines the field today: extraordinary promise that is contingent on security, resilience, ethical guardrails and trust.

Why neurodata is different

Unlike passwords, fingerprints or DNA, neural data exposes the private architecture of human thought. Breakthroughs now allow brain implants to decode silent speech and AI models to reconstruct visual experiences.

Such data reveals emotions, intentions and decisions. Unlike a password, it cannot be reset once compromised. Security risks are already visible. Over 70% of commercial neurotechnology devices contain exploitable vulnerabilities.

Demonstrations have extracted private information or manipulated neural signals. What once seemed speculative – brainjacking, cognitive manipulation and neuro-surveillance – is fast becoming feasible as AI-driven decoding advances.

However, no global framework exists for protecting neural data. While medical implants are regulated, consumer neurotech such as wellness headsets, gaming brain-computer interfaces (BCIs) and productivity wearables remains in a regulatory grey zone, despite recent steps such as California classifying neural data as “sensitive personal information.”

Without global guardrails, we risk advancing neurotechnology without safeguarding the very essence of identity.

The expanding neurotechnology landscape

The neurotechnology ecosystem now spans healthcare, consumer markets, defence and education, offering breakthroughs but also new risks. Medical implants such as deep-brain stimulators restore function for patients, yet have been shown to be vulnerable to wireless exploitation if safeguards fail.

Consumer BCIs for wellness, gaming and productivity often rely on Bluetooth or Wi-Fi, exposing them to spoofing and hijacking attacks. AI-driven neural decoders can now reconstruct speech, images and emotional states from brain signals but are susceptible to inversion and adversarial attacks that could falsify outputs.

Cloud-linked platforms amplify these risks, exposing neural data to ransomware, supply chain compromise and cross-border surveillance. Emerging fields, from quantum communication for secure transfer to AI-enhanced decoding and cloud-edge hybrids, promise new capabilities but still lack robust security and governance frameworks.

The lesson is clear: neurotechnology’s promise cannot be separated from its vulnerabilities, and protection must extend across the entire stack, from chip to cloud, from implants to wearables.

A holistic, layered approach

Securing neurotechnology cannot be achieved through a single safeguard; it requires a layered ecosystem of protections that combines technical resilience with effective governance. At its core is neurodata governance, treating brain data as critical infrastructure with transparency, informed consent and strict minimization.

A specialized security ecosystem must protect devices, AI models and cloud pipelines through secure protocols, monitoring and anomaly detection. Signal security should reach the brain-signal level, using lightweight encryption, authentication and digital signatures to prevent spoofing or injection, validated through red-teaming.
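To make the idea of signal-level authentication concrete, the following is a minimal sketch, not a description of any real device protocol. It assumes a hypothetical frame layout (a 64-bit counter followed by 16-bit samples) and a secret key already shared between a headset and its receiver. It uses an HMAC, a symmetric message authentication code, rather than the asymmetric digital signatures mentioned above, because an HMAC is cheap enough for constrained hardware. The function names `pack_frame` and `unpack_frame` are illustrative only.

```python
import hashlib
import hmac
import struct

TAG_LEN = 32  # length in bytes of an HMAC-SHA256 tag


def pack_frame(key: bytes, seq: int, samples: list[int]) -> bytes:
    """Serialize a counter plus 16-bit samples and append an HMAC tag."""
    # Hypothetical layout: big-endian 64-bit counter, then signed 16-bit samples.
    payload = struct.pack(f">Q{len(samples)}h", seq, *samples)
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def unpack_frame(key: bytes, frame: bytes, last_seq: int) -> tuple[int, list[int]]:
    """Verify the tag and the counter; raise ValueError on any mismatch."""
    if len(frame) < 8 + TAG_LEN:
        raise ValueError("frame too short")
    payload, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("bad tag: frame was altered or forged")
    sample_count = (len(payload) - 8) // 2
    seq, *samples = struct.unpack(f">Q{sample_count}h", payload)
    # A counter that does not advance suggests a replayed frame.
    if seq <= last_seq:
        raise ValueError("stale counter: possible replay")
    return seq, samples
```

In this sketch, a receiver that tracks the last accepted counter rejects both injected frames (the tag fails) and recorded frames played back later (the counter is stale). Key provisioning and rotation, which matter at least as much in practice, are out of scope here.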

AI security requires adversarial testing, bias audits, explainability and cryptographic watermarking for model integrity. Cloud and edge systems must favour local processing and secure cloud dependencies with symmetric encryption, post-quantum cryptography and homomorphic encryption for privacy-preserving analytics.
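Favouring local processing can be illustrated with a small sketch. It assumes, purely for illustration, that a wellness application needs only coarse per-window statistics rather than the raw signal; the statistics chosen and the function names `summarize_window` and `upload_payload` are hypothetical. Whether such summaries are enough for a given product, and how much they still reveal about the wearer, would need case-by-case assessment.

```python
import math


def summarize_window(samples: list[float]) -> dict[str, float]:
    """Reduce one window of raw samples to a few coarse statistics."""
    if not samples:
        raise ValueError("empty window")
    n = len(samples)
    mean = sum(samples) / n
    rms = math.sqrt(sum(x * x for x in samples) / n)
    peak = max(abs(x) for x in samples)
    return {"mean": mean, "rms": rms, "peak": peak}


def upload_payload(raw_windows: list[list[float]]) -> list[dict[str, float]]:
    """Build what leaves the device: summaries only, never raw samples."""
    return [summarize_window(window) for window in raw_windows]
```

The design choice is that raw samples stay on the device and only the reduced summaries are sent onward, which shrinks what a cloud breach could expose. Encryption in transit and at rest would still apply to whatever is uploaded.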

Supply chain integrity relies on hardware roots of trust, zero-knowledge proofs and blockchain-backed traceability to prevent tampered circuits. These safeguards must be anchored in governance frameworks that extend beyond medical device rules to consumer neurotech, dual-use risks and cross-border data.
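The traceability idea can be sketched in its simplest form as a hash-chained log, where each record commits to the one before it. This is a deliberately reduced stand-in for blockchain-backed traceability: it has no distributed consensus, no signatures and no hardware root of trust, and the event fields and function names are hypothetical. It shows only why editing an earlier record becomes detectable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash an event together with the hash of the entry before it."""
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_event(log: list[dict], event: dict) -> None:
    """Append an event, linking it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"prev": prev_hash, "event": event, "hash": entry_hash(prev_hash, event)}
    )


def verify_log(log: list[dict]) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Under these assumptions, a manufacturer could append one event per production stage, and an auditor holding the final hash could confirm that no earlier stage was rewritten. An attacker able to rebuild the whole chain would still defeat it, which is why real systems anchor the latest hash somewhere the attacker cannot change.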

Finally, a trust taxonomy should define what “trustworthy neurotechnology” means – embedding resilience, ethics and cryptographic accountability.

Towards responsible innovation

The path forward must frame neurotechnology not as a source of fear but as an opportunity anchored in responsibility. Securing neural systems is about more than preventing misuse; it is about enabling their safe, inclusive and transformative potential.

By embedding resilience from chip to policy and by building governance architectures that align industry, governments and civil society, we can ensure neurotechnology strengthens human dignity rather than erodes it.

The coming decade will decide whether it becomes a trusted human-machine partnership or a new frontier of vulnerability. The responsibility is collective. If approached wisely, neurotechnology can drive innovation while safeguarding the very core of human identity.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
