Tackling COVID-19 requires better governance of AI and other frontier technologies – here’s why

Image: CDC

- The COVID-19 crisis puts new and particular demands on government and corporate leadership.

- Companies and agencies using artificial intelligence/machine learning tools will need to develop real-world governance practices to maintain consumer confidence in the medium and long term.

The COVID-19 crisis is shining a bright light on the rapid development and adoption of artificial intelligence/machine learning (AI/ML) in high-impact, real-life, real-time settings. Virus or no virus, advances in technology (along with profound demographic shifts worldwide and climate change) guarantee the coming decade will be one of transformation and dislocation. Already, however, we can see that AI/ML is central to the COVID-19 picture, and when put to use, these technologies require concerted assessment, thoughtful governance and careful handling by the people using them. This crisis therefore puts new and particular demands on government and corporate leadership.

Our most effective, immediate and distributed tools for limiting the spread of COVID-19 basically define “low-tech”: keeping physical distance, staying home, washing hands, and staying physically fit, to the extent possible. Our grandmothers would be proud. But the companions to these measures, which will largely determine how well (and how quickly) we emerge from this crisis, are tools and techniques driven by AI/ML, with applications so new and fantastic that we don’t yet know their names, let alone how to assess or manage their promise and risk, or how to integrate their outputs into broader strategies and human institutions.

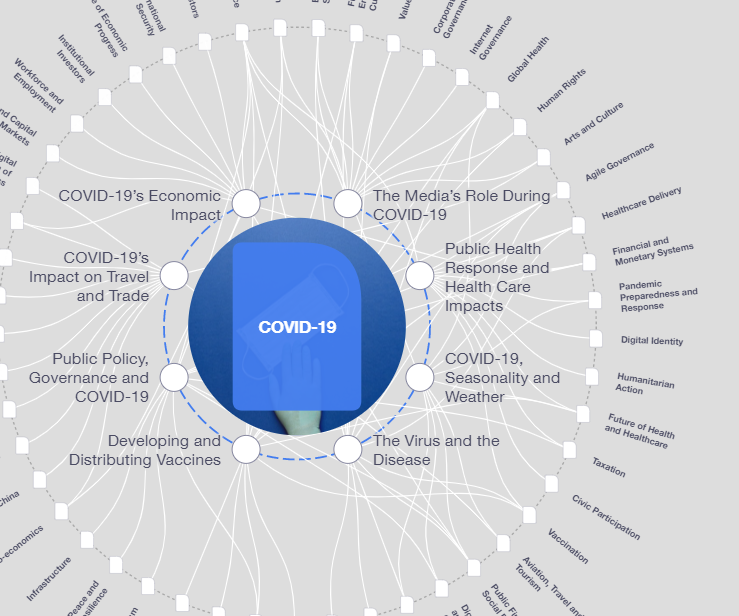


To grossly oversimplify, any AI/ML model uses vast quantities of historical data to produce powerful predictions about the future. These predictions and insights by definition exceed the capacity of human processing, which can be seen as both their strength and their weakness.

- The technical accuracy and quality of those predictions are a function of a) the quality, completeness and lack of bias in the underlying data used to train the model, b) the data used to generate the prediction, c) the design and robustness of the algorithm itself and d) what happens to a model when it encounters real-world data and impact.

- The appropriateness of humans relying on those predictions turns on how well people a) define, select and align use cases with the current capability of the technology (e.g., is the AI/ML tool appropriate for the problem being solved), b) design the models to avoid bias and error and other adverse impacts (intended and unintended), c) understand and contextualize the specific impact of relying on predictions (which differs by use case) and d) commit to the active supervision of a model’s function over time.

In other words, the technical aspects of AI/ML tools are only half the story; the other half is how we – the people – build, use and govern them. And this is where the current COVID-19 crisis presents both a key opportunity and a key challenge: by accelerating the long-term adoption of AI, it also makes it more urgent for government and corporate leadership to pay attention to how we design and manage powerful, early-stage technologies.

How to protect human rights and values is the subject of robust discussion and early efforts at regulation. Right now, however, businesses and governments are already making decisions about how these tools are used and what impacts they will have. Yet there are no common standards for internal quality or external uses, nor one-size-fits-all solutions for different applications of AI/ML. So every company and every agency using AI/ML tools will need to develop real-world governance practices, at a use-case level, that reflect both their broader missions and quickly changing market standards.

Organizations that do not prioritize the governance of these technologies as part of their broader strategic and oversight work risk avoidable loss of customer and public confidence, disruption of operations, legal liability, and crises of trust and reputation. At some point, it will be unthinkable for businesses or agencies using AI/ML tools not to have a plan or strategy for managing these risks along with the benefits. But that work really needs to start now, as this public health crisis hastens the use of these tools in settings with direct impact on patients, healthcare providers and the rest of us.

COVID-19 and the questions it raises

- Today: Accelerating Medical and Drug Discovery

To be sure, the quality of these tools and their promise vary, but given that we already rely on AI to accelerate disease insights and drug development, we should be accelerating work on guardrails too.

Among the bigger governance challenges is assessing the reliability, safety and fairness of such tools. In many instances, a well-designed audit framework would enable leaders to evaluate whether a system is trustworthy, but this can be challenging in practice.

- Tomorrow: Enabling Population Management and Limiting Disease Spread

Tracking COVID-19 patients and their contacts is widely understood to be central to the effort to contain the spread of the virus and, eventually, to loosen the restrictions on our mobility and economic activity. The AI/ML-enabled contact tracing tools that were so effective in Singapore and South Korea are now, in some form, likely coming to the EU and US.

Governments and businesses are partnering to discuss privacy and efficacy considerations with the goal of delivering both, and it is a false choice to assume that they must pick one or the other. How this balance is achieved and maintained will depend both on what we demand of the technologies’ technical features and on what we demand of developers, data handlers, governments and each other. Beyond the collection and protection of personal data, important questions remain about the specific purposes for which that data is used, for how long, in combination with what other datasets and technologies, and with what guardrails for civil liberties. As others have reminded us, putting appropriate AI governance architecture in place is inherent to the effectiveness of the solution itself.

- The Day After Tomorrow: Restarting the Economy, Optimizing Resources and Remote Everything

Beyond specific disease management, post-COVID-19 economies will also need to rely on AI/ML tools to get going. These tools will be used in everything from assessing employees and matching them with opportunities, to restocking and reconstructing supply chains, to delivering and customizing education for millions of students. The pressure to adopt them will be great, as businesses restart with a focus on efficiency, flexibility and reducing costs.

One interesting question is how well models trained on historical data will predict post-COVID-19 conditions. Arguably, the processing power of AI/ML is key to helping businesses and governments grasp the consequences of the profound changes we are experiencing quickly enough to make needed adjustments. At the same time, the risks of error and of over-reliance on historical patterns are particularly high. This adds to the known risks of bias, runaway results and other under- or over-performance characteristics. And it adds to the imperative that businesses integrate these tools into thoughtful systems that incorporate human input, experience and context.

AI Policy into Practice: Managing AI Adoption for Long-term Success

All of this raises the stakes for senior corporate and governmental leaders to understand the use cases for these technologies, their genuine (vs. aspirational) capabilities and the special ways in which these tools – which are designed to outstrip human analytical capacity – must be managed for the resiliency of the whole organization. When does it really make sense to use the technologies? Who is using them? How is their data managed? Who is responsible for them? How are teams trained to develop, assess and operate them? How is compliance over time incentivized and measured?


There are no packaged or one-size-fits-all solutions. Generalized AI policies are plentiful (some very good), and regulation is on the horizon. Now, however, the challenge for businesses and governments is how to operationalize these principles. This will require close, careful work tailored to the specific company or government, use cases and technology, and designed to accommodate the break-neck pace of continued change and challenges.

Some of this work is best overseen at the board level, with an eye to developing AI/ML strategies and cultures that encourage governance in the name of resilience and long-term viability. Some is best done by management, supporting that strategic and cultural work and operationalizing processes for determining the quality and impact of AI/ML tools and other frontier technologies, and their alignment with the organization’s mission. In all events, to the extent that few boards and executives already have the specific experience needed to exercise oversight duties in this evolving environment, we should develop that experience quickly in the post-COVID-19 world. Likewise, any real recovery from this crisis will require companies and agencies to develop strategies, policies and plans for specifically when, where and how they will use these technologies (and when they will not).

AI/ML is enabling solutions that were previously unknown, on a timescale previously unimaginable. These solutions will be key to our recovery from the current crisis. Over the longer term, they will also change how we work and interact with each other and with technology, how we construct forecasts and share data, how we hire and are hired, and who knows what about us. There is a lot to be excited about: we can all support better diagnostics and therapies, less disease spread, and more efficient and robust labor markets and supply chains. A quicker recovery will lead to shared benefits. With a commitment to practical, effective governance, we can maintain those benefits for the long term.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

